Flex Logix Releases InferX X1 Edge Inference Co-Processor

May 06, 2019

Press Release

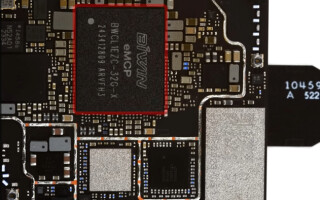

The co-processor is based on an inference-optimized nnMAX core and integrates embedded FPGA (eFPGA) interconnect technology to deliver up to 10 times the throughput of existing edge inferencing ICs.

Mountain View, CA. Flex Logix Technologies, Inc.'s InferX X1 edge inference co-processor is based on an inference-optimized nnMAX core and integrates embedded FPGA (eFPGA) interconnect technology.

It provides high throughput in edge applications with a single DRAM, yielding much higher throughput/watt than before, and performs optimally at low batch sizes, which are typical of edge applications where there is usually only one camera/sensor.

InferX X1 is designed for half-height, half-length PCIe cards for edge servers and gateways and is programmed with the nnMAX Compiler using TensorFlow Lite or ONNX models.

“The difficult challenge in neural network inference is minimizing data movement and energy consumption, which is something our interconnect technology can do amazingly well,” said Flex Logix’s CEO, Geoff Tate. “While processing a layer, the datapath is configured for the entire stage using our reconfigurable interconnect, enabling InferX to operate like an ASIC, then reconfigure rapidly for the next layer. Because most of our bandwidth comes from local SRAM, InferX requires just a single DRAM, simplifying die and package, and cutting cost and power.”
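The configure-then-compute flow Tate describes can be pictured with a toy sketch. This is only an illustration of the control flow (set up the datapath for one layer, run that layer, reconfigure for the next); the `ToyAccelerator` class, its methods, and the dense-plus-ReLU layer are hypothetical and say nothing about how the nnMAX core or the nnMAX Compiler is actually implemented.

```python
# Toy model only: every name here is a hypothetical illustration,
# not part of any Flex Logix hardware or software interface.
from dataclasses import dataclass, field


@dataclass
class ToyAccelerator:
    reconfigurations: int = 0                    # how many times the datapath was set up
    _weights: list = field(default_factory=list)  # weights held for the current layer

    def configure(self, weights):
        """Set up the datapath for one layer and hold its weights locally."""
        self._weights = weights
        self.reconfigurations += 1

    def run_layer(self, activations):
        """Compute one dense layer followed by ReLU using the held weights."""
        outputs = []
        for row in self._weights:
            acc = 0.0
            for w, a in zip(row, activations):
                acc += w * a                     # multiply-accumulate
            outputs.append(max(acc, 0.0))        # ReLU
        return outputs


def infer(accelerator, layers, x):
    """Run a single input (batch size 1) through the network, one layer at a time."""
    for weights in layers:
        accelerator.configure(weights)           # reconfigure for this layer
        x = accelerator.run_layer(x)             # fixed datapath while the layer runs
    return x


if __name__ == "__main__":
    acc = ToyAccelerator()
    layers = [[[1.0, -1.0], [0.5, 0.5]], [[1.0, 1.0]]]
    print(infer(acc, layers, [2.0, 1.0]), acc.reconfigurations)
```

In this sketch the datapath is set up once per layer, so a two-layer network triggers two reconfigurations regardless of how many multiply-accumulates each layer performs.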

Go to www.flex-logix.com to learn more.