"Contextualizing" sensor fusion algorithm development challenges in smartphones and wearables

November 05, 2015


As we all know well, there are two distinct camps in the smartphone market: Apple and Android. On the one hand you have Apple, whose strict development policies and well-defined hardware schematics make things pretty straightforward for developers: play by the rules or you’re out, but if you’re in, not much is left to guesswork.

On the other hand, you have Google and its Android ecosystem. Google has been decidedly loose with development guidance for Android platforms, and although Android continues to gain market share, this has led to a lot of “different” from device to device, to put it mildly.

The problem for those working in the Android ecosystem is that, in the absence of hard-and-fast rules, developing fast gets harder. Wearables are a perfect example. Say Google pushes an update to Android Wear that includes new features a device manufacturer wants to support, such as the movement gestures added in the recent Lollipop 5.1 update that allow users to turn smartwatches on and off or cycle through screens with a flick of the wrist. Even if the required sensors already exist on the target device, the most complex aspect of enabling these features (for both app developers and, eventually, end users) lies in creating sensor fusion algorithms that turn those sensors’ output data into something meaningful. Building these algorithms from scratch is time consuming, to say the least, and in a world where Google can issue massive updates with the click of a button, it leaves hardware manufacturers consistently behind the eight ball.
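To make “sensor fusion” a little more concrete, here is a minimal sketch of one of the simplest textbook fusion techniques, a complementary filter. It blends the integrated gyroscope rate (smooth but prone to drift) with the tilt implied by the accelerometer’s gravity reading (noisy but drift-free). This is a generic illustration, not PNI’s or Google’s algorithm. The function name, the `(gyro_rate, accel_x, accel_z)` sample layout, and the 0.98 blending weight are all assumptions.

```python
import math


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyro rate and accelerometer tilt into a single-axis tilt estimate (radians).

    samples: iterable of (gyro_rate_rad_s, accel_x, accel_z) tuples (hypothetical layout).
    dt: sample period in seconds.
    alpha: weight on the integrated gyro angle (0.98 is an assumed, typical choice).
    """
    angle = None
    estimates = []
    for gyro_rate, ax, az in samples:
        # Tilt implied by the gravity vector: noisy, but it does not drift.
        accel_angle = math.atan2(ax, az)
        if angle is None:
            # Initialise from the accelerometer on the first sample.
            angle = accel_angle
        else:
            # Integrate the gyro (smooth, drifts), then pull gently toward the accel tilt.
            angle = alpha * (angle + gyro_rate * dt) + (1 - alpha) * accel_angle
        estimates.append(angle)
    return estimates


# Stationary device tilted 30 degrees; the gyro has a constant 0.01 rad/s bias.
# 1000 samples at 100 Hz = 10 s of data.
tilt = math.radians(30)
samples = [(0.01, math.sin(tilt), math.cos(tilt))] * 1000
print(math.degrees(complementary_filter(samples, dt=0.01)[-1]))  # stays within about 0.3 degrees of 30
```

With this bias, integrating the gyro alone would drift by 0.1 rad (about 5.7 degrees) over the 10 s. The filter instead settles about 0.005 rad from the accelerometer tilt, because the accelerometer term keeps correcting the accumulated error.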

Co-processing out algorithm development challenges

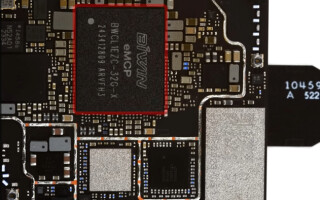

So, how do you get hardware up to speed in a world of continuous delivery and short consumer lifecycles? At the MEMS Executive Congress in Napa, CA, George Hsu, Chairman of the Board, and Becky Oh, President and CEO of PNI Sensor, explained how they’ve taken a step towards an answer with the release of the SENtral-A2 ultra-low-power co-processor (Figure 1).

The SENtral-A2 is the second generation of PNI’s SENtral sensor hubs for Android Lollipop/Marshmallow sensors. It includes not only the algorithms for the hardware sensors that devices need to pass Google’s Android Compatibility Test Suite (CTS), but also additional, optional algorithms that can support applications ranging from push-up counting to swim stroke recognition. What makes this possible on a single 1.7 mm² chip is an architecture built around an Algorithm Context Framework, which extracts features from sensor outputs so that they can be used for activity, context, and gesture recognition in new applications. Taking an accelerometer as an example, the SENtral-A2 can extract various features from the physical domains the sensor captures, as illustrated in Figures 2 and 3.
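As a rough illustration of what extracting features from an accelerometer’s output can look like, the following sketch computes three generic features over a window of samples. These are the mean magnitude, the standard deviation of the magnitude (a proxy for motion intensity), and the dominant frequency (for example, step cadence). The function name, the feature names, the `(x, y, z)` window layout, and the choice of features are assumptions for illustration only. The features PNI actually extracts are those shown in Figure 2, not necessarily these.

```python
import math


def extract_features(window, sample_rate_hz):
    """Compute illustrative features from a window of 3-axis accelerometer samples.

    window: list of (x, y, z) tuples in m/s^2 (assumed layout).
    Returns a dict with hypothetical feature names.
    """
    # Vector magnitude removes dependence on how the device is oriented.
    mags = [math.sqrt(x * x + y * y + z * z) for x, y, z in window]
    n = len(mags)
    mean = sum(mags) / n
    # Population standard deviation of the magnitude reflects motion intensity.
    std = math.sqrt(sum((m - mean) ** 2 for m in mags) / n)
    # Naive DFT of the mean-removed magnitude; keep the strongest non-DC bin.
    centred = [m - mean for m in mags]
    best_k, best_power = 0, 0.0
    for k in range(1, n // 2 + 1):
        re = sum(c * math.cos(2 * math.pi * k * i / n) for i, c in enumerate(centred))
        im = -sum(c * math.sin(2 * math.pi * k * i / n) for i, c in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_k, best_power = k, power
    # Convert the bin index to Hz: bin spacing is sample_rate / window length.
    dominant_hz = best_k * sample_rate_hz / n
    return {"mean_mag": mean, "std_mag": std, "dominant_hz": dominant_hz}


fs = 50  # assumed sample rate in Hz
# Synthetic 2 s window: gravity on z plus a 2 Hz "bounce" of amplitude 2 m/s^2.
window = [(0.0, 0.0, 9.81 + 2 * math.sin(2 * math.pi * 2 * i / fs)) for i in range(100)]
print(extract_features(window, fs))  # dominant_hz comes out at 2.0
```

The naive O(n²) DFT keeps the sketch dependency-free. On a power-constrained hub, an FFT or fixed-point implementation would be the more likely choice.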

![[Figure 2 | Using the SENtral-A2 Context Framework for algorithm creation, features can be extracted based on the physical domain of the sensor output. Shown here is an example of the features achievable from an accelerometer.]](https://data.embeddedcomputing.com/uploads/articles/wp/1955/563b883391eef-Screen+Shot+2015-11-05+at+9.47.17+AM.png)

An added benefit of this approach is that, as with any data-driven algorithm, the more data is acquired, the more precise the algorithm can become. As databases of information are compiled, algorithms can be fine-tuned to the input feature set and the desired outcome, so applications can evolve into more customized, optimized iterations of themselves.
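One generic way a classifier can be fine-tuned as labelled data accumulates is a nearest-centroid model whose per-class centroids are running means. The sketch below uses that textbook technique and is not PNI’s method. The class name, the two-element `(std_mag, dominant_hz)` feature vectors, and all numeric values are hypothetical. The activity labels echo those in Figure 3.

```python
import math


class NearestCentroidClassifier:
    """Toy activity classifier whose class centroids are refined as labelled data arrives."""

    def __init__(self):
        self.sums = {}    # label -> running sum of feature vectors
        self.counts = {}  # label -> number of labelled vectors seen

    def update(self, features, label):
        """Fold one labelled feature vector into that label's running mean."""
        if label not in self.sums:
            self.sums[label] = [0.0] * len(features)
            self.counts[label] = 0
        self.sums[label] = [s + f for s, f in zip(self.sums[label], features)]
        self.counts[label] += 1

    def centroid(self, label):
        """Current mean feature vector for a label."""
        return [s / self.counts[label] for s in self.sums[label]]

    def predict(self, features):
        """Return the label whose centroid is nearest in Euclidean distance."""
        if not self.sums:
            raise ValueError("no labelled data yet")
        return min(self.sums, key=lambda label: math.dist(features, self.centroid(label)))


# Hypothetical (std_mag, dominant_hz) feature vectors for three activities.
clf = NearestCentroidClassifier()
clf.update((0.05, 0.0), "standing")
clf.update((1.5, 1.8), "walking")
clf.update((4.0, 2.8), "jogging")
print(clf.predict((1.4, 1.9)))  # prints: walking
```

Each call to `update` moves a class centroid toward the mean of all examples seen for that class. As more representative data is collected, the decision boundaries can shift toward better separation of the activities.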

“Context and activity today is lacking a lot of data,” Hsu said in a meeting. “[The SENtral-A2] allows data to be layered and aggregated over time to create new, better sensors.”

“There’s got to be a middle ground that allows the ‘instant updates’ of the Google fashion to translate to the development cycles of hardware manufacturers,” Hsu continued. “The area is very much in flux because of Google’s lack of definition. Everything is subject to change, so everything needs to be redefined.”

A list of the algorithms available in the SENtral-A2 is provided below.

![[Figure 4 | A list of the standard and optional algorithms available in the SENtral-A2.]](https://data.embeddedcomputing.com/uploads/articles/wp/1955/563b85575d72c-Screen+Shot+2015-11-04+at+5.51.17+PM.png)

Sampling of the SENtral-A2 begins in December. For more information, visit www.pnicorp.com/products/sentral-a.

![[Figure 3 | Features can then be used to create classifications for application development, for example, to determine whether a smartphone or wearable is standing, walking, jogging, cycling, or in a vehicle.]](https://data.embeddedcomputing.com/uploads/articles/wp/1955/563b8609edce5-Screen+Shot+2015-11-05+at+9.38.00+AM.png)