COM-HPC Integrates IPMI to Improve QoS for Edge Servers

September 28, 2021


PICMG has launched a COM-HPC interface specification for managing embedded systems platforms. The goal is to help edge server engineers manage systems remotely. For example, if a system hangs, an IT administrator can trigger a reset remotely, with the same effect as pressing the reset button in person on a factory floor or at another site. The specification targets edge computer designs based on COM-HPC Computer-on-Modules, with a view to simplifying maintenance and improving quality of service (QoS).

Remote management capabilities – up to and including out-of-band management – are standard features for IT administrators. These capabilities encompass monitoring system functionality, installing new updates and patches, and troubleshooting issues without being physically present in server rooms.

It is standard practice for many IT service providers to remotely access customers’ on-premises servers or to host them somewhere in the cloud. The remote management abilities supporting this tried-and-true practice will extend to edge server and gateway layer technologies with the arrival of the new PICMG COM-HPC interface specification (Figure 1). As enablers of digitization and the IIoT, edge server and gateway layer technologies require remote management capabilities to bridge the gap between business-grade IT and industrial-grade operational technology (OT).

Figure 1. The COM-HPC standard is designed for the new, distributed edge computing layer. Service providers for this new IT layer therefore need comprehensive remote management features similar to those available for distributed on-premises or cloud equipment.

Engineers designing edge layer platforms based on Computer-on-Modules typically want to implement these capabilities in a way that can be tailored to specific demands. To meet this need, PICMG introduced a COM-HPC sub-specification for system management. So as not to reinvent the wheel, parts of the COM-HPC sub-specification draw on the Intelligent Platform Management Interface (IPMI) specification.

Let’s take a deeper dive into the COM-HPC sub-specification dedicated to the system management interface to understand how it benefits COM-HPC designs.

Longevity and Stability Matter

The job of improving edge server QoS fell to IPMI because it has been around since 1998, has reached a robust state following additional revisions released in 2001 and 2004, and enjoys general acceptance. The PICMG subcommittee also used the Redfish specification, which is based on a Representational State Transfer (RESTful) API and continues to gain new features.

The IPMI specification defines the protocols, interfaces, and architecture for monitoring and managing a computer subsystem (Figure 2). IPMI standardizes the format for describing low-level hardware as well as the format for sending messages to and receiving messages from a baseboard management controller (BMC).

Figure 2. IPMI calls can be sent either over the network to a remote system or to a local subsystem. In most cases, the modularity of a system is a reason to extend the IPMI functions to subsystems as well, such as Computer-on-Modules.

IPMI messages can either be sent over the network to a remote system’s BMC or from a BMC to a local subsystem, such as a power supply. This versatility with regard to sending IPMI messages makes it possible to divide complex administrative tasks into several subareas.

The messages can query the current state of the hardware or direct the BMC to act – for example, instruct the BMC to increase the system cooling, tell the system to reboot, or read a sensor. Enabling management tasks to be offloaded to a dedicated physical hardware component lessens the burden on the host hardware and operating system. The IPMI specification also decouples system management from the target platform so that system management functions can be initiated even when the target platform is down.
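To make the idea of "query or act" messages concrete, here is a minimal Python sketch of two standard IPMI requests: Chassis Control (for a reboot or power change) and Get Sensor Reading. The network function and command codes are taken from the IPMI specification; the `IpmiRequest` type and the helper function names are illustrative assumptions, and the sketch only builds the request contents without sending anything to a BMC.

```python
from typing import NamedTuple


class IpmiRequest(NamedTuple):
    """Illustrative container (not part of any IPMI library)."""
    netfn: int   # network function code; request codes are even
    cmd: int     # command number within that network function
    data: bytes  # command-specific request bytes


# Network function codes defined by the IPMI specification.
NETFN_CHASSIS = 0x00
NETFN_SENSOR_EVENT = 0x04

# Request data values for the Chassis Control command (cmd 0x02).
CHASSIS_ACTIONS = {
    "power_down": 0x00,
    "power_up": 0x01,
    "power_cycle": 0x02,
    "hard_reset": 0x03,
}


def chassis_control(action: str) -> IpmiRequest:
    """Build a Chassis Control request that directs the BMC to act."""
    return IpmiRequest(NETFN_CHASSIS, 0x02, bytes([CHASSIS_ACTIONS[action]]))


def get_sensor_reading(sensor_number: int) -> IpmiRequest:
    """Build a Get Sensor Reading request that queries hardware state."""
    if not 0 <= sensor_number <= 0xFF:
        raise ValueError("sensor number must fit in one byte")
    return IpmiRequest(NETFN_SENSOR_EVENT, 0x2D, bytes([sensor_number]))


reset = chassis_control("hard_reset")
reading = get_sensor_reading(0x30)  # 0x30 is an arbitrary example sensor
```

How such a request reaches the BMC (LAN, IPMB, or a host interface) is a separate transport question, which is why the sketch keeps the payload independent of any framing.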

All these functions have made the IPMI specification a de facto standard for managing server hardware. The specification’s longevity is ensured because the developers of the specification deliberately kept the required commands very simple, leaving no room for misunderstanding.

The IPMI specification’s flexible framework enables adding new Network Functions (NetFn) and instructions beyond the original specification’s mandatory and optional commands. Various industry working groups have already benefitted from this freedom and defined their own specific Network Functions and commands to handle technologies and features that were not thought of during the specification’s creation.
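The way network functions partition the command space can be sketched in a few lines of Python. The code ranges below follow the network function table in the IPMI specification, where even codes are requests and odd codes are the matching responses; the function name `describe_netfn` is an assumption made for this illustration. The Group Extension and OEM ranges are where bodies other than the original authors can place their own commands, with the first data byte identifying the defining body.

```python
# Even (request) NetFn codes named in the IPMI specification.
NETFN_NAMES = {
    0x00: "Chassis",
    0x02: "Bridge",
    0x04: "Sensor/Event",
    0x06: "App",
    0x08: "Firmware",
    0x0A: "Storage",
    0x0C: "Transport",
    0x2C: "Group Extension",
    0x2E: "OEM/Group",
}


def describe_netfn(netfn: int) -> str:
    """Classify a 6-bit NetFn code and report whether it is a request or response."""
    if not 0 <= netfn <= 0x3F:
        raise ValueError("NetFn is a 6-bit value")
    direction = "response" if netfn & 1 else "request"
    base = netfn & 0x3E  # clear the request/response bit
    if base in NETFN_NAMES:
        name = NETFN_NAMES[base]
    elif 0x30 <= base <= 0x3F:
        name = "Controller-specific OEM/Group"
    else:
        name = "Reserved"
    return f"{name} {direction}"


for code in (0x06, 0x07, 0x2C, 0x30):
    print(hex(code), describe_netfn(code))
```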

Many Remote Management Options

For Computer-on-Modules systems, the flexible framework simplifies making the adjustments necessary for adding remote management. One adjustment concerned the COM-HPC Embedded EEPROM (EEEP). The EEEP contains information on vendors, memory slots, networking capabilities, and more. Much of this information is the same as that stored in the IPMI Field Replaceable Unit (FRU). To avoid duplicating this data, the COM-HPC remote management features include recommendations on how an IPMI device should populate the FRU with the information contained in the EEEP device.
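As a rough illustration of populating FRU data from module information, the Python sketch below builds a FRU common header and a board info area in the layout defined by the IPMI FRU information storage format. The `eeep` dictionary and its keys are purely hypothetical stand-ins for data read from the EEEP; the actual EEEP layout and the field mapping recommended by the COM-HPC specification are not reproduced here.

```python
def zero_checksum(data: bytes) -> int:
    """Return the byte that makes the 8-bit sum of data plus checksum zero."""
    return (-sum(data)) & 0xFF


def common_header(board_offset: int) -> bytes:
    """Build the 8-byte FRU common header (offsets in 8-byte units, 0 = absent)."""
    # version, internal use, chassis, board, product, multirecord, pad
    header = bytes([0x01, 0x00, 0x00, board_offset, 0x00, 0x00, 0x00])
    return header + bytes([zero_checksum(header)])


def encode_field(text: str) -> bytes:
    """Encode a text field with a type/length byte (type 0b11 = 8-bit ASCII/Latin-1)."""
    raw = text.encode("latin-1")
    if not 2 <= len(raw) <= 63:
        raise ValueError("this sketch handles text fields of 2 to 63 bytes")
    return bytes([0xC0 | len(raw)]) + raw


def board_info_area(manufacturer: str, product: str,
                    serial: str, part_number: str) -> bytes:
    """Build a board info area padded to a multiple of 8 bytes."""
    area = bytearray([0x01, 0x00, 0x00])  # version, length (set below), language
    area += bytes(3)                      # manufacturing date/time, 0 = unspecified
    for text in (manufacturer, product, serial, part_number):
        area += encode_field(text)
    area += bytes([0xC0, 0xC1])           # empty FRU File ID, end-of-fields marker
    area += bytes(-(len(area) + 1) % 8)   # pad so area plus checksum is 8-aligned
    area[1] = (len(area) + 1) // 8        # area length in 8-byte units
    area.append(zero_checksum(area))
    return bytes(area)


# Hypothetical EEEP content; real data would be read from the module.
eeep = {
    "vendor": "ExampleVendor",
    "product": "ExampleModule",
    "serial": "SN0001",
    "part_number": "PN-0001",
}

fru_image = common_header(board_offset=1) + board_info_area(
    eeep["vendor"], eeep["product"], eeep["serial"], eeep["part_number"]
)
```

Deriving the FRU image from a single source record in this way is one option for avoiding two independently maintained copies of the same vendor data.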

Given the wide range of markets for COM-HPC modules, including remote data centers, fog/edge servers, and distant installations, having a flexible range of remote management options is important. The developers also had to take into account that the standard specifies very different maturity levels of IPMI support for modules and carrier boards.

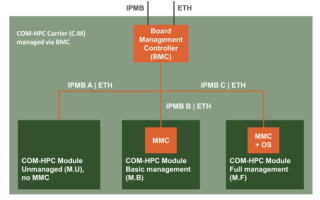

Modules’ IPMI maturity levels range from unmanaged modules (M.U) and basic managed modules (M.B) to fully managed modules (M.F). Carrier board levels range from unmanaged (C.U) to managed carrier boards (C.M). The differences are explained in detail in the specification, but what’s most important to know at this stage is that all those modules and carrier boards remain interoperable.

The COM-HPC IPMI specification allows all types of carrier boards to operate correctly with all types of modules.

Flexible Management and Control of Platforms

The PICMG COM-HPC IPMI subcommittee realized that the various scenarios where basic management features were needed would not be served by a one-size-fits-all solution. So, several module and carrier design combinations are available for tasks such as powering the system on and off or querying the system for network information.

For instance, when working with a single carrier board with up to four modules, it is more efficient for each module to have independent full management capabilities. However, a different scenario could benefit from a fully fledged IPMI implementation on the carrier board, enabling customization for specific functions regardless of whether the module is managed or unmanaged (Figure 3).

Figure 3. Modules and carrier boards can have different maturity levels of IPMI support but will remain interoperable with each other, enabling various system setups – from a single unmanaged carrier with four managed modules, to a managed carrier with unmanaged modules.

There will always be system designers who want no management features, and others who want only minimal ones. So, it was important to prioritize interoperability between all module management tiers. But it was also critical that designers have access to as many resources as possible.

The more access to system resources it is granted, the more powerful IPMI becomes. This relationship between access and power is why the new COM-HPC specification has some specific interfaces that provide the most comprehensive system management functionalities. First among them is the Intelligent Platform Management Bus (IPMB) interface, which allows a carrier board BMC to access the Module Management Controller (MMC).
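The framing of a request on IPMB can be sketched as follows, based on the message layout in the IPMB protocol specification: a two-byte header and the remaining bytes are each protected by a two's-complement checksum. In this Python sketch, 0x20 is the conventional BMC slave address, while the responder address 0x82 used for the MMC is a hypothetical value chosen only for the example. The request shown is the standard Get Device ID command (NetFn App 0x06, command 0x01).

```python
def checksum(data: bytes) -> int:
    """Two's-complement checksum: data plus checksum sums to zero modulo 256."""
    return (-sum(data)) & 0xFF


def ipmb_request(rs_addr: int, netfn: int, cmd: int, data: bytes = b"",
                 rq_addr: int = 0x20, rq_seq: int = 0,
                 rs_lun: int = 0, rq_lun: int = 0) -> bytes:
    """Frame an IPMB request from a requester (default: BMC) to a responder."""
    header = bytes([rs_addr, (netfn << 2) | rs_lun])
    body = bytes([rq_addr, (rq_seq << 2) | rq_lun, cmd]) + data
    return header + bytes([checksum(header)]) + body + bytes([checksum(body)])


MMC_ADDR = 0x82  # hypothetical module management controller address

frame = ipmb_request(MMC_ADDR, netfn=0x06, cmd=0x01)  # Get Device ID
print(frame.hex(" "))  # 82 18 66 20 00 01 df
```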

But the specification is not limited to this bus. One new interface specifically for a carrier board BMC is a dedicated, separate PCI Express lane, which connects to and drives a graphics controller.

Additional interfaces dedicated to IPMI are an I2C interface, USB ports, and the power button controls. Through these dedicated IPMI channels, which are also accessible remotely via the BMC, system administrators can control nearly the entire platform behavior for the best QoS, minimum downtime, and the most efficient remote maintenance.

To give a few examples:

- The I2C interface can be used to access the EEEP data on the module.

- The USB ports can be used to emulate USB devices, such as a keyboard and mouse or a DVD drive.

- The power controls can be used to turn the system on/off remotely.

- The power controls can be used to delay the system boot while the BMC performs additional platform initialization.

The new PICMG COM-HPC sub-specification therefore paves the way for comprehensive IPMI platform management functionality. Engineers can start thinking about hardware design schematics for implementing IPMI. At the same time, module vendors and their partners can work on BMC and MMC implementations, for example using SP-X and/or open standard firmware such as OpenBMC (Figure 4).

Figure 4. The first congatec COM-HPC Client modules available on the market are equipped with 11 variants of Intel Xeon, Core, and Celeron processors (codenamed Tiger Lake U and Tiger Lake H). The congatec starter kit with evaluation carrier board and cooling solution is already available and functionally validated. Customer-specific COM-HPC IPMI implementation variants are supported on demand.

OpenBMC is a Linux distribution for management controllers used in servers, top-of-rack switches, RAID appliances, and other devices. OpenBMC uses Yocto, OpenEmbedded, systemd, and D-Bus for easy customization of platforms. It offers full IPMI 2.0 compliance with DCMI and features host management functions such as power, cooling, LEDs, inventory, events, and watchdog.

OpenBMC also offers a broad choice of interfaces ranging from Remote KVM, SSH-based SOL and web-based user interfaces, to REST and D-Bus-based interfaces. Engineers benefit from hardware simulation as well as automated testing functionalities. Code update support for multiple BMC/BIOS images rounds off the recent feature set.
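As an illustration of what a REST-style management call looks like, the Python sketch below prepares a Redfish reset request using only the standard library. The `ComputerSystem.Reset` action and the `ResetType` value `ForceRestart` come from the Redfish schema; the host name `bmc.example.com` is hypothetical, and the default system identifier `system` is an assumption about how a given BMC names its host. Authentication and TLS verification are deliberately left out, and the request is only constructed here, not sent.

```python
import json
import urllib.request


def redfish_reset_request(bmc_host: str, system_id: str = "system",
                          reset_type: str = "ForceRestart") -> urllib.request.Request:
    """Prepare (but do not send) a Redfish ComputerSystem.Reset POST request."""
    url = (f"https://{bmc_host}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    body = json.dumps({"ResetType": reset_type}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


# bmc.example.com is a placeholder; a real deployment would also add credentials.
request = redfish_reset_request("bmc.example.com")
print(request.get_method(), request.full_url)
```

Sending the prepared request would be a matter of passing it to `urllib.request.urlopen` together with whatever session or credential handling the BMC requires.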

Conclusion

A major benefit for system builders is that, even though the PICMG COM-HPC Computer-on-Modules specification is brand new, it includes proven IPMI and Redfish management technologies upon which to innovate.

This is certain to provide momentum to the acceptance of PICMG’s new COM-HPC Computer-on-Modules specification.