Educating the auto with deep learning for active safety systems

December 14, 2015

The automotive industry is entering the age of artificial intelligence and requires teachers. In this interview with Danny Shapiro, Senior Director of Automotive at NVIDIA, we discuss how deep learning technology is being put to use in the pursuit of fully autonomous vehicles, a goal NVIDIA forecasts is closer than you might think.

What are the biggest challenges facing developers of automotive safety systems today?

SHAPIRO: We’re working with a wide range of automakers, Tier 1s, and other research institutions on ADAS solutions and fully autonomous [driving]. The biggest challenge is being able to react to the randomness of the outside world, to other people’s driving, and to the fact that, in general, no two driving situations are the same.

Our technology is able to handle this type of diverse environment that the car faces. Using deep learning, using sensor fusion, we’re able to build a complete three-dimensional map of everything that’s going on around the car to enable the car to make decisions much better than a human driver could.

The amount of computation that’s required for this is massive. If you look at any of the prototype self-driving cars on the road today, they all have a trunk full of PCs connected to a range of different sensors. What we’re doing is bringing the power of a supercomputer down into an automotive package that can be placed on the side of the trunk compartment, for example. We’ll then be able to take those inputs from cameras, radar, and lidar and build that three-dimensional environment model of everything going on around the vehicle.

It requires a massive amount of computing to be able to interpret that data because generally the sensors are dumb sensors that are just capturing information. That information really needs to be interpreted. For example, a video camera is bringing in 30 frames per second (FPS), and each frame is basically a still picture. That picture is made up of pixels, each of which is a set of color values. There is a massive amount of computation required to be able to take those pixels and figure out, “is that a car?” or “is that a bicyclist?” or “where does the road curve?” It’s that type of computer vision coupled with deep neural network processing that will enable self-driving cars.
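To give a sense of scale for that computation, here is a back-of-envelope calculation for a single camera. The 30 FPS figure comes from the interview; the 1920x1080 resolution and the 3-byte RGB pixel format are assumptions chosen only for illustration.

```python
# Back-of-envelope data rate for one camera stream.
# ASSUMPTIONS (not from the interview): 1920x1080 resolution,
# 3 color channels, 1 byte per channel.
WIDTH, HEIGHT = 1920, 1080
CHANNELS = 3
BYTES_PER_CHANNEL = 1
FPS = 30  # frame rate quoted in the interview

pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS
bytes_per_second = pixels_per_second * CHANNELS * BYTES_PER_CHANNEL
frame_budget_ms = 1000 / FPS  # time available to analyze each frame

print(f"{pixels_per_frame:,} pixels per frame")
print(f"{pixels_per_second:,} pixels per second")
print(f"{bytes_per_second / 1e6:.1f} MB/s of raw color data")
print(f"{frame_budget_ms:.1f} ms to analyze each frame")
```

Under these assumptions, one camera delivers about 62 million pixels per second, and every frame has to be fully analyzed in roughly 33 ms just to keep up, before adding any other cameras or sensors.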

From a sensor fusion perspective, the ability to combine disparate types of information will improve reliability, but that adds extra levels of complexity. The more data that’s captured, for example from radar or lidar, the more processing that is required. There are these very dense point clouds that have to be interpreted, but once you can combine other types of data with the visual data from a camera, that will increase the reliability of a system.
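One simple, well-known way to see why combining sensors improves reliability is inverse-variance weighting of two independent measurements of the same quantity. This is a textbook technique chosen for illustration, not a description of NVIDIA’s fusion pipeline, and the readings and variances below are made up.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    distance_m: float  # estimated distance to an object, in meters
    variance: float    # uncertainty of that estimate, in m^2


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent estimates by inverse-variance weighting.

    The more certain estimate (lower variance) gets more weight, and the
    fused variance is always smaller than either input variance.
    """
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_distance = (w_a * a.distance_m + w_b * b.distance_m) / (w_a + w_b)
    fused_variance = 1.0 / (w_a + w_b)
    return Estimate(fused_distance, fused_variance)


# Hypothetical range readings for the same car ahead.
camera = Estimate(distance_m=42.0, variance=4.0)  # assumed less precise range
radar = Estimate(distance_m=40.0, variance=1.0)   # assumed more precise range

fused = fuse(camera, radar)
print(f"{fused.distance_m:.2f} m, variance {fused.variance:.2f}")
```

The fused estimate (40.40 m, variance 0.80) is more certain than either sensor alone, which is the reliability gain described above; the extra complexity comes from having to model each sensor’s uncertainty.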

Could you define “deep learning” in the context of vehicles?

SHAPIRO: Deep learning is a technique that’s been around for a while, and it’s not unique to automotive. Basically it’s a computer model of how the human brain functions, modeling neurons and synapses and collecting very disparate types of information and connecting them together.

There are really two parts to deep learning. The first is having a framework that you start from and then load with information. It’s very similar to how a child learns – you give them information and they form memories of that information and then build upon it. The first time you’re trying to teach a child about things related to driving, you might give them a fire truck and teach them that it’s a fire truck, and they learn that. Then the next time you give them a tow truck, they’ve learned the word “fire truck,” so they call the tow truck a fire truck. So you correct them and tell them that it’s a tow truck, and they store that piece of information. You just continue this process and expand upon it until eventually they can recognize an ambulance or other types of trucks and cars and things like that. The more information you give them, the more they know. It’s just like learning a language, where the vocabulary is taught over time.
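The “make a mistake, get corrected, remember” loop in this analogy resembles the classic perceptron learning rule, one of the simplest ancestors of today’s neural networks. The sketch below is a toy with made-up features; modern deep networks use many layers trained with gradient-based methods (backpropagation), not this exact rule.

```python
# Toy perceptron: it updates its weights only when it is corrected.
# Hypothetical features: [is_red, has_ladder, has_tow_hook]
# Labels: +1 = "fire truck", -1 = "tow truck"


def predict(weights, bias, features):
    activation = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if activation > 0 else -1


def train(examples, epochs=10, lr=1.0):
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in examples:
            if predict(weights, bias, features) != label:
                # A correction: nudge the weights toward the right answer.
                weights = [w + lr * label * x for w, x in zip(weights, features)]
                bias += lr * label
                mistakes += 1
        if mistakes == 0:  # every example is now classified correctly
            break
    return weights, bias


examples = [
    ([1, 1, 0], 1),   # red, ladder, no hook -> fire truck
    ([0, 0, 1], -1),  # not red, no ladder, hook -> tow truck
    ([1, 0, 1], -1),  # a red tow truck: color alone is not enough
]

weights, bias = train(examples)
for features, label in examples:
    print(features, "predicted", predict(weights, bias, features), "actual", label)
```

Like the child who first calls every truck a fire truck, the model starts out wrong, and each correction changes what it will say next time.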

For deep learning we’re doing this in a supercomputer. So instead of talking about a few different trucks, we’re feeding the system hundreds of thousands of miles of video of these scenes, and the system is then able to analyze the frames of video and, starting at the pixel level, learn to understand what’s a pedestrian, what’s a bicyclist, what’s another vehicle, what’s the road, and what are the street signs. This is a process that can take weeks or months in a supercomputer up in the cloud, but once we’ve developed a neural network that knows how to interpret information coming in from the video cameras, we’re able to deploy it in a car and run it in real time. As the video comes in from the front-facing camera, it’s analyzed at 30 FPS, and we can understand everything that’s in that frame of video. And if we combine cameras from all around the vehicle, then we can start to understand what’s happening and track objects over time. We’re able to monitor all the moving pieces around our vehicle – other cars, pedestrians, as well as fixed objects – to make sure that we’re able to plan a path safely through traffic and around parked cars or other potential obstacles.
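Tracking objects over time means deciding which detection in the current frame corresponds to which object seen in the previous one. The sketch below uses greedy nearest-neighbor association, about the simplest possible approach; production trackers typically add motion models (for example Kalman filters) and optimal assignment. The positions, the 2-meter gating distance, and the function name `update_tracks` are all hypothetical.

```python
import math
from itertools import count


def update_tracks(tracks, detections, new_ids, max_dist=2.0):
    """Greedy nearest-neighbor association of detections to existing tracks.

    tracks: dict of track_id -> (x, y) position from the previous frame
    detections: list of (x, y) positions detected in the current frame
    new_ids: iterator that supplies IDs for newly appearing objects
    A detection farther than max_dist (assumed meters) from every unclaimed
    track starts a new track; tracks with no matching detection are dropped.
    """
    updated = {}
    unclaimed = dict(tracks)
    for det in detections:
        best_id, best_dist = None, max_dist
        for track_id, pos in unclaimed.items():
            d = math.dist(pos, det)
            if d <= best_dist:
                best_id, best_dist = track_id, d
        if best_id is None:
            best_id = next(new_ids)  # no nearby track: a new object
        else:
            del unclaimed[best_id]   # each track matches at most one detection
        updated[best_id] = det
    return updated


frames = [
    [(0.0, 10.0), (3.5, 20.0)],               # frame 1: two objects ahead
    [(0.0, 10.8), (3.5, 19.5)],               # frame 2: both moved slightly
    [(0.1, 11.6), (3.5, 19.0), (-3.5, 5.0)],  # frame 3: a new object appears
]

ids = count(1)
tracks = {}
for i, detections in enumerate(frames, start=1):
    tracks = update_tracks(tracks, detections, ids)
    print(f"frame {i}: {tracks}")
```

Objects 1 and 2 keep their IDs from frame to frame, which is what lets a planner reason about their motion; the object that appears in frame 3 gets a fresh ID.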

For safety reasons, and because we need virtually zero latency, the core system will run standalone in the vehicle. These systems will still need to be connected, because they will communicate: they will relay information back to the cloud as well as receive information from it, potentially weather information or traffic updates. But the concept of deep learning is that the training takes place offline, and the vehicle then gets an over-the-air (OTA) software update with an improved neural network.

As part of this training process, there may be times when a car is driving and detects something that it can’t classify. When that happens, it’s going to record that information and upload it back to the datacenter at the end of the day. So when you have a fleet of these vehicles driving around collecting information, they’re all going to share that with the cloud, a new neural net model will be created, and an update will be sent down. For example, suppose you had trained a car only on a dataset from a downtown area, and when you took this vehicle out into the country, it started to see things that it had never been trained for – maybe deer or moose. The car would record that information and upload it to the datacenter. A data scientist would then add it to the training dataset so that the next software update enables deer detection or moose detection. This will be an iterative process whereby cars continue to get smarter and smarter through software updates.
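How a car decides that it “can’t classify” something is not specified here. A common, simple baseline is to flag any input whose highest softmax probability falls below a threshold; the sketch below assumes that approach. The class list, the 0.6 threshold, the logits, and the function name `triage` are hypothetical, and this baseline is known to be imperfect, since networks can be confidently wrong on unfamiliar inputs.

```python
import math

CLASSES = ["car", "pedestrian", "bicyclist"]  # hypothetical label set, no "deer"
CONFIDENCE_THRESHOLD = 0.6  # assumed cutoff; would need tuning in practice


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def triage(frame_id, logits, upload_queue):
    """Return a class label, or queue the frame for upload if unsure."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < CONFIDENCE_THRESHOLD:
        upload_queue.append(frame_id)  # record it for the datacenter
        return None
    return CLASSES[best]


upload_queue = []
label_1 = triage("frame-001", [4.0, 0.5, 0.2], upload_queue)  # clearly a car
label_2 = triage("frame-002", [1.0, 0.9, 0.8], upload_queue)  # e.g. a deer
print(label_1, label_2, upload_queue)
```

The queued frames are what a data scientist would review and label, so the next model version gains a new class such as deer.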

In vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communications, I think we’re quite a long way off from any kind of uniform data coming in. But when it exists, and we have intersections or traffic signals that convey whether it’s a red light or a green light, that will just be an additional layer of data that the autonomous vehicle will be able to leverage.

What’s going to help bring these high levels of computation down to price points and form factors suitable for mainstream vehicles? Moore’s law, or is it something else?

SHAPIRO: There are two different things coming into play here that are going to take that trunk full of computers and bring it down to a very small, compact, efficient form factor. It’s not really about Moore’s law, because Moore’s law is really slowing down. The way we’re compensating for that slowdown is by increasing the amount of parallel processing that takes place. So a GPU, with its massively parallel capabilities, will enable all of this data to be processed in real time. In addition, having an open platform that can be configured depending on the needs of the automaker will be key. That’s what we’ve done with our Drive PX solution, a highly parallel, very-high-performance supercomputer in a small, energy-efficient form factor that can run inside the vehicle. It’s been designed both as a development platform and as a system that can go into production. The combination of that hardware platform with a very robust development environment enables automakers to customize as they see fit. Each automaker has their own R&D efforts going on, their own teams that are working on different ways to solve these problems. The Drive PX platform is a solution that they can build upon. It’s not just a turnkey box in the car, but rather a solution automakers and suppliers can customize and use as a tool to differentiate their brand.

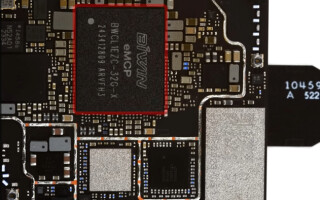

Drive PX features two of our Tegra X1 processors. Each of those is a 1 teraFLOPS processor, so 1 trillion floating-point operations per second. If we go back to the year 2000, the United States’ fastest supercomputer was a 1 teraFLOPS machine that took up 1400 square feet and consumed 500,000 W of power. That was a massive supercomputer in the year 2000; now that performance is on a chip the size of your thumbnail. We’re able to deliver a massive amount of computational horsepower to run the algorithms that analyze those frames of video, but also to fuse the data together – those radar or lidar point clouds coming in – to build a very accurate, detailed map. We can also fuse other data. If we have a high-definition map from a third party, we can better understand where we are on that map, which is really critical for navigation, for staying in a lane, and for knowing which lane we need to be in. Also, when V2V communication is out there, we’ll be able to take that data and fuse it as well – it just becomes another source of information that will help us better understand and anticipate what else is happening around our vehicle.
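The figures quoted above can be turned into a rough per-frame compute budget. The two chips, 1 teraFLOPS each, 30 FPS, and the year-2000 machine’s 500,000 W come from the interview; the 50% utilization factor is an assumption, since real workloads rarely sustain a processor’s peak rate, and the budget is shown as if a single camera stream had the whole platform to itself.

```python
# Peak compute quoted in the interview: two Tegra X1 processors,
# 1 teraFLOPS each.
TFLOPS_PER_CHIP = 1.0
CHIPS = 2
FPS = 30  # camera frame rate quoted in the interview

peak_flops = TFLOPS_PER_CHIP * CHIPS * 1e12
flops_per_frame = peak_flops / FPS  # if one camera stream used everything

# ASSUMPTION: 50% sustained utilization is illustrative, not measured.
UTILIZATION = 0.5
usable_per_frame = flops_per_frame * UTILIZATION

# The year-2000 supercomputer from the interview: 1 teraFLOPS at 500,000 W.
flops_per_watt_2000 = 1e12 / 500_000

print(f"{flops_per_frame / 1e9:.1f} GFLOP per frame at peak")
print(f"{usable_per_frame / 1e9:.1f} GFLOP per frame at assumed utilization")
print(f"{flops_per_watt_2000 / 1e6:.0f} MFLOPS per watt in 2000")
```

In practice that budget is shared among several cameras, radar and lidar processing, and fusion, which is why the parallel throughput of the platform matters.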

Until we have fully autonomous vehicles, how is all of this information going to be displayed to drivers in a way that is digestible, useful, but still safe?

SHAPIRO: The key is to have a very tight connection between the autonomous car and the driver, and to give the driver confidence that the car is sensing what it needs to sense. So the in-vehicle displays will need to manage the handoff from the driver to the car and then back to the driver, but also showcase what the car is seeing and what the car’s intent is – we don’t want the car to do something that surprises the driver. Those kinds of systems are being worked on today, and they use both graphical and auditory ways of communicating.

Also, there will be opportunities to give the driver other information if they’re in autonomous mode, whether they’re reading their emails or on a conference call, doing a WebEx or video chat. Those systems will start to evolve, and we’ve seen this happening in some prototype concept cars, such as those from Audi and Volvo. The key thing is that these systems are part of the vehicle. If there is a situation where the driver needs to be alerted, that can be done through the in-vehicle system, which isn’t possible if somebody is busy looking at a tablet or a smartphone that has no connection to the vehicle. When that other entertainment or work experience is managed through the vehicle, it will be much safer.
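The handoff described in this answer can be pictured as a small state machine in which a takeover request is always routed through the car’s own display and speakers. The sketch below is a hypothetical illustration: the mode names, event names, and `step` function are invented here, and a real system would also need to handle a driver who does not respond.

```python
from enum import Enum, auto


class Mode(Enum):
    MANUAL = auto()
    AUTONOMOUS = auto()
    TAKEOVER_REQUESTED = auto()  # car has asked the driver to take control


# Hypothetical allowed transitions: (current mode, event) -> next mode.
TRANSITIONS = {
    (Mode.MANUAL, "engage"): Mode.AUTONOMOUS,
    (Mode.AUTONOMOUS, "driver_override"): Mode.MANUAL,
    (Mode.AUTONOMOUS, "system_limit"): Mode.TAKEOVER_REQUESTED,
    (Mode.TAKEOVER_REQUESTED, "driver_confirms"): Mode.MANUAL,
}


def step(mode, event, alert):
    """Apply one event; events not valid in the current mode are ignored."""
    next_mode = TRANSITIONS.get((mode, event), mode)
    if next_mode is Mode.TAKEOVER_REQUESTED:
        # Sent through the in-vehicle display and speakers, so it reaches
        # the driver even while they are using in-car apps.
        alert("Please take control", visual=True, audible=True)
    return next_mode


alerts = []


def record_alert(message, visual, audible):
    alerts.append((message, visual, audible))


mode = Mode.MANUAL
for event in ["engage", "system_limit", "driver_confirms"]:
    mode = step(mode, event, record_alert)
    print(event, "->", mode.name)
```

Because the alert path belongs to the vehicle rather than to a separate device, the car can always interrupt whatever the driver is doing when it needs them back.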

What do you see coming in the next five to ten years in the evolution of active safety systems?

SHAPIRO: We’re well underway toward developing autonomous cars. We have prototypes on the road already, and there is a very active automotive community developing on Drive PX. We have more than 50 different automakers, Tier 1s, and other research institutions using the platform, so we see autonomous driving arriving sooner than many people expect. We’re still a couple of years away from commercial deployment, but the first phase of our work is coming to market in 2017 with Audi and their Traffic Jam Assist system based on our Tegra platform, and beyond that, numerous automakers are going to be bringing our technology to market.

Longer term, we’re going to see widespread adoption of autonomous cars, new business models, and new ownership models. We’ll see how that plays out over time, but we have traditional automakers and non-traditional companies getting into the transportation space that will leverage autonomous vehicle technology.

NVIDIA