Why Requirements Traceability Still Matters for Today’s Embedded Systems

February 10, 2023


As the saying goes, the cost of “failing to build the right product or to build the product right” impacts revenue and reputation. The only way to build the “right product” is to develop requirements that are both effective and traceable down to the software. This enables development teams, quality assurance (QA), and certifying authorities to examine any function in the software to determine its purpose by tracing it back to a requirement.

The challenge lies in maintaining requirements traceability in the face of rapidly changing software, driven by today’s dynamic market conditions and shorter release timelines. Understanding bidirectional traceability, and knowing how to maintain it, ensures that every required feature is implemented and, conversely, that nothing is built without a reason.

The Cost of Failure

There is an inevitable loss of sales if customers perceive the product’s functionality as compromised, and potentially catastrophic revenue or reputational losses if there is a recall or security breach. For example, in February 2022 Tesla recalled cars because of a software error related to windscreen defrosting[1]. This recall, one of several Tesla has issued (many associated with autonomous driving), exemplifies the complexity of managing all possible scenarios and the need to rapidly identify, fix, and update software to minimize costs and reputational damage.

“Challenges in Requirements Management,” an article by Deepanshu Natani on the Atlassian community, reads[2]:

“Analysts report that as many as 71 percent of software projects that fail do so because of poor requirements management, making it the single biggest reason for project failure – bigger than bad technology, missed deadlines, or change management fiascoes.”

Start by Writing Good Requirements

The foundation of every software project is its requirements. They should be precise and unambiguous, leading software development in a direction that is clear, testable, and traceable back to a specific purpose.

There are several approaches to writing requirements, with textual specifications being the most popular. Text can be highly effective, ranging from layman’s language for broader understanding to technical jargon that is closer to the specific implementation plans.

As human language is inherently imprecise and prone to ambiguity, a high degree of rigor is necessary to overcome the likely pitfalls. Applying proven rules to requirements helps avoid issues down the line – the MISRA coding standard offers an example of these rules[3]:

- Use paragraph formatting to distinguish requirements from non-requirement text

- List only one requirement per paragraph

- Use the verb “shall”

- Avoid “and” in a requirement; consider refactoring it into multiple requirements

- Avoid conditions like “unless” or “only if” as they may lead to ambiguous interpretation
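The rules above lend themselves to simple automated checks. The sketch below is a hypothetical lint pass written for illustration, not a tool defined by MISRA: the `lint_requirement` function, its keyword heuristics, and the sample requirements are all assumptions, and a text check like this can only flag candidates for human review.

```python
import re

# Hypothetical heuristics based on the rules listed above (not an official MISRA check).
AMBIGUOUS_CONDITIONS = ("unless", "only if")


def lint_requirement(text: str) -> list[str]:
    """Return a list of warnings for one requirement paragraph."""
    warnings = []
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)

    if "shall" not in words:
        warnings.append("does not use 'shall'")
    if words.count("shall") > 1:
        warnings.append("more than one 'shall': may hold several requirements")
    if "and" in words:
        warnings.append("contains 'and': consider splitting the requirement")
    for phrase in AMBIGUOUS_CONDITIONS:
        if re.search(rf"\b{phrase}\b", lowered):
            warnings.append(f"contains '{phrase}': condition may be ambiguous")
    return warnings


samples = [
    "The heater shall activate within 2 s of the defrost button being pressed.",
    "The heater shall activate and the fan shall start unless the cabin is warm.",
]
for req in samples:
    print(req)
    for warning in lint_requirement(req) or ["ok"]:
        print("  -", warning)
```

The first sample passes; the second is flagged three times (two “shall”s, an “and”, and an “unless”), suggesting it should be split into separate, unconditional requirements.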

Figure 1 lists ten attributes of effective requirements.


[Figure 1. Ten attributes of effective requirements. (Source: LDRA)]

Ensure Proper Requirements Traceability

Every requirement must be implemented. Conversely, all source code (and all tests) should be traceable back to the design and, ultimately, to a requirement (either functional or non-functional).
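Both directions of this rule can be checked mechanically. As a minimal sketch, assume each code unit carries tags naming the requirements it implements (the tagging scheme, `check_bidirectional`, and the unit names are hypothetical). The forward check finds requirements with no implementation; the backward check finds code that does not trace to any current requirement.

```python
def check_bidirectional(requirements: set[str],
                        implements: dict[str, set[str]]) -> tuple[set[str], set[str]]:
    """Return (unimplemented requirements, orphan code units).

    requirements: IDs of all current requirements.
    implements:   code unit name -> requirement IDs it claims to implement.
    """
    traced = set().union(*implements.values())
    # Forward direction: every requirement should be implemented somewhere.
    unimplemented = requirements - traced
    # Backward direction: every code unit should trace to at least one current
    # requirement; a tag naming a deleted requirement does not count.
    orphans = {unit for unit, reqs in implements.items() if not (reqs & requirements)}
    return unimplemented, orphans


requirements = {"LLR_103", "LLR_104", "LLR_105"}
code_units = {
    "defrost_timer_start": {"LLR_104"},
    "defrost_timer_stop": {"LLR_105"},
    "debug_dump": set(),           # no requirement at all
    "legacy_heater": {"LLR_099"},  # traces only to a deleted requirement
}
missing, orphans = check_bidirectional(requirements, code_units)
print(sorted(missing))  # ['LLR_103']
print(sorted(orphans))  # ['debug_dump', 'legacy_heater']
```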

The challenge comes when requirements start changing. To manage the impact of a change quickly and effectively, teams must modify the relevant requirements or add new ones, and understand how the change should be reflected in the code and which tests are needed to verify the update.

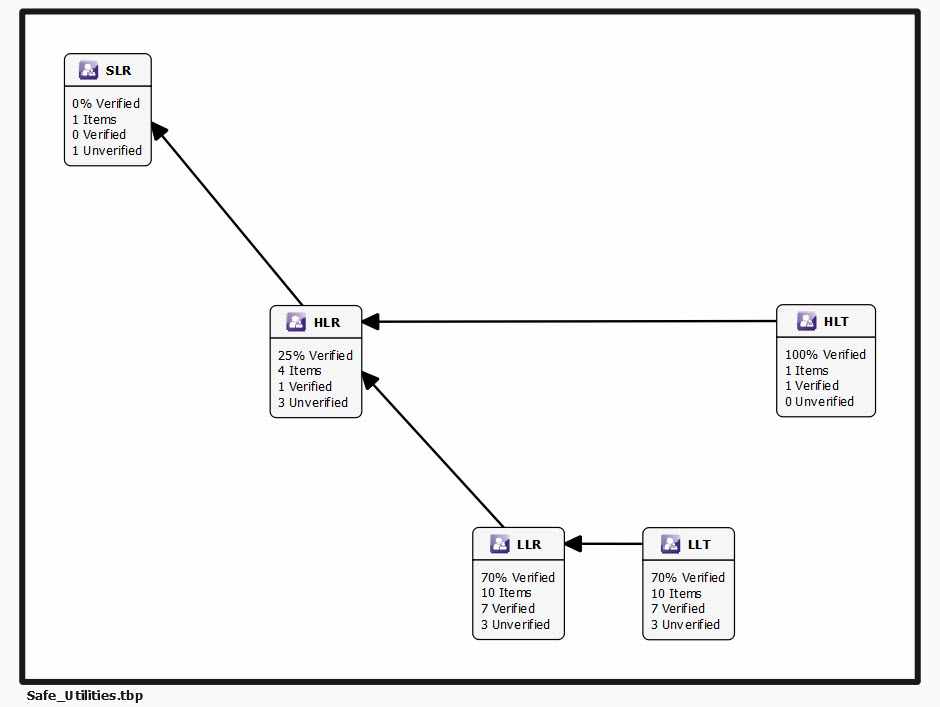

Figure 2 illustrates a typical relationship between requirements and tests[4]. Here, system-level requirements (SLR) should be traceable to high-level requirements (HLR), which in turn are traceable to low-level requirements (LLR). The HLRs are traceable to high-level tests (HLT), and the LLRs are traceable to low-level tests (LLT).

This bidirectional traceability gives teams visibility from the requirements specifications through implementation, testing, and change, and back again (Figure 2).

[Figure 2. A typical traceability structure between different types of requirements. (Source: LDRA)]
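The structure in Figure 2 can be expressed as a small set of allowed trace links and checked automatically. This is a sketch under stated assumptions: item kinds are inferred from a hypothetical ID convention (`HLR_100`, `TCI_LLT_104`), `SLR_1` is an invented identifier, and a real tool would store item types explicitly rather than parse them from names.

```python
from collections import defaultdict

# Allowed (parent kind, child kind) trace links, following the structure in Figure 2.
ALLOWED_LINKS = {("SLR", "HLR"), ("HLR", "LLR"), ("HLR", "HLT"), ("LLR", "LLT")}


def kind(item_id: str) -> str:
    """Infer an item's kind from a hypothetical ID convention:
    'HLR_100' -> 'HLR', and test IDs such as 'TCI_LLT_104' -> 'LLT'."""
    parts = item_id.split("_")
    return parts[1] if parts[0] == "TCI" else parts[0]


def validate(items: set[str], links: list[tuple[str, str]]) -> list[str]:
    problems = []
    parent_kinds = defaultdict(set)  # item -> kinds of items that trace down to it
    child_kinds = defaultdict(set)   # item -> kinds of items it traces down to
    for parent, child in links:
        if (kind(parent), kind(child)) not in ALLOWED_LINKS:
            problems.append(f"unexpected link {parent} -> {child}")
        parent_kinds[child].add(kind(parent))
        child_kinds[parent].add(kind(child))

    for item in sorted(items):
        k = kind(item)
        if k == "HLR" and "SLR" not in parent_kinds[item]:
            problems.append(f"{item} does not trace up to an SLR")
        if k == "HLR" and "HLT" not in child_kinds[item]:
            problems.append(f"{item} has no high-level test")
        if k == "LLR" and "HLR" not in parent_kinds[item]:
            problems.append(f"{item} does not trace up to an HLR")
        if k == "LLR" and "LLT" not in child_kinds[item]:
            problems.append(f"{item} has no low-level test")
    return problems


items = {"SLR_1", "HLR_100", "LLR_104", "LLR_105", "TCI_HLT_100", "TCI_LLT_104"}
links = [
    ("SLR_1", "HLR_100"), ("HLR_100", "LLR_104"), ("HLR_100", "LLR_105"),
    ("HLR_100", "TCI_HLT_100"), ("LLR_104", "TCI_LLT_104"),
]
for problem in validate(items, links):
    print(problem)  # LLR_105 has no low-level test
```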

To manage changes without losing traceability, the links between requirements and tests must be maintained automatically. Automation also makes it simple to understand the upstream and downstream impacts of changes to tests and requirements.
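Impact analysis of this kind amounts to walking the trace graph in both directions from the changed item. The sketch below assumes trace links are stored as (parent, child) pairs; the IDs follow the naming used in this article, the code unit `defrost.c:start_timer` is hypothetical, and the functions `impact` and `reachable` are illustrative rather than any tool’s API.

```python
from collections import defaultdict, deque


def build_index(links: list[tuple[str, str]]):
    """Index (parent, child) trace links in both directions."""
    down, up = defaultdict(set), defaultdict(set)
    for parent, child in links:
        down[parent].add(child)
        up[child].add(parent)
    return down, up


def reachable(start: str, graph: dict[str, set[str]]) -> set[str]:
    """Breadth-first search: every item reachable from start, excluding start."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt != start and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def impact(changed: str, links: list[tuple[str, str]]) -> dict[str, set[str]]:
    down, up = build_index(links)
    return {
        "upstream": reachable(changed, up),      # higher-level requirements that may be affected
        "downstream": reachable(changed, down),  # lower-level items and tests to revisit
    }


links = [
    ("SLR_1", "HLR_100"), ("HLR_100", "LLR_104"), ("HLR_100", "TCI_HLT_100"),
    ("LLR_104", "TCI_LLT_104"), ("LLR_104", "defrost.c:start_timer"),  # hypothetical code unit
]
result = impact("LLR_104", links)
print(sorted(result["upstream"]))    # ['HLR_100', 'SLR_1']
print(sorted(result["downstream"]))  # ['TCI_LLT_104', 'defrost.c:start_timer']
```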

Verify Requirements Fulfillment

Demonstrating that the system fulfills its requirements helps quantify whether the “right system was built.” There are two flavors of this:

- Using unit tests to show that application components meet their respective purposes in isolation

- Using integration tests to show that parts of the application work together as a whole

Automated tools help here by linking unit and integration tests to the appropriate requirements and reporting on fulfillment without time-consuming manual effort. Figure 3 illustrates two scenarios within a requirements management platform[5]:

- HLR_100 – The green dot indicates that the requirement is fulfilled, reflecting the fact that the associated high-level test (TCI_HLT_100) and low-level requirements (LLR_104 to LLR_109) are verified.

- HLR_101 – The red dot indicates that the requirement is unfulfilled, reflecting the fact that low-level requirement LLR_103 is unfulfilled due to the failure of low-level test TCI_LLT_103.

[Figure 3. Reporting on requirements fulfillment using an automated tool. (Source: LDRA)]

In the latter scenario, the failed test has an associated test case file that can be rerun as a regression test using a unit testing tool, along with a regression report that helps explain why the test failed.
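The green/red roll-up in Figure 3 can be modeled as a recursive rule: a requirement is fulfilled when all of its linked tests pass and all of its child requirements are fulfilled. In this minimal sketch the links follow the two scenarios described above, but the `Requirement` class, the individual low-level test names for LLR_104 to LLR_109, and the test outcomes are assumptions for illustration; HLR_101 is modeled with LLR_103 as its only child.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    tests: dict[str, bool] = field(default_factory=dict)  # test ID -> passed?
    children: list["Requirement"] = field(default_factory=list)

    def fulfilled(self) -> bool:
        """Green only if every linked test passes and every child requirement is green."""
        if not self.tests and not self.children:
            return False  # nothing verifies this requirement yet
        return (all(self.tests.values())
                and all(child.fulfilled() for child in self.children))

    def failing_tests(self) -> list[str]:
        """Failed tests anywhere below this requirement: candidates for regression runs."""
        failed = [test for test, passed in self.tests.items() if not passed]
        for child in self.children:
            failed += child.failing_tests()
        return failed


hlr_100 = Requirement(
    "HLR_100",
    tests={"TCI_HLT_100": True},
    children=[Requirement(f"LLR_{n}", tests={f"TCI_LLT_{n}": True})
              for n in range(104, 110)],  # LLR_104 to LLR_109
)
hlr_101 = Requirement(
    "HLR_101",
    children=[Requirement("LLR_103", tests={"TCI_LLT_103": False})],
)
for req in (hlr_100, hlr_101):
    print(req.req_id, "green" if req.fulfilled() else "red", req.failing_tests())
```

Treating a requirement with no tests and no children as unfulfilled avoids the trap of `all()` returning `True` for an empty collection, which would otherwise show an unverified requirement as green.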

Determine Structural Coverage

Structural coverage is an important concept in embedded software because it provides assurance that the whole code base has been exercised to a consistent and adequate extent. As a key guideline in standards such as ISO 26262, DO-178B, and IEC 62304, structural coverage helps developers detect and remove dead code, while QA uses it to identify missing test cases. In both scenarios, tracing this coverage back to requirements helps ensure there is a reason for every implemented component and helps identify requirements that have not been implemented.
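One way to picture this is to record which functions each requirements-based test exercises and then ask which functions no requirement accounts for. The sketch below works at function granularity for brevity (coverage tools work at the statement, branch, or condition level), and the function names, coverage data, and `trace_coverage` helper are hypothetical.

```python
def trace_coverage(functions: set[str],
                   covered_by_test: dict[str, set[str]],
                   test_to_reqs: dict[str, set[str]]) -> tuple[dict[str, set[str]], list[str]]:
    """Map each function to the requirements whose tests exercise it.

    Returns (justification map, functions no requirement accounts for).
    """
    justified: dict[str, set[str]] = {f: set() for f in functions}
    for test, exercised in covered_by_test.items():
        for f in exercised & functions:
            justified[f] |= test_to_reqs.get(test, set())
    # Unjustified code is either dead code to remove or a sign of a missing
    # requirement or test case; deciding which needs human review.
    unjustified = sorted(f for f, reqs in justified.items() if not reqs)
    return justified, unjustified


functions = {"start_timer", "stop_timer", "self_test", "old_heater_mode"}
coverage = {
    "TCI_LLT_104": {"start_timer"},
    "TCI_LLT_105": {"start_timer", "stop_timer"},
}
test_links = {"TCI_LLT_104": {"LLR_104"}, "TCI_LLT_105": {"LLR_105"}}

justified, unjustified = trace_coverage(functions, coverage, test_links)
print(unjustified)  # ['old_heater_mode', 'self_test']
```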

Automation helps determine structural coverage, showing which parts of the code are exercised as requirements-based tests are completed[6]. Figure 4 illustrates a test verification report showing the results of different types of coverage metrics:

[Figure 4. Reporting on structural coverage using an automated tool. (Source: LDRA)]

- Statement – The number of statements exercised in the execution of an application, as a percentage of the total number of statements in that application. 100 percent coverage implies that all statements have been executed at least once during the test.

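- Branch/Decision – The number of branches exercised in the execution of an application, as a percentage of the total number of branches in that application. 100 percent coverage implies that every branch (each outcome of every decision) has been taken at least once during the test.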

- MC/DC – Modified Condition/Decision Coverage measures whether every condition within each decision has evaluated to all possible outcomes at least once, and whether each condition has been shown to independently affect the decision’s outcome.
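To make the difference between decision coverage and MC/DC concrete, the sketch below evaluates the hypothetical decision `a and (b or c)`. It checks the unique-cause form of MC/DC, in which each condition must be paired with a test that differs only in that condition and flips the outcome; some standards and tools also accept a relaxed “masking” form, which this sketch does not model.

```python
from itertools import combinations

N_CONDITIONS = 3


def decision(a: bool, b: bool, c: bool) -> bool:
    """Hypothetical decision with three conditions."""
    return a and (b or c)


def decision_coverage(tests: list[tuple[bool, bool, bool]]) -> float:
    """Percentage of the decision's two outcomes (True/False) that the tests produce."""
    outcomes = {decision(*t) for t in tests}
    return 100.0 * len(outcomes) / 2


def mcdc_pairs(tests: list[tuple[bool, bool, bool]]) -> dict[int, tuple]:
    """For each condition index, find a pair of tests that differ only in that
    condition and produce different decision outcomes (unique-cause MC/DC)."""
    pairs = {}
    for t1, t2 in combinations(tests, 2):
        differing = [i for i in range(N_CONDITIONS) if t1[i] != t2[i]]
        if len(differing) == 1 and decision(*t1) != decision(*t2):
            pairs.setdefault(differing[0], (t1, t2))
    return pairs


full = [(True, True, False), (False, True, False), (True, False, False), (True, False, True)]
weak = [(True, True, False), (False, True, False)]
for name, tests in (("full", full), ("weak", weak)):
    print(f"{name}: decision {decision_coverage(tests):.0f}%, "
          f"MC/DC {len(mcdc_pairs(tests))}/{N_CONDITIONS} conditions")
```

The `weak` test set reaches 100 percent decision coverage yet demonstrates the independent effect of condition `a` only, which is why MC/DC typically needs at least n + 1 tests for n conditions.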

Figure 5 shows a mapping between the requirements-based tests and their associated low-level requirements, forming a traceability matrix. In this example, all requirements (LLR_*) have been verified using a test (TCI_LLT_*). This type of report is only possible through the application of the traceability principles discussed here.

[Figure 5. A traceability matrix showing verification of requirements as mapped to test cases. (Source: LDRA)]
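A matrix like the one in Figure 5 can be generated directly from stored trace links. The minimal sketch below renders requirement-to-test links as a text grid in which unverified requirements show up as empty rows; the link data and function names are illustrative assumptions, not the output of any particular tool.

```python
def traceability_matrix(requirements: set[str], links: list[tuple[str, str]]) -> str:
    """Render (requirement, test) links as a text grid; "X" marks a link."""
    reqs = sorted(requirements | {r for r, _ in links})
    tests = sorted({t for _, t in links})
    linked = set(links)
    width = max((len(r) for r in reqs), default=0)
    rows = [" " * width + " | " + " | ".join(tests)]
    for r in reqs:
        cells = [("X" if (r, t) in linked else "").center(len(t)) for t in tests]
        rows.append(r.ljust(width) + " | " + " | ".join(cells))
    return "\n".join(rows)


def unverified(requirements: set[str], links: list[tuple[str, str]]) -> set[str]:
    """Requirements that no test verifies (they appear as empty rows)."""
    return requirements - {r for r, _ in links}


requirements = {"LLR_104", "LLR_105", "LLR_106", "LLR_107"}
links = [("LLR_104", "TCI_LLT_104"), ("LLR_105", "TCI_LLT_105"), ("LLR_106", "TCI_LLT_106")]
print(traceability_matrix(requirements, links))
print(unverified(requirements, links))  # {'LLR_107'}
```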

Traceability Ensures the “Right” Product is Built

Teams must never relegate system and software requirements to shelf-ware. As products grow more complex and software updates increase in frequency, requirements traceability remains relevant and necessary to minimize risks.

Knowing how and where a requirement is implemented and tested ensures software is fit for purpose. Maintaining this awareness across software changes means developers know the impact on code and tests without spending time searching for it.

Automation is the only realistic method to dynamically maintain bidirectional requirements traceability and ensure the product is “built right.” Without it, teams would spend too much time trying to figure it out manually, leading to increased costs and potential issues downstream.

Mark Pitchford is a technical specialist at LDRA Software Technology and has over 30 years’ experience in software development for engineering applications. Since 2001, he has helped development teams looking to achieve compliant software development in safety- and security-critical environments, working with standards such as DO-178, IEC 61508, ISO 26262, IIRA and RAMI 4.0. Mark earned his Bachelor of Science degree at Nottingham Trent University.


References:

- [1] Korzeniewski, J. (2022, February 9). Tesla Recalls 27,000 Cars Over Windshield Defrosting Problem. Autoblog. Retrieved February 9, 2023, from https://www.autoblog.com/2022/02/09/tesla-recall-heat-defrost-software-update

- [2] Natani, D. (2020, September 27). Challenges in Requirements Management. Atlassian Community. Retrieved February 9, 2023, from https://community.atlassian.com/t5/Jira-articles/Challenges-in-Requirements-Management/ba-p/1474790

- [3] MISRA. (n.d.). MISRA.org. Retrieved February 9, 2023, from https://www.misra.org.uk

- [4] LDRA. (2022, April 19). Requirements Traceability. Retrieved February 9, 2023, from https://ldra.com/capabilities/requirements-traceability

- [5] LDRA. (2022, February 9). TBmanager. Retrieved February 9, 2023, from https://ldra.com/products/tbmanager

- [6] LDRA. (2022, November 17). Structural (Code) Coverage Analysis in Embedded Systems. Retrieved February 9, 2023, from https://ldra.com/capabilities/code-coverage-analysis