When Standards Mature: Balancing Performance & Demand in the High-Energy Physics Community

September 03, 2021

The high-energy physics community leverages PICMG’s MicroTCA.4 hardware standard for timing and synchronization, data acquisition, and control at particle accelerators like DESY. But as experiments at these research centers advance, the performance of supporting systems must evolve. How can standards like MicroTCA support the demands of bleeding-edge use cases and remain applicable to broader markets?

The DESY research center in Hamburg is home to circular accelerators like PETRA and the world’s longest linear particle accelerator, the 2.1-mile XFEL. These machines support physics experiments that study quantum particles, film chemical reactions, and map the structure of viruses such as SARS-CoV-2, which causes COVID-19, among many other applications.

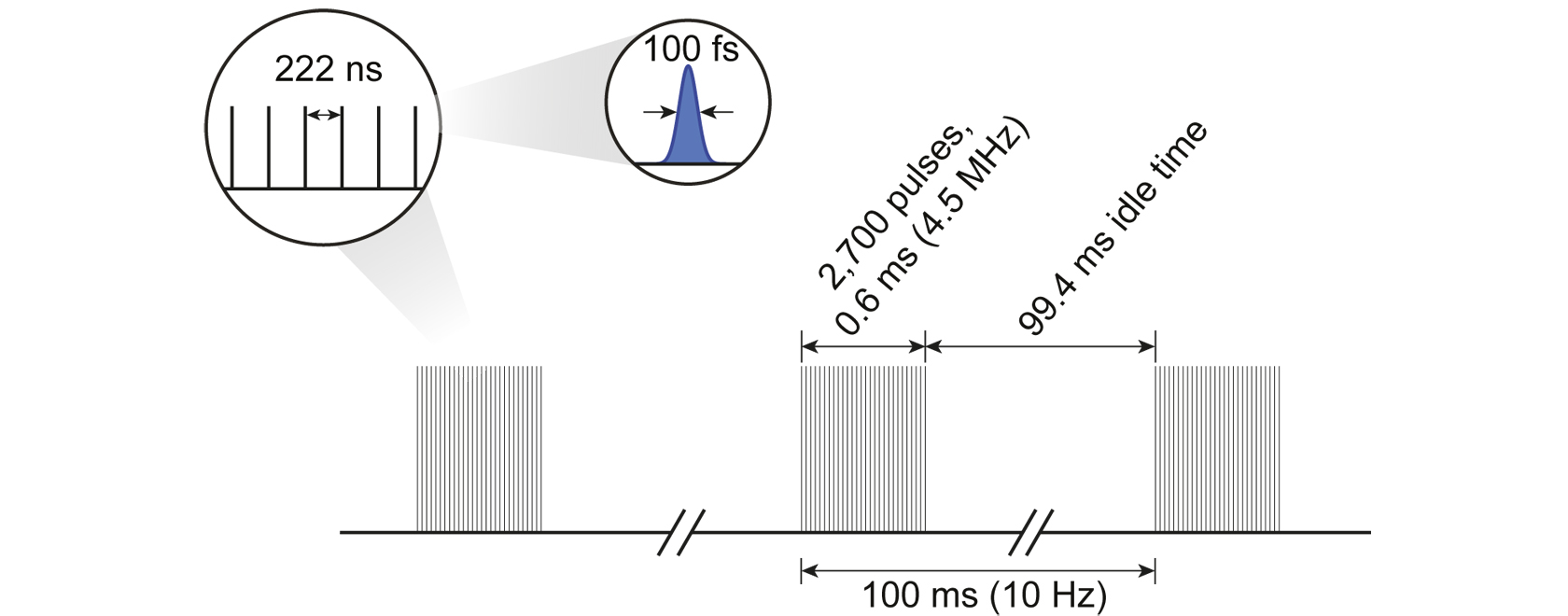

To say the electronics that support these accelerators are sophisticated is an understatement. For example, 10 times every second, the XFEL generates a train of 2,700 X-ray pulses at a repetition rate of 4.5 MHz (Figure 1). That means the laser emits 27,000 pulses every second, with a brilliance that is multiple orders of magnitude greater than that of conventional X-ray sources. Imaging detectors capture 10 GBps of data from these bursts in support of experiments that use X-ray wavelengths as short as 0.05 nm to resolve atomic-scale structures and that require femtosecond time resolution.

[Figure 1. The XFEL X-ray laser generates 2,700 X-ray pulses at a 4.5 MHz repetition rate 10 times per second.]
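The pulse figures above are easy to sanity-check. The short Python sketch below uses only the three numbers given in the text (2,700 pulses per train, a 4.5 MHz repetition rate within a train, and 10 trains per second) and derives the pulse spacing, train length, and duty cycle. The function and constant names are illustrative and are not taken from any DESY software.

```python
"""Worked arithmetic for the XFEL pulse structure described in the article."""

PULSES_PER_TRAIN = 2_700      # X-ray pulses in each train (from the article)
INTRA_TRAIN_RATE_HZ = 4.5e6   # repetition rate within a train (from the article)
TRAINS_PER_SECOND = 10        # trains per second (from the article)


def pulse_structure(pulses_per_train, rate_hz, trains_per_s):
    """Derive timing figures for a burst-mode pulse pattern."""
    pulses_per_s = pulses_per_train * trains_per_s
    pulse_spacing_ns = 1e9 / rate_hz                          # gap between pulses in a train
    train_length_us = pulses_per_train / rate_hz * 1e6        # duration of one train
    train_period_ms = 1e3 / trains_per_s                      # start-to-start time between trains
    duty_cycle = (train_length_us * 1e-3) / train_period_ms   # fraction of time spent inside a train
    return {
        "pulses_per_s": pulses_per_s,
        "pulse_spacing_ns": pulse_spacing_ns,
        "train_length_us": train_length_us,
        "train_period_ms": train_period_ms,
        "duty_cycle": duty_cycle,
    }


if __name__ == "__main__":
    results = pulse_structure(PULSES_PER_TRAIN, INTRA_TRAIN_RATE_HZ, TRAINS_PER_SECOND)
    for name, value in results.items():
        print(f"{name}: {value:g}")
```

By this arithmetic, each train lasts about 600 µs and repeats every 100 ms, with pulses roughly 222 ns apart: the electronics see short, intense bursts separated by long idle gaps, a duty cycle of about 0.6%.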

The control system that manages XFEL, Karabo, was developed in-house by DESY engineers. But the fast electronics behind Karabo’s sensitive timing, measurement, and front-end data acquisition platforms are built on an open industry standard: MicroTCA.4 (µTCA.4).

“XFEL is completely controlled by µTCA – all the fast electronics,” said Kay Rehlich, the engineer responsible for standardizing DESY’s accelerator control systems around µTCA. “There are a lot of other systems involved for slower stuff, but the fast dynamic beam things like high-precision timing, machine protection, diagnostics, and RF — all of this is done in µTCA.”

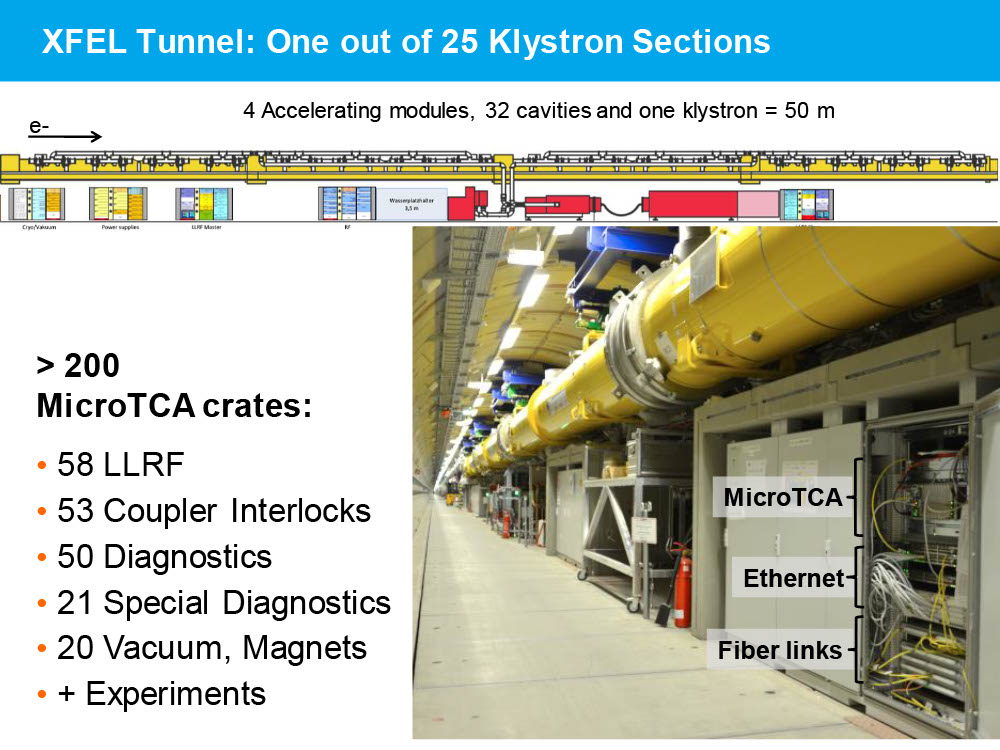

There are more than 35 µTCA.4 systems distributed along the XFEL’s miles-long expanse (Figure 2). µTCA was originally designed as a telecom-grade solution, and its platforms operate 24/7 for periods of a year or more thanks to integrated reliability features such as remote monitoring and control, automatic failure detection, and redundant cooling and power supplies.

[Figure 2. More than 35 MicroTCA.4 systems are distributed along the XFEL accelerator’s 2.1 miles to perform synchronization, diagnostics, and other control tasks.]

The µTCA chassis accept plug-in cards called Advanced Mezzanine Cards (AdvancedMCs, or AMCs) that support a range of modular functionality. At DESY, the AMCs host FPGAs that digitize, synchronize, and process data. The data is then sent over high-speed PCIe and Ethernet interconnects across the backplane and out of the system to control servers and other equipment.

For the more specific needs of high-energy physics applications, the MicroTCA.4 extension also defines a dedicated clock and trigger topology. In the XFEL timing system, it provides reference frequencies to align sampling rates and deterministically distributes the X-ray laser’s pulse pattern information.

Rear-transition modules (RTMs), which are not part of the µTCA base specification, plug into the back of the chassis opposite the AMCs to support the additional I/O requirements of physics applications (Figure 3).

[Figure 3. The MicroTCA.4 extension specification adds physics-centric features like rear-transition modules (RTMs) that support additional I/O.]

MicroTCA, Meet Modern Requirements

Despite the system’s sophistication, time marches on: the XFEL was designed and implemented more than 10 years ago, and that includes the imaging detectors that record its X-ray pulses.

“The systems driving the most data are the large 2D image detectors, which basically take X-ray images,” says Dr. Patrick Gessler, Head of the Electronic and Electrical Engineering Group at the European XFEL, who works on the accelerators there. “The detectors can only take between 350 and 800 images in one go, but they are still producing around 10 GBps of data.

“Already we cannot visualize hundreds of images that are generated in the 10 hertz cycles,” Gessler explains. “Now new 3D technologies are coming into reach. This means naturally there will be more and more data generated we have to cope with.

“There are also different kinds of detectors, called 0D detectors, which are just ADCs or digitizers with multiple channels,” he continues. “Right now, we have digitizer systems going up to 10 GSps, but the experimenters would like to go even beyond this and also have higher vertical resolution on many, many channels.”
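Gessler’s figures translate into rough per-image and per-channel data rates. The sketch below assumes that “GBps” means gigabytes per second, that the roughly 10 GBps detector output is spread evenly over the images in each 10 Hz cycle, and, for the digitizer, a hypothetical 12-bit sample width packed without padding. None of these assumptions comes from DESY; they only make the arithmetic concrete.

```python
"""Rough data-volume estimates from figures quoted in the article.

Assumptions (not from DESY): "GBps" means gigabytes per second (1 GB = 1e9 bytes),
the detector output is spread evenly across the images in each cycle, and the
digitizer sample width used in the example is hypothetical.
"""

TRAINS_PER_SECOND = 10           # 10 Hz burst cycle
DETECTOR_RATE_BYTES_S = 10e9     # ~10 GBps from the large 2D detectors


def bytes_per_image(rate_bytes_s, images_per_train, trains_per_s=TRAINS_PER_SECOND):
    """Average bytes per image if the detector output is split evenly over images."""
    return rate_bytes_s / trains_per_s / images_per_train


def digitizer_rate_bytes_s(sample_rate_sps, bits_per_sample, channels=1):
    """Raw digitizer output while sampling, assuming samples are packed with no padding."""
    return sample_rate_sps * bits_per_sample / 8 * channels


if __name__ == "__main__":
    for images in (350, 800):
        mb = bytes_per_image(DETECTOR_RATE_BYTES_S, images) / 1e6
        print(f"{images} images per cycle -> about {mb:.2f} MB per image")
    # Hypothetical example: a single 10 GSps channel with 12-bit samples.
    gb_s = digitizer_rate_bytes_s(10e9, 12) / 1e9
    print(f"10 GSps at 12 bits -> about {gb_s:.1f} GB/s per channel while sampling")
```

Under these assumptions, each image averages roughly 1.25 to 2.86 MB, and a single 10 GSps channel at 12 bits produces about 15 GB/s while it is sampling. Because the rate scales linearly with bits per sample and channel count, higher vertical resolution on many channels multiplies the load quickly.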

Data isn’t stored in the µTCA chassis; it is only digitized there and transmitted to hosting servers where scientists from around the world can analyze it. But µTCA is still a data pipe in the end-to-end system, and one that could burst when DESY installs next-generation detectors that may require TBps transfer speeds.

“We are using a CPU in the µTCA system to control the AMCs, but also to get the data off them. We do some pre-processing and then send it off, usually via 10 GbE if it’s data or 1 GbE into the control system,” Gessler continues. “This means the bottleneck right now is the 10 GbE, as it’s the highest speed we have going out of the µTCA crate.”
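Ethernet rates are quoted in bits per second, while the detector rates in this article are quoted in bytes per second, so the two are easy to conflate. This sketch converts line rates to GB/s and ignores framing and protocol overhead, so real payload throughput will be somewhat lower. The 10 GB/s stream in the example is purely illustrative; the article does not say that detector data passes through the µTCA crate’s uplink.

```python
"""Compare Ethernet line rates (bits/s) with data rates quoted in bytes/s.

Framing and protocol overhead are ignored, so real payload throughput is lower.
"""
import math

BITS_PER_BYTE = 8


def gbps_to_gbytes_per_s(gbps):
    """Convert a line rate in gigabits per second to gigabytes per second."""
    return gbps / BITS_PER_BYTE


def links_needed(stream_gbytes_per_s, link_gbps):
    """Minimum number of links at a given line rate to carry a stream, ignoring overhead."""
    return math.ceil(stream_gbytes_per_s / gbps_to_gbytes_per_s(link_gbps))


if __name__ == "__main__":
    for link in (1, 10, 40, 100):
        print(f"{link:>3} GbE ~ {gbps_to_gbytes_per_s(link):.3f} GB/s")
    # Illustrative only: links needed for a 10 GB/s stream at two line rates.
    for link in (10, 100):
        print(f"10 GB/s over {link} GbE: {links_needed(10, link)} link(s)")
```

A 10 GbE uplink tops out near 1.25 GB/s, so by this crude measure a 10 GB/s stream would need eight such links, or a single 100 GbE link.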

When Standards Aren’t So Simple

Revision 2.0 of the µTCA base specification supports 40GBASE-KR4 for data transfers of up to 40 Gbps across the backplane. But 40GBASE-KR4 simply aggregates four 10GBASE-KR lanes, so it doesn’t raise the bandwidth per lane: each 40 Gbps port consumes four backplane lanes, which offers no practical gain from a port-density perspective.
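The port-density point can be made concrete by converting signaling rates to data rates. The sketch uses the IEEE 802.3 per-lane signaling rates for 10GBASE-KR and 40GBASE-KR4 (10.3125 GBd) and, for comparison, the 100GBASE-KR4 variant used in AdvancedTCA (25.78125 GBd), and applies the 64b/66b ratio. Treating 64b/66b as the only coding overhead is a simplification for illustration.

```python
"""Per-lane versus aggregate data rate for IEEE 802.3 backplane Ethernet PHYs.

Per-lane signaling rates are the IEEE 802.3 values; converting them to data
rates with the 64b/66b ratio is a simplification that ignores FEC details
and higher-layer overhead.
"""
from fractions import Fraction

ENCODING_64B66B = Fraction(64, 66)

# name: (number of lanes, signaling rate per lane in GBd)
PHYS = {
    "10GBASE-KR": (1, Fraction("10.3125")),
    "40GBASE-KR4": (4, Fraction("10.3125")),
    "100GBASE-KR4": (4, Fraction("25.78125")),
}


def lane_and_aggregate_gbps(lanes, baud_gbd, encoding=ENCODING_64B66B):
    """Return (data rate per lane, aggregate data rate) in Gbps as exact fractions."""
    per_lane = baud_gbd * encoding
    return per_lane, per_lane * lanes


if __name__ == "__main__":
    for name, (lanes, baud) in PHYS.items():
        per_lane, total = lane_and_aggregate_gbps(lanes, baud)
        print(f"{name}: {lanes} lane(s) x {float(per_lane):g} Gbps = {float(total):g} Gbps")
```

Both 10GBASE-KR and 40GBASE-KR4 carry 10 Gbps per lane, so a 40 Gbps port simply occupies four lanes; only a faster lane, such as the 25 Gbps lanes of 100GBASE-KR4, raises the bandwidth each lane delivers.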

That doesn’t mean there aren’t options. For instance, Intel finally released PCIe Gen 4 support on its 11th generation chipsets earlier this year, which offers data transfers of up to 32 GBps in each direction over 16 lanes. And four 25 Gbps backplane lanes have been combined to deliver 100 GbE in AdvancedTCA systems, the big brother of µTCA, for more than five years. Neither of those technologies is state-of-the-art when it comes to electrical backplane technology:

- The PCIe Gen 5 spec was finalized in 2019

- The IEEE standardized 100 Gbps Ethernet over copper traces in 2014

- PAM4 signaling is now commercially available in interconnect solutions capable of carrying PCIe or Ethernet signals at 56 Gbps and 112 Gbps data rates.
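The “up to 32 GBps over 16 lanes” figure for PCIe Gen 4 follows from the per-lane transfer rate and the 128b/130b line encoding used by Gen 3 through Gen 5. This sketch computes raw per-direction bandwidth and ignores packet and protocol overhead, so it gives an upper bound rather than achievable throughput; the function name is illustrative.

```python
"""Approximate per-direction PCIe bandwidth for Gen 3 to Gen 5 links.

Gen 3, 4, and 5 use 128b/130b encoding; packet and protocol overhead are
ignored, so the results are upper bounds on usable throughput.
"""

GT_PER_S = {3: 8, 4: 16, 5: 32}   # transfer rate per lane, in GT/s
ENCODING = 128 / 130              # 128b/130b line-encoding efficiency


def pcie_gbytes_per_s(gen, lanes):
    """Raw per-direction bandwidth in GB/s for a PCIe Gen 3-5 link."""
    return GT_PER_S[gen] * ENCODING * lanes / 8


if __name__ == "__main__":
    for gen in (3, 4, 5):
        print(f"PCIe Gen {gen} x16: {pcie_gbytes_per_s(gen, 16):.1f} GB/s per direction")
```

Gen 4 x16 works out to about 31.5 GB/s per direction, usually rounded to 32 GB/s, and Gen 5 doubles that to about 63 GB/s.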

According to Rehlich, DESY and its partners are currently running simulations and are “quite certain that we can do 100 GbE using 4x 25 Gbps lanes and PCIe Gen 4.” On the surface, this would provide some amount of bandwidth relief without completely overhauling the µTCA standard. But that’s just the surface.

“If you want faster communications, either PCI Express Gen 4 or 5 or even 100G Ethernet, you need switches in the crate that control all of the µTCA communication,” Rehlich explains. “Those would consume more power than what we can provide now in the defined 80 W per AMC slot.”

Engineers at the research center are considering doubling the total µTCA system power budget to 2 kW. That would enable not only faster network switching but also high-performance FPGA and GPU computing that could be used for AI workloads.

However, this is where things begin to unravel. The first issue is that crosstalk generated by these higher-performance compute and connectivity solutions would degrade the more sensitive electronics in the crate.

“I'm not sure how much processing and extremely-high-speed systems are valuable in the µTCA crate if we also want to use it for very sensitive signals,” Gessler says. “We have digitizers directly in the crate that accept sensitive low-voltage analog signals. The risk could be, if you combine very powerful computational systems with very high speed and a lot of very sensitive signals on a very compact chassis, you might end up compromising either one or the other, right?”

Should all of this be in the same system? Going further, is a standard even the right option for this use case?

When Standards Mature: Balancing Market Demand

The situation at DESY illustrates one of the few disadvantages of an industry standard: the need to reach some level of consensus. Generally speaking, the market drives consensus in an industry standard. But whose market?

The µTCA standard serves markets with longer lifecycle requirements like industrial controls, network infrastructure, and test and measurement. The longer deployment cycles mean that fewer sockets are ready to be upgraded in a given period of time, so it takes longer for critical mass to grow around certain technical requirements.

At the same time, the standard, like many other board- and system-level standards, was designed with the x86 architecture in mind. As mentioned, Intel’s 11th generation processors are the first to support PCIe Gen 4, arriving nearly a decade after Intel introduced PCIe Gen 3 on its server (2nd generation Sandy Bridge) and desktop (3rd generation Ivy Bridge) chipsets.

Still, it’s only a matter of time before these applications and chipsets advance to today’s state-of-the-art technologies, such as PCIe Gen 5 and 100+ GbE speeds over some type of backplane. If a standard like µTCA is to keep pace, a demanding community like high-energy physics must drive it to those performance levels.

Jan Marjonovic is a Senior FPGA Developer at the MicroTCA Tech Lab at DESY, a division of the institute that aims to “find new use cases for the MicroTCA and be a service provider to other institutes and companies.” From DESY’s perspective, Marjonovic said, the objective is “to extend the installation base, extend the user base, and then find help with the community.”

While high-energy physics is one of the most active µTCA communities, it is a small one in terms of unit volume. That said, its total investment in particle accelerators, quantum computing instrumentation, nuclear fusion and fission equipment, and so on runs to many billions of dollars, and using a standard like µTCA helps protect those investments.

That is, if a standard like µTCA can continue supporting their needs.

“µTCA.4 was always a niche product, and it will remain a niche product,” Marjonovic says. “But if DESY was the only one using µTCA, this would not be a standard. There are at least 20 or 30 institutes already using it and when you go to the workshops there are a lot of people there.

“There is, at minimum, a market that is large enough to sustain technology suppliers that cater to the physics market. That’s already the first milestone,” he continues. “The physics community on its own needs standards so that companies can collaborate and build together.”

Can Accelerators Accelerate a Standard?

µTCA technology suppliers like VadaTech, N.A.T. Europe, Samtec, and others are all actively involved in the previously mentioned full-channel simulations to determine the viability of more power and higher-speed interconnects in µTCA systems. Of course, running tests and implementing a new business, engineering, and manufacturing strategy are two different things. That’s especially true if you’re waiting for the market to catch up to the technology. It’s even more true when you’re dealing with a standard that is designed to support interoperability and, to an extent, backward compatibility.

DESY engineers and other members of the physics community understand this and have a vested interest in preserving that interoperability and compatibility. After all, Rehlich points out that “one of the reasons µTCA was selected is so that all the very different subsystems can use the same standard, which eases software development. If you have a unified, standardized system you can also standardize your software and firmware much better than if you have a heterogeneous system.”

But how and when to move forward are the questions that must be answered when standards mature and the market is confronted with balancing performance and demand.

“Worst case, we make an intermediate step,” Rehlich says. “We can do 4x 25 GbE and PCIe Gen 4 while we make all of the definitions and define the protocols for an updated µTCA.4 spec. We can work that out this year.

“We want to keep this as a viable standard, so we have to follow what the technology is doing and the CPUs these days. Intel CPUs provide PCI Express Gen 4, so a crate should be able to do that,” he explained. “FPGAs are higher power and higher performance now, so I think the standard has to follow.

“This is not the time to make a completely new standard,” the control system veteran continued. “When we have optical communication on the backplane, that’s a good point in time to generate a completely new standard, but that kind of technology is not yet available. So, I think we have to keep with the standards we have to make sure people do not lose their investment in the technology, all the electronics they bought, and the knowledge, of course.”