Advanced Process Nodes Make Emulation Essential

August 27, 2018

This is part three of a series. Read part two here.

The semiconductor industry embodies a relentless march from today’s processes, which have small features, to future process nodes, which will involve feature sizes that might seem crazy today. Then again, today’s features probably seem ridiculously small when viewed by someone working in the 1980s.

New process nodes with smaller features enable more transistors on a chip; it has always been so. Verification tools of all types – including emulation – have required constant upgrades to keep up with the capacity needed to test out the most advanced chips.

But with the latest developments, we’ve crossed a line. It’s no longer just a capacity game: verification that used to be someone else’s problem may now be your problem, and emulators, in particular, have had to step up to support new verification tasks.

Bringing it All Together

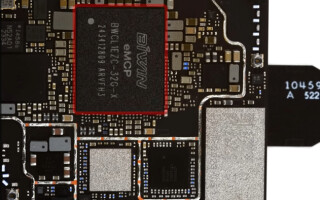

What’s changed is that separate components of a computing platform – blocks that used to reside on separate chips – can now be integrated together into a broad range of general and specialized systems-on-chip (SoCs). Not only must all blocks be verified, but their interactions must as well.

Your typical SoC integrates one or more processors with a wide range of supporting IP blocks. The most obvious task, given that we now have a complete computing engine, including memory, is to verify the SoC while software is running on individual processors – or even while multiple processors interoperate.

Without emulation or hardware-assisted verification, such software verification is completely impractical; software takes far too long to execute using simulation. Simulation runs at speeds on the order of 1 Hz; simply booting Linux takes millions of cycles. At 1 Hz, 1 million cycles would take over 11 days to complete – meaning that simply booting the system to a point where it can start running application software could take weeks – with additional weeks for checking out the rest of the software. It’s just not going to happen.
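The arithmetic behind that estimate can be sketched in a few lines. The 1 Hz rate and the 1 million cycle count come from the paragraph above; the 1 MHz emulation rate is an assumed round number chosen only for comparison, not a figure from this article or a specification of any product.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def wall_clock_days(cycles: int, clock_hz: float) -> float:
    """Days of wall-clock time needed to execute `cycles` at `clock_hz`."""
    return cycles / clock_hz / SECONDS_PER_DAY


# Figures from the text: 1 million cycles at roughly 1 Hz.
sim_days = wall_clock_days(1_000_000, 1.0)

# Assumption for illustration only: an emulator running at 1 MHz.
emu_seconds = wall_clock_days(1_000_000, 1_000_000.0) * SECONDS_PER_DAY

print(f"simulation at 1 Hz: {sim_days:.1f} days")
print(f"emulation at an assumed 1 MHz: {emu_seconds:.1f} s")
```

Because wall-clock time scales inversely with the effective clock rate, every factor of ten in speed removes a factor of ten from the wait.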

So, the first thing that emulation provides is the speed necessary for checking out software. But that’s just the start. Speed has had to make room for other important parameters – with none more important than power. With both the current emphasis on increasing the battery life of handheld devices and an overall interest in drawing less power even from wall plugs, power must now be thoroughly vetted.

Two trends impact power testing. The first is the proliferation of different modes. If you’re building an SoC for a mobile device, for example, you’ve got modes for checking the internet, watching videos, listening to music, getting driving directions, sending texts, and – oh yeah – making phone calls. All combinations and permutations should ideally be tested to make sure that no high-power corner case lurks undetected.
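To get a feel for how quickly those combinations grow, here is a minimal sketch that enumerates every non-empty set of concurrently active modes. The mode labels are shorthand for the examples in the paragraph above, and treating each subset as one power scenario is a simplifying assumption; a real test plan would also account for ordering, transitions, and workload within each mode.

```python
from itertools import combinations

# Shorthand labels for the modes named in the text (hypothetical identifiers).
MODES = ["web", "video", "music", "navigation", "text", "call"]


def mode_combinations(modes):
    """Yield every non-empty set of modes that could be active together."""
    for size in range(1, len(modes) + 1):
        yield from combinations(modes, size)


scenarios = list(mode_combinations(MODES))
print(len(scenarios))  # 2**6 - 1 = 63 scenarios for six modes
```

Each added mode doubles the count, which is why exhaustive coverage of mode combinations gets expensive quickly.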

The second aspect of power testing involves monitoring how power responds while software executes. A broad range of software scenarios must be tested to ensure that critical functions don’t drain a battery faster than expected.

With SoCs that execute a broad range of software that may be unknown at design time – like laptop computers and smartphones – lower-level drivers and other infrastructure software may be the focus of your verification. But for purpose-built devices – especially those intended for the Internet of Things – you will also want to check out the dedicated software operating at the application level. Only emulation makes that possible within the context of the combined hardware and software.

Stacks and Stacks of Verification

Your system is also likely to contain a variety of stacks supporting communications and security. Communication stacks typically involve a combination of hardware and software. The lowest layers are cast into transistors, while the upper layers are implemented in software. All layers must interact with their neighbors, whether that means staying within hardware, staying within software, or moving back and forth between hardware and software layers.

While we’ve been verifying communications for a long time, security has been elevated from, “We should think about this sometime,” to, “We’ve got to have this!” And it’s a new field for most engineers, having been the domain of only a few expert specialists in the past.

Security may or may not also involve a combination of hardware and software. Low-level IP for compute-intensive functions like encryption and hashing can be implemented in hardware – or, where performance and power permit (but price-points don’t), those same algorithms can run in software.

Security stacks intersect with the communication stacks. If your communication setup involves, say, Transport Layer Security (TLS), then verification of your communication stack will necessarily include some security verification. You’ll need to be sure that authentication is working properly, that keys are stowed and sent as needed, and that encryption – both of keys during setup and of content for transmission – is thoroughly tested using emulation.

No Longer Someone Else’s Problem

Security, communications, video processing, audio processing, and the many other functions that our most advanced processes enable now sit together on a single chip. Each of these used to be handled by specialists in their own silos. No more; you own it, even if you have the support of specialists along the way. A single verification plan needs to make sure not only that each of these areas works correctly and with the necessary performance and power, but, more importantly, that interactions and handoffs between these functions happen smoothly and efficiently.

As more advanced process nodes materialize, it’s hard to predict what you’ll specifically have to test on them. But we know that it will involve ever-increasing numbers of transistors and lines of software code. That means you need an emulator family that has the legs to take you forward even beyond the foreseeable future.

Designs at advanced process nodes are increasing rapidly in both transistor count and complexity. Because of this, the Veloce Strato emulation platform is scalable to 15 billion gates. That’s far more capacity than most people can envision today, but, even before we need that many gates, it simply means that you don’t have to wonder whether or not you’ll be able to verify any imaginable chip. You can simply know that you’ll be covered – for at least the next several years, and likely well beyond that.

Jean-Marie Brunet, Sr. Director of Marketing, Mentor, a Siemens Business

Jean-Marie Brunet is the senior marketing director for the Emulation Division at Mentor, a Siemens Business. He has served for over 20 years in application engineering, marketing and management roles in the EDA industry, and has held IC design and design management positions at STMicroelectronics, Cadence, and Micron among others. Jean-Marie holds a master’s degree in Electrical Engineering from I.S.E.N Electronic Engineering School in Lille, France. Jean-Marie Brunet can be reached at [email protected]