Successfully addressing PCIe protocol validation challenges

October 08, 2015


The layered protocol of PCI Express (PCIe) brings about different challenges, and thus, different solutions. Key concerns for PCIe validation teams are interoperability and backward compatibility with previous PCIe generations. This requires tools that validate the parametric and protocol aspects of designs to ensure compliance, and that validate design performance. The layered nature of PCIe drives different test challenges and solutions depending on the area of focus.

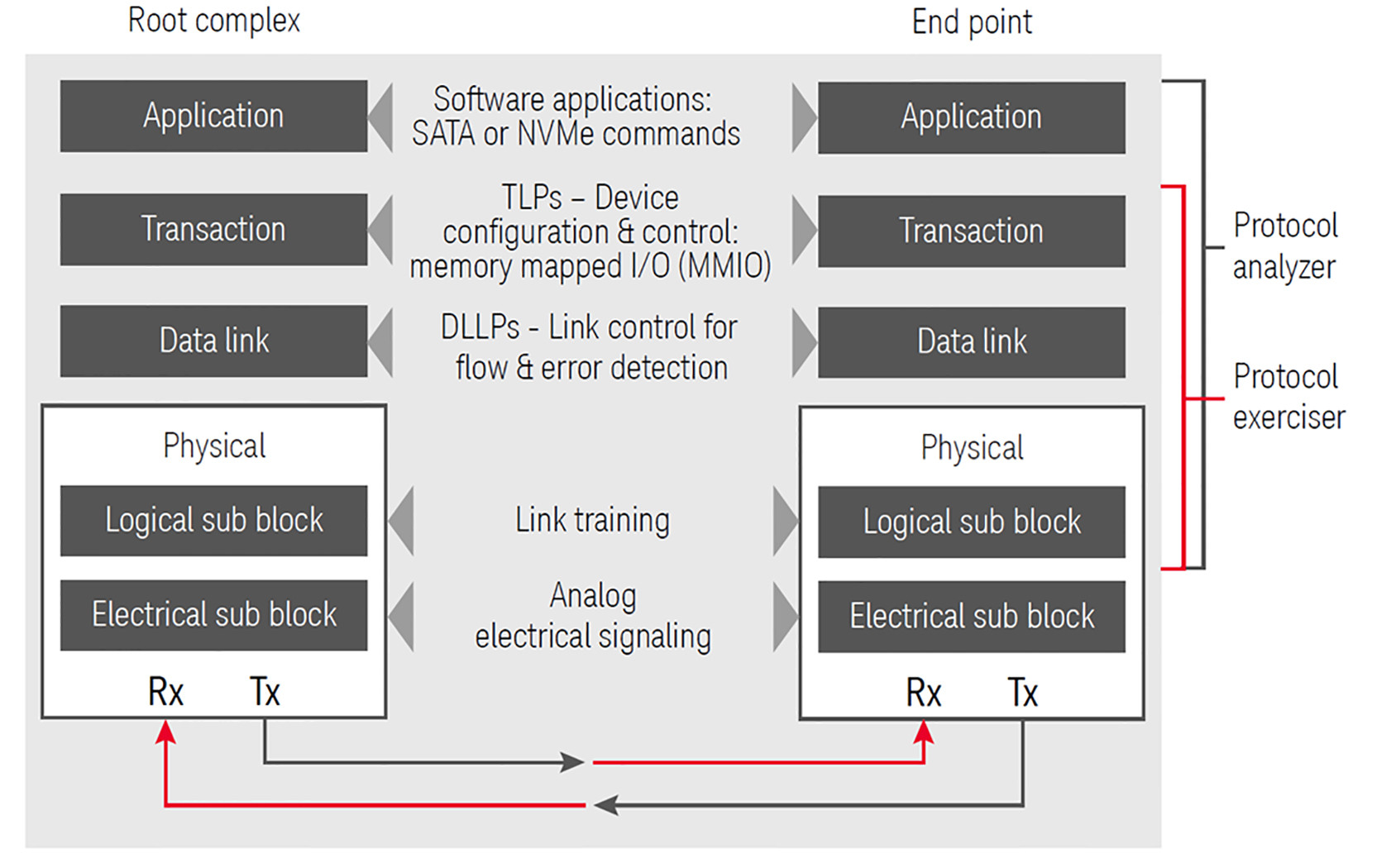

PCIe technology is a layered protocol, as shown in Figure 1, and each layer calls for different types of tests.

Protocol-level validation challenges

Once users achieve a data-valid state at the physical layer, which requires validation of link signaling and LTSSM operations, they test the higher layers at the protocol level with a protocol analyzer and an exerciser. The analyzer connects to the link between two devices in an active state to observe and evaluate data at each level. The exerciser connects as an end device to emulate stressed conditions and record how the device under test (DUT) responds. Validation of the PCIe data link layer is performed by specification tests that check the transfer of data link layer packets (DLLPs), acknowledgements (Acks), negative acknowledgements (Naks), retransmissions, and flow control. Validation teams need robust systems that can recover from all errors, including intermittent failures.
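To make the Ack/Nak and retransmission behavior concrete, here is a minimal Python sketch of a transmitter's replay buffer. It assumes the 12-bit TLP sequence numbers of the PCIe data link layer, where an Ack or Nak carrying sequence number N acknowledges every TLP through N and a Nak additionally asks for everything after N to be replayed. The class and method names are hypothetical, and the replay timer and REPLAY_NUM rollover counting are deliberately left out.

```python
from collections import OrderedDict

SEQ_MOD = 4096  # TLP sequence numbers are 12 bits wide and wrap at 4096


class ReplayBuffer:
    """Hypothetical, simplified model of a transmitter's replay buffer."""

    def __init__(self, first_seq=0):
        self.next_seq = first_seq % SEQ_MOD
        self.unacked = OrderedDict()  # seq -> TLP, oldest first

    def send(self, tlp):
        """Assign the next sequence number and keep a copy until it is acknowledged."""
        seq = self.next_seq
        self.unacked[seq] = tlp
        self.next_seq = (seq + 1) % SEQ_MOD
        return seq

    def _purge_through(self, seq):
        """Drop buffered TLPs up to and including `seq`, using modular ordering."""
        while self.unacked:
            oldest = next(iter(self.unacked))
            if (seq - oldest) % SEQ_MOD >= SEQ_MOD // 2:
                break  # `seq` is older than every buffered TLP: nothing to purge
            self.unacked.popitem(last=False)
            if oldest == seq:
                break

    def on_ack(self, seq):
        """An Ack for `seq` acknowledges every TLP through `seq`."""
        self._purge_through(seq)

    def on_nak(self, seq):
        """A Nak also acknowledges TLPs through `seq`; the rest must be replayed in order."""
        self._purge_through(seq)
        return list(self.unacked.items())
```

For example, after sending four TLPs with sequence numbers 0 to 3, an Ack for 1 frees the first two, and a following Nak for 2 returns only TLP 3 for replay. The modular comparison is what keeps the model correct when sequence numbers wrap from 4095 back to 0.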

The primary challenge of protocol validation is to test system functionality with speed and accuracy so that the product can go to market. Protocol errors must be detected, analyzed, and corrected in an efficient manner.

Debugging the PCIe protocol means capturing at-speed traffic, including power management transitions. Protocol debug tools need to lock onto traffic quickly, then trigger on a unique protocol sequence. Debugging lower-level problems, such as power management, requires exceptionally fast lock times, because the analyzer must reacquire the link within the short training sequence that follows each exit from a low-power state. Once traffic is captured, viewing the data at different levels of abstraction makes it possible to isolate the problem.
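Conceptually, a sequence trigger is a small state machine that advances through an ordered list of conditions while a ring buffer holds recent pre-trigger history. The sketch below models that idea in Python over a list of already decoded events. Real analyzers do this in hardware at line rate, and the event strings and the `SequenceTrigger` class here are hypothetical, not any analyzer's API.

```python
from collections import deque


class SequenceTrigger:
    """Hypothetical trigger: fire when `pattern` events occur in order (others may be interleaved)."""

    def __init__(self, pattern, pre_trigger=4):
        self.pattern = list(pattern)
        self.step = 0                               # index of the next event we are waiting for
        self.history = deque(maxlen=pre_trigger)    # most recent events seen before the trigger

    def feed(self, event):
        """Return the captured window when the trigger fires, else None."""
        if event == self.pattern[self.step]:
            self.step += 1
            if self.step == len(self.pattern):
                self.step = 0
                window = list(self.history) + [event]
                self.history.clear()
                return window
        self.history.append(event)
        return None
```

Feeding the stream `TLP, L1_ENTRY, TLP, RECOVERY, NAK` into a trigger armed on `L1_ENTRY, RECOVERY, NAK` with a three-event pre-trigger buffer fires on the Nak and returns the events leading up to it, which is the context an engineer needs to see what happened around a power-state exit.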

Another key challenge in PCIe protocol debug is gaining access to the signals non-invasively. The probe connected to the PCIe bus must not disturb or change the signals in a way that impacts bus values or operation. Where and how signals are probed depends on each unique system design. Mid-bus probes provide access to traffic but must not degrade signal quality. Slot interposers need to pass signals passively over a long trace without changing them. Validation teams require flexible access with multiple probing options.

With the arrival of PCIe 4.0, the increase in speed to 16 GT/s will continue some of the same trends seen in PCIe 3.0 at 8 GT/s, requiring even greater signal-quality tuning and equalization capabilities to achieve validation.

The NVMe protocol also presents many new test challenges. NVMe is a high-performance, scalable host controller interface for PCIe-connected storage devices. It maps memory from the host machine for moving data to and from the storage device, with low latency and end-to-end data protection. The interface uses multiple queues to provide optimized command submission and completion paths, and supports parallel operation.
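The queue mechanics behind NVMe's submission and completion paths can be sketched with two ring buffers. This minimal Python model follows the NVMe conventions of a host-advanced submission queue tail, a queue-full condition of tail + 1 == head, and completion entries that carry a phase tag which inverts every time the completion queue wraps. Doorbell registers, PRPs, completion-queue overflow checks, and multiple queue pairs are left out, and all names are hypothetical.

```python
class QueuePair:
    """Hypothetical toy model of one NVMe submission/completion queue pair."""

    def __init__(self, depth):
        self.depth = depth
        self.sq = [None] * depth
        self.sq_head = 0               # advanced by the controller as it fetches commands
        self.sq_tail = 0               # advanced by the host as it submits commands
        self.cq = [(None, 0)] * depth  # (command id, phase tag); queue memory starts zeroed
        self.cq_tail = 0               # controller's next completion slot
        self.ctrl_phase = 1            # phase tag the controller writes on the current pass
        self.cq_head = 0               # host's next completion slot
        self.host_phase = 1            # phase tag the host expects for new entries

    def submit(self, cid):
        """Host side: place a command at the SQ tail (a real host then rings the SQ tail doorbell)."""
        if (self.sq_tail + 1) % self.depth == self.sq_head:
            raise RuntimeError("submission queue full")
        self.sq[self.sq_tail] = cid
        self.sq_tail = (self.sq_tail + 1) % self.depth

    def controller_step(self):
        """Controller side: fetch one command and post its completion entry."""
        if self.sq_head == self.sq_tail:
            return False               # submission queue empty
        cid = self.sq[self.sq_head]
        self.sq_head = (self.sq_head + 1) % self.depth
        self.cq[self.cq_tail] = (cid, self.ctrl_phase)
        self.cq_tail = (self.cq_tail + 1) % self.depth
        if self.cq_tail == 0:
            self.ctrl_phase ^= 1       # the phase tag inverts each time the CQ wraps
        return True

    def reap(self):
        """Host side: consume entries whose phase tag matches (then ring the CQ head doorbell)."""
        done = []
        while self.cq[self.cq_head][1] == self.host_phase:
            done.append(self.cq[self.cq_head][0])
            self.cq_head = (self.cq_head + 1) % self.depth
            if self.cq_head == 0:
                self.host_phase ^= 1
        return done
```

The phase tag is what lets the host find new completions without reading a controller register: an entry is new exactly when its tag matches the phase the host expects for the current pass through the queue.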

Protocol validation test setup

The ultimate goal of validation with the analyzer is testing the link and data transmission to assure device functionality and interoperability. To achieve this, the protocol analyzer needs to provide visibility into what happens at each protocol layer. When troubleshooting, users must trace specific errors to the appropriate layer for correction.
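One way to picture "tracing errors to the appropriate layer" is a lookup from error type to the layer that detects it. The mapping below is an illustrative subset modeled on the grouping used for PCIe Advanced Error Reporting (AER) error types; it is not exhaustive, and the `triage` helper and the exact error-name strings are hypothetical.

```python
from collections import Counter

# Illustrative subset of PCIe error types grouped by the detecting layer (assumed mapping).
ERROR_LAYER = {
    "Receiver Error": "physical",
    "Bad TLP": "data link",
    "Bad DLLP": "data link",
    "Replay Timer Timeout": "data link",
    "REPLAY_NUM Rollover": "data link",
    "Data Link Protocol Error": "data link",
    "Surprise Down": "data link",
    "Poisoned TLP": "transaction",
    "ECRC Error": "transaction",
    "Unsupported Request": "transaction",
    "Completion Timeout": "transaction",
    "Completer Abort": "transaction",
    "Unexpected Completion": "transaction",
    "Receiver Overflow": "transaction",
    "Flow Control Protocol Error": "transaction",
    "Malformed TLP": "transaction",
}


def triage(errors):
    """Count logged errors per layer; names not in the table are 'unclassified'."""
    return Counter(ERROR_LAYER.get(name, "unclassified") for name in errors)
```

A burst of Bad TLP and Bad DLLP entries, for instance, points at the data link layer (and often at signal integrity underneath it), while Completion Timeouts point at transaction-layer behavior of the link partner.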

To validate the device, the protocol exerciser needs the ability to emulate either a root complex or an endpoint on the same card. The exerciser acts as an ideal link partner, sending appropriate I/O traffic to stimulate the device under test. The DUT’s error-recovery processes can then be validated by simulating various conditions and scenarios without influencing its performance parameters.

Throughout these functions and tests, users must address the complexities of how and where signal access happens, with consistent representation for accurate data recovery. The probing solution needs to be high-performance and versatile to accommodate different types of system designs. Probing needs to remain non-intrusive, so that access won’t impact signal quality or protocol operation.

Each of the functions below needs to be tested with both an exerciser and an analyzer to ensure that the data link and transaction layers are functional and compliant:

Equalization (EQ) is the process of training the two ends of the link to reliably transfer each bit. Each individual lane must be trained; within a by-4 (x4) link, each lane can have different tuning parameters. Training is dynamic and requires that each lane’s pre-cursor, cursor, and post-cursor values are set correctly. Signal quality is critical for successful operation. The EQ process is critical to a successful link at the PCIe 3.0 speed of 8 GT/s and is even more important for PCIe 4.0 at 16 GT/s.
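The pre-cursor, cursor, and post-cursor values are the taps of the transmitter's three-tap FIR filter. The Python sketch below applies such a filter to a stream of ±1 symbols. It assumes coefficients normalized so that the tap magnitudes sum to 1, with the pre- and post-cursor taps zero or negative; the values used are illustrative rather than PCIe presets, and the function name is hypothetical.

```python
def tx_fir(symbols, pre, main, post):
    """Apply a 3-tap transmit FIR: y[n] = pre*x[n+1] + main*x[n] + post*x[n-1]."""
    if pre > 0 or post > 0:
        raise ValueError("pre- and post-cursor taps are expected to be <= 0")
    if abs(abs(pre) + main + abs(post) - 1.0) > 1e-9:
        raise ValueError("tap magnitudes should sum to 1 (normalized full swing)")
    out = []
    for n, x in enumerate(symbols):
        nxt = symbols[n + 1] if n + 1 < len(symbols) else x  # hold the last symbol at the edge
        prev = symbols[n - 1] if n > 0 else x                # hold the first symbol at the edge
        out.append(pre * nxt + main * x + post * prev)
    return out
```

With only a post-cursor tap (`pre=0.0, main=0.75, post=-0.25`), the stream `1, 1, 1, -1, -1, -1` becomes `0.5, 0.5, 0.5, -1.0, -0.5, -0.5`: the bit right after each transition goes out at full swing while repeated bits are de-emphasized to half amplitude, boosting high-frequency content to offset channel loss. A nonzero pre-cursor tap adds the same kind of emphasis to the bit just before a transition.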

Link Training and Status State Machine (LTSSM) analysis observes and controls the link’s possible states. State transitions handle link up, recovery, and power management. The goal of the LTSSM is to reach a state called L0, where the link is active and data can be transferred. Other LTSSM states provide link control and recovery as well as power-management capabilities: Detect, Polling, and Configuration bring the link up and train it, Recovery retrains it after errors or speed changes, and L0s, L1, and L2 are the power-management states.
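An analyzer that reports LTSSM states can flag unexpected behavior by checking a captured state trace against the allowed transitions. The table below is a simplified subset of the top-level LTSSM (substates and the Loopback, Disabled, and Hot Reset paths are omitted), so treat it as an illustration rather than a spec-complete model; `ALLOWED` and `check_trace` are hypothetical names.

```python
# Simplified subset of top-level LTSSM transitions (assumed, not spec-complete).
ALLOWED = {
    "Detect": {"Polling"},
    "Polling": {"Configuration", "Detect"},
    "Configuration": {"L0", "Recovery", "Detect"},
    "L0": {"Recovery", "L0s", "L1", "L2"},
    "L0s": {"L0", "Recovery"},
    "L1": {"Recovery"},          # leaving L1 goes through Recovery
    "L2": {"Detect"},
    "Recovery": {"L0", "Configuration", "Detect"},
}


def check_trace(states):
    """Return (index, from_state, to_state) for every transition not in ALLOWED."""
    bad = []
    for i in range(1, len(states)):
        src, dst = states[i - 1], states[i]
        if dst not in ALLOWED.get(src, set()):
            bad.append((i, src, dst))
    return bad
```

A normal bring-up followed by an L1 round trip (`Detect, Polling, Configuration, L0, L1, Recovery, L0`) produces no findings, while a trace that jumps from Polling straight to L0 is reported at that index.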

Packet capture looks at individual PCIe packets to decode responses as well as device configuration and enumeration. Memory reads and writes transfer data across the link based on the addresses contained in the packets.
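To illustrate how captured bytes become the read/write and address information described above, here is a minimal decoder for a few common TLP headers. It assumes the PCIe 3.0-style layout: a 3-bit Fmt and 5-bit Type in byte 0, a 10-bit Length in bytes 2 and 3, Requester ID and Tag in the second DW, and the address in the third (and, for 64-bit addressing, fourth) DW of memory requests. Only a handful of Fmt/Type pairs are recognized, and the function and dictionary names are hypothetical.

```python
import struct

# (Fmt, Type) -> name for a few common TLPs
TLP_TYPES = {
    (0b000, 0b00000): "MRd32",
    (0b001, 0b00000): "MRd64",
    (0b010, 0b00000): "MWr32",
    (0b011, 0b00000): "MWr64",
    (0b000, 0b00100): "CfgRd0",
    (0b010, 0b00100): "CfgWr0",
    (0b000, 0b01010): "Cpl",
    (0b010, 0b01010): "CplD",
}


def decode_tlp_header(hdr: bytes) -> dict:
    """Decode selected fields of a big-endian TLP header (illustrative subset)."""
    fmt = hdr[0] >> 5
    typ = hdr[0] & 0x1F
    length = ((hdr[2] & 0x03) << 8) | hdr[3]
    info = {
        "type": TLP_TYPES.get((fmt, typ), "unknown"),
        "length_dw": length or 1024,   # a Length field of 0 encodes 1024 DW
        "has_data": bool(fmt & 0b010),  # Fmt bit 1 set means the TLP carries a payload
    }
    if info["type"].startswith(("MRd", "MWr")):
        requester_id, tag = struct.unpack(">HB", hdr[4:7])
        info["requester_id"] = requester_id
        info["tag"] = tag
        if fmt & 0b001:  # 4-DW header: 64-bit address split across DW2 and DW3
            hi, lo = struct.unpack(">II", hdr[8:16])
            info["address"] = (hi << 32) | (lo & ~0x3)
        else:            # 3-DW header: 32-bit address in DW2
            (lo,) = struct.unpack(">I", hdr[8:12])
            info["address"] = lo & ~0x3
    return info
```

For example, the 12 header bytes `40 00 00 01 01 00 05 0F FE DC 10 00` decode as a one-DW 32-bit memory write from requester 0x0100, tag 5, to address 0xFEDC1000.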

Performance analysis covers flow control, which affects response times and overall throughput. Flow control prevents receive-buffer overflows, and analyzing it can clearly identify credit starvation issues that negatively impact data throughput.
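The credit check that makes a transmitter stall can be sketched as follows. This Python model tracks a single credit type (data credits, where one credit covers 16 bytes, or 4 DW) and uses the modular gating test the PCIe base specification describes for transmitters, (CREDIT_LIMIT - (CREDITS_CONSUMED + required)) mod 2^n <= 2^n / 2, with n = 12 for unscaled data credits. Header credits and infinite-credit advertisements are ignored, and the class, its fields, and the stall counter are hypothetical.

```python
FIELD_BITS = 12                 # unscaled data-credit counters are 12 bits wide
MOD = 1 << FIELD_BITS
DATA_CREDIT_BYTES = 16          # one data credit = 4 DW


class DataCreditGate:
    """Hypothetical transmitter-side gate for one data-credit type."""

    def __init__(self, initial_limit):
        self.credit_limit = initial_limit % MOD  # advertised by the receiver (InitFC/UpdateFC)
        self.credits_consumed = 0
        self.stalls = 0                          # times a TLP had to wait for credits

    @staticmethod
    def credits_for(payload_bytes):
        return -(-payload_bytes // DATA_CREDIT_BYTES)  # ceiling division

    def try_send(self, payload_bytes):
        """Send if enough credits remain; otherwise record a stall."""
        need = self.credits_for(payload_bytes)
        if (self.credit_limit - (self.credits_consumed + need)) % MOD <= MOD // 2:
            self.credits_consumed = (self.credits_consumed + need) % MOD
            return True
        self.stalls += 1
        return False

    def on_update_fc(self, new_limit):
        """Receiver returned credits: the advertised limit moves forward."""
        self.credit_limit = new_limit % MOD
```

With an initial limit of 32 credits, two 256-byte writes go out and a third stalls until an UpdateFC raises the limit. A stall count that keeps climbing relative to the number of TLPs sent is the signature of the credit starvation described above.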

After all of these tests pass and a stable communication channel is established, the next step is to validate the application layer (e.g., NVMe) over the PCIe bus. For NVMe protocol testing, users need a tool to observe how the different parts interact: the data link layer, acknowledgements, flow control, multiple queues, and multiple commands all need to be coordinated. There may be thousands of packets to analyze in one NVMe read. A good tool will summarize the communication and help users drill down into the details needed to resolve any operational issues. A protocol analyzer will continue to be a valuable tool for users to validate designs as the NVMe specifications evolve.
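The "thousands of packets" figure follows from simple arithmetic. The Python estimate below counts only the main TLPs of a single NVMe read, under stated assumptions: the controller returns read data in memory-write TLPs no larger than the Max Payload Size (with aligned buffers, so no extra splits), fetches the 64-byte command in one memory read answered by one completion, and signals completion with a 16-byte completion-queue write plus an MSI-X interrupt write. PRP-list fetches and all DLLPs are ignored, and the function name and parameters are hypothetical.

```python
def tlps_for_nvme_read(transfer_bytes, max_payload_size=256):
    """Rough TLP count for one NVMe read, under the simplifying assumptions above."""
    data_writes = -(-transfer_bytes // max_payload_size)  # ceiling: device writes data into host memory
    doorbells = 2       # host MWr to the SQ tail doorbell and to the CQ head doorbell
    command_fetch = 2   # controller MRd of the 64-byte SQ entry, host CplD in return
    completion = 2      # controller MWr of the 16-byte CQ entry, plus the MSI-X interrupt MWr
    return data_writes + doorbells + command_fetch + completion
```

With a 256-byte Max Payload Size, a 128 KiB read works out to 518 TLPs, and a 1 MiB read (if the controller's maximum data transfer size allows it in one command) to 4,102 TLPs, before counting a single Ack or UpdateFC DLLP.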

Emulation is another important aspect of NVMe testing. Users need the ability to create multiple queues and commands in operation to ensure that a controller can manage the different types of configurations. The companion tool for this sort of emulation process is called an NVMe exerciser. These tools can execute validation test sequences that identify common implementation errors.
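As an example of the kind of check an exerciser-driven test sequence might run, the Python sketch below validates raw completion-queue entries against the commands that were submitted. It relies on the NVMe completion entry layout (Dword 2: SQ Head Pointer in bits 15:0 and SQ Identifier in bits 31:16; Dword 3: Command Identifier in bits 15:0, Phase Tag in bit 16, Status Field in bits 31:17). The function names and the particular set of checks are illustrative assumptions.

```python
import struct


def make_cqe(cid, sq_id, sq_head, status=0, phase=1):
    """Build a 16-byte little-endian completion entry (hypothetical test helper)."""
    dw2 = (sq_id << 16) | sq_head
    dw3 = (status << 17) | (phase << 16) | cid
    return struct.pack("<4I", 0, 0, dw2, dw3)


def check_completions(entries, outstanding, sq_id, sq_depth):
    """Return (entry index, problem) pairs found in raw 16-byte CQ entries."""
    problems = []
    pending = set(outstanding)          # command identifiers still awaiting completion
    for i, raw in enumerate(entries):
        _dw0, _dw1, dw2, dw3 = struct.unpack("<4I", raw)
        sq_head = dw2 & 0xFFFF
        entry_sq_id = dw2 >> 16
        cid = dw3 & 0xFFFF
        status = dw3 >> 17              # 15-bit Status Field; 0 means success
        if entry_sq_id != sq_id:
            problems.append((i, "wrong SQ identifier"))
        if sq_head >= sq_depth:
            problems.append((i, "SQ head pointer out of range"))
        if cid not in pending:
            problems.append((i, "unknown or duplicate command identifier"))
        pending.discard(cid)
        if status != 0:
            problems.append((i, f"error status 0x{status:04x}"))
    return problems
```

A duplicate completion for the same command identifier, a completion that names the wrong submission queue, or an out-of-range head pointer are all the kind of implementation errors such a sequence can catch automatically instead of by reading traces by hand.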

The ultimate goals for validating a device to PCI-SIG standards are interoperability, reliability, backward compatibility with previous PCIe standards, and speed to market. Protocol-level testing requires tools designed with flexibility, not just for the current generation’s challenges, but ideally with built-in capabilities that anticipate the evolution of these layers in future versions of the specifications. Whether the design is PCIe or NVMe-based, the right set of comprehensive test solutions will continue to help validation teams overcome these challenges with the tools and support they need for fast and accurate testing.

The layers shown in Figure 1 are:

- The physical layer is the physical link for information transfer. It has an electrical sub-block for the analog signaling that sends data, and a logical sub-block, which manages equalization training (from both the receiver and the transmitter) to set up the specific parameters needed for a clean signal across the link.

- The data link layer, above the physical layer, manages link control. Most acknowledgements, retransmissions, and error detection and correction take place here. The data link layer also handles flow control, which ensures that the other end is ready to receive data.

- The transaction layer includes configuration and control for moving data across the link, along with memory-mapped I/O (MMIO), which allows data packets to read and write directly to memory. This layer also generates TLPs (transaction layer packets), such as memory read and write requests.

- Finally, storage software operations originate in the application layer, where protocols like NVMe exist as applications for storage management. The NVMe application commands, which focus on storing and retrieving data, are packaged within the TLPs.