Multi-Thread AI Processor Features Low Power Supercomputing

March 04, 2022


Working with AI models can be highly demanding in terms of computational resources, and more processing power brings greater power requirements. To address this problem, Ceremorphic developed the ThreadArch multi-thread processor technology.

Ceremorphic has developed a novel architecture to support next-generation applications such as AI model training, HPC, drug discovery, and metaverse processing. Built on a 5 nm process and tuned to modern silicon geometries, the chip architecture targets performance-demanding markets and is designed to meet today's high-performance computing concerns of reliability, security, and power consumption at scale.

At the heart of the chip is analog circuitry lying beneath the digital circuits. The key features of the Hierarchical Learning Processor (HLP) include a custom 2 GHz Machine Learning Processor (MLP) and a custom 2 GHz FPU. ThreadArch, the patented multi-thread processing macro-architecture, is based on a RISC-V processor that handles proxy processing and runs at 1 GHz. The chip also includes a custom 1 GHz video engine for metaverse processing, an Arm M55 v1 core for low-power, efficient operation, and a custom-designed x16 PCIe 6.0/CXL 3.0 connectivity interface. Ceremorphic claims a soft error rate of 100,000⁻¹ with this technology.

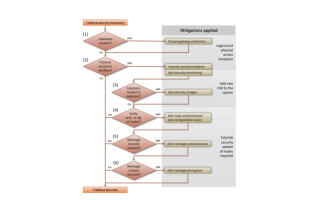

According to Ceremorphic's plans, the chip would rely on the host system's CPU for general computing, while Ceremorphic's hardware would accelerate the training portion of the workload. The architecture is also intended to defend against attacks from quantum-enabled systems.

With these specifications and more than 100 patented technologies, the Ceremorphic HLP multi-thread chip promises supercomputing capability while consuming considerably less power. The HLP aims for optimal energy efficiency by assigning each task to the processing unit best suited to it. The technology has not been tested yet, so its performance remains theoretical, although Ceremorphic expects its patented technologies to meet these targets.
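The idea of "using the appropriate processing system" can be pictured as a dispatcher that routes each kind of work to a dedicated unit. The sketch below is purely illustrative and is not Ceremorphic's scheduler: the `Workload` categories, the `UNITS` table, and the `dispatch` function are hypothetical names, built only from the roles and clock rates the article lists for each unit (the article gives no clock rate for the M55 core).

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Workload(Enum):
    # Hypothetical workload categories, mirroring the roles the article assigns to each unit.
    ML_TRAINING = "ml_training"
    FLOATING_POINT_HPC = "fp_hpc"
    VIDEO_METAVERSE = "video"
    PROXY_CONTROL = "proxy"
    LOW_POWER_BACKGROUND = "low_power"


@dataclass(frozen=True)
class ProcessingUnit:
    name: str
    clock_ghz: Optional[float]  # None where the article states no clock rate


# Units and clock rates come from the article; the mapping itself is an illustrative assumption.
UNITS = {
    Workload.ML_TRAINING: ProcessingUnit("MLP", 2.0),
    Workload.FLOATING_POINT_HPC: ProcessingUnit("FPU", 2.0),
    Workload.VIDEO_METAVERSE: ProcessingUnit("video engine", 1.0),
    Workload.PROXY_CONTROL: ProcessingUnit("RISC-V", 1.0),
    Workload.LOW_POWER_BACKGROUND: ProcessingUnit("M55", None),
}


def dispatch(workload: Workload) -> ProcessingUnit:
    """Return the unit this hypothetical scheduler routes the workload to."""
    return UNITS[workload]


if __name__ == "__main__":
    for w in Workload:
        unit = dispatch(w)
        clock = f"{unit.clock_ghz} GHz" if unit.clock_ghz is not None else "clock not stated"
        print(f"{w.value:>10} -> {unit.name} ({clock})")
```

A fixed lookup table keeps the sketch simple; a real heterogeneous scheduler would also weigh load, data location, and measured power, none of which the article describes.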

Making Supercomputing Abilities Available at Super-Low Power Consumption

The ThreadArch multi-thread processor promises the advantages of a high-performance chip at low power consumption. It also offers software support for open AI frameworks, with an optimized compiler and application libraries. Although no practical tests have verified its performance yet, Ceremorphic is confident that the performance will be up to par.