The Benefits of Containerization for Embedded Systems

February 23, 2023


Containers are a standardized, portable packaging technology. They were first introduced for developing and deploying web applications and microservices, and they have gained wide adoption in the IT industry.

Today, we also see them being adopted in the embedded industry, for example, in the development of automotive electronic control units (ECUs). This applies to both Adaptive AUTOSAR and Classic AUTOSAR, the latter being meant for deeply embedded safety- and security-critical systems where C and C++ are the dominant programming languages.

Containers make it quick to reproduce identical environments for embedded development, testing, staging, and production at any stage of software development. This increases productivity and code quality while reducing labor and costs.

With containers, organizations and their suppliers have achieved greater agility, flexibility, and reliability. Companies use containers to:

- Shorten software development time to market.

- Improve code quality.

- Address challenges in managing the growing complexity of development ecosystems.

- Respond dynamically to software delivery challenges in a fast, continuously evolving market.

One example is the deployment of containers in modern Agile development workflows such as DevOps and DevSecOps.

Before getting into the details and the benefits, let’s put this technology in context by answering the following questions:

- Why do containers exist and what are they?

- How do containers fit within the software development life cycle?

- How do they affect business outcomes?

Container Technology

The Open Container Initiative (OCI) is a Linux Foundation project established in 2015 by various companies to create open industry standards around container formats and runtimes. These standards are intended to make compliant containers portable across operating systems, hardware, CPU architectures (when an image is built for each target architecture), public and private clouds, and more.
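Portability across CPU architectures depends on having an image built for each target. As a sketch of how the OCI image specification handles this, an image index lists one manifest per platform, and a client picks the entry that matches its OS and architecture. The function name `select_manifest`, the sample index, and the placeholder digests below are hypothetical; only the field names (`manifests`, `platform`, `os`, `architecture`, `variant`, `digest`) follow the OCI image index format, and the entries are abbreviated (real descriptors also carry a `size`).

```python
from typing import Optional

# Hypothetical image index; the digests are placeholders, not real images.
AMD64_DIGEST = "sha256:" + "a" * 64
ARM64_DIGEST = "sha256:" + "b" * 64

INDEX = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [
        {
            "mediaType": "application/vnd.oci.image.manifest.v1+json",
            "digest": AMD64_DIGEST,
            "platform": {"os": "linux", "architecture": "amd64"},
        },
        {
            "mediaType": "application/vnd.oci.image.manifest.v1+json",
            "digest": ARM64_DIGEST,
            "platform": {"os": "linux", "architecture": "arm64", "variant": "v8"},
        },
    ],
}


def select_manifest(index: dict, os_name: str, architecture: str,
                    variant: Optional[str] = None) -> str:
    """Return the digest of the first manifest matching the requested platform."""
    for entry in index.get("manifests", []):
        platform = entry.get("platform", {})
        if platform.get("os") != os_name or platform.get("architecture") != architecture:
            continue
        # If no variant is requested, any variant of this OS/architecture matches.
        if variant is None or platform.get("variant") == variant:
            return entry["digest"]
    raise LookupError(f"no manifest for {os_name}/{architecture}")


# An arm64 target and an x86-64 CI runner resolve the same index differently.
print(select_manifest(INDEX, "linux", "arm64"))
print(select_manifest(INDEX, "linux", "amd64"))
```

If no entry matches (for example, an architecture nobody built for), the lookup fails rather than silently returning an image that cannot run on the target.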

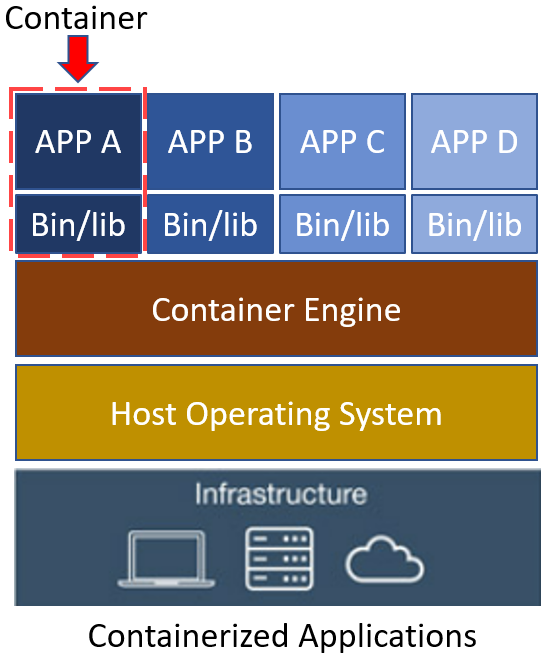

A container is an application bundled with its dependencies, such as binaries, language-specific runtime libraries, and configuration files. Containers have their own processes, network interfaces, and mounts. They are isolated from each other and run on top of a “container engine” for easy portability and flexibility.

In addition, containers on the same host share that host’s operating system kernel. They can run on any of the following:

- Linux, Windows, and macOS (on macOS, through a lightweight Linux virtual machine)

- Virtual machines or physical servers

- A developer's machine or an on-premises data center

- The public cloud

Figure 1: Containerization architecture

It’s important to understand the role of the container engine, since it provides crucial functionality such as:

- Operating system level virtualization.

- Container runtime to manage the container’s life cycle (execution, supervision, image transfer, storage, and network attachments).

- Kernel namespaces to isolate resources.
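To make the namespace isolation concrete: under the OCI runtime specification, each container bundle carries a `config.json` that lists, among other things, the process to run, the root filesystem, and the Linux namespaces to create. The sketch below builds an abbreviated config of that shape as a Python dict. The helper name `minimal_runtime_config`, the hostname, and the rootfs path are hypothetical, and a configuration that a runtime such as runC would accept as-is needs more fields (mounts, for example).

```python
import json

# Namespace types named in the OCI runtime spec for Linux (a subset is used here).
NAMESPACES = ("pid", "network", "mount", "ipc", "uts")


def minimal_runtime_config(args, rootfs="rootfs", hostname="ecu-build"):
    """Return a dict shaped like an abbreviated OCI runtime config.json."""
    return {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": list(args),                       # the containerized process
            "env": ["PATH=/usr/local/bin:/usr/bin:/bin"],
            "cwd": "/",
        },
        "root": {"path": rootfs, "readonly": True},   # image filesystem, read-only
        "hostname": hostname,                         # requires a "uts" namespace
        "linux": {"namespaces": [{"type": ns} for ns in NAMESPACES]},
    }


print(json.dumps(minimal_runtime_config(["/bin/sh"]), indent=2))
```

Each entry in `namespaces` asks the runtime to give the container its own instance of that kernel resource, which is how containers end up with their own processes, network interfaces, and mounts.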

There are many container engines, runtimes, and managed container services, including Docker, runC, CoreOS rkt, LXD, CRI-O, Podman, containerd, Microsoft Hyper-V, LXC, Google Kubernetes Engine (GKE), Amazon Elastic Container Service (ECS), and more.

Other concepts worth mentioning at a glance are container images and container orchestration.

A container image is a static file with executable code that includes everything a container needs to run. A container, in turn, is a running instance of a container image. In large and complex deployments, there can be many containers within a containerization architecture, so managing the life cycle of all those containers becomes especially important.
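The image/instance distinction can be modeled in a few lines. In the sketch below, an `Image` is immutable and identified by a SHA-256 digest of its content, mirroring how OCI images are content-addressed, while each `Container` created from it has its own ID and mutable state. The class names and the byte string standing in for image layers are hypothetical simplifications; real images consist of multiple layers plus a configuration.

```python
import hashlib
import itertools
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Image:
    """Immutable image, identified by a digest of its content (simplified)."""
    name: str
    content: bytes  # stands in for the image's layers and configuration

    @property
    def digest(self) -> str:
        return "sha256:" + hashlib.sha256(self.content).hexdigest()


_next_id = itertools.count(1)


@dataclass
class Container:
    """A running instance of an image, with its own mutable state."""
    image: Image
    container_id: int = field(default_factory=lambda: next(_next_id))
    state: str = "created"

    def start(self) -> None:
        self.state = "running"


toolchain = Image("ecu-toolchain", b"compiler + SDK + libraries")
first, second = Container(toolchain), Container(toolchain)
first.start()
print(first.container_id, first.state, second.container_id, second.state)
```

Both containers point at the same immutable image (same digest), yet each has its own identity and life cycle, which is exactly what an engine or orchestrator has to track.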

Container orchestration manages workloads and services by provisioning, deploying, and scaling containers up or down, among other tasks. Popular container orchestration solutions include Kubernetes, Docker Swarm, and Marathon.
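Orchestrators such as Kubernetes work by reconciliation: they compare the desired state (for example, how many replicas of each service should run) with the observed state and act to close the gap. The function below is a simplified sketch of that idea, not any orchestrator’s API; the service names are hypothetical.

```python
def reconcile(desired: dict, running: dict) -> list:
    """Compare desired vs. running replica counts and return scaling actions.

    Services present only in `running` are treated as desired = 0,
    so they are scaled down to nothing.
    """
    actions = []
    for service in sorted(set(desired) | set(running)):
        want = desired.get(service, 0)
        have = running.get(service, 0)
        if want > have:
            actions.append(("scale_up", service, want - have))
        elif want < have:
            actions.append(("scale_down", service, have - want))
    return actions


desired_state = {"hil-simulator": 3, "build-agent": 1}
observed_state = {"hil-simulator": 1, "build-agent": 2, "legacy-agent": 1}
for action in reconcile(desired_state, observed_state):
    print(action)
```

A real orchestrator runs this comparison continuously and also handles failures, placement, and rollouts; the sketch only shows the core comparison.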

Let’s now consider the technical and business gains from the flexibility of having your applications packaged up with all their dependencies so that they run quickly and reliably from one computing environment to another.

Technical and Business Gains

The development ecosystem of embedded software systems can be highly complex, and having large teams all work within a common or identical environment compounds that complexity. For example, development team environments include compilers, SDKs, libraries, IDEs, and, in some cases, modern technologies like artificial intelligence (AI). All of these tools and solutions must work together, along with their dependencies and constantly evolving release versions that patch discovered security vulnerabilities, fix identified flaws, change licensing, and more.

Additionally, organizations should have separate environments for development, testing/validation, production, and perhaps disaster recovery. With containers, organizations can effectively manage all these complex environments by scaling application dependencies up or down, reverting a development environment to a specific state, and rolling out container images as needed, ensuring every team member gets a consistent development environment. Today, many organizations instead replicate the development and test environments on each developer’s or tester’s machine, which leaves room for human error.

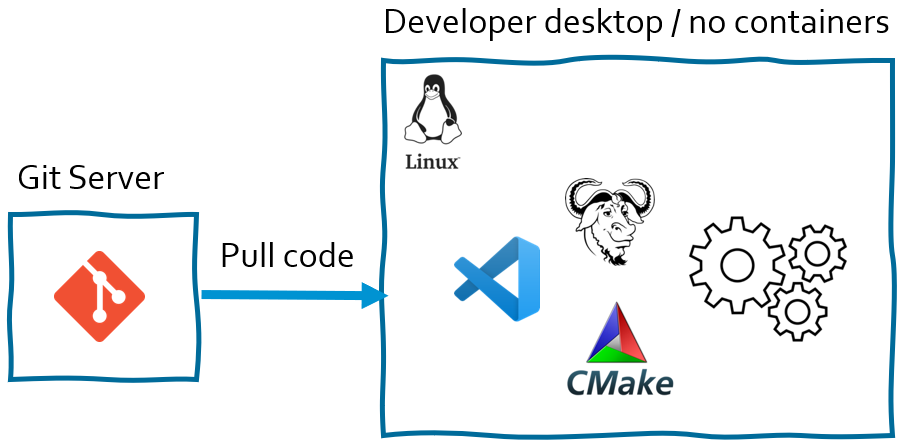

Figure 2: Sample build and run on developer’s machine with no use of containers.

I recall a time when I had developed and tested an embedded application on my desktop. It worked perfectly, so I committed the code. Much later, during acceptance testing, the QA team informed me that the application did not work. I started debugging the reported problem but could not reproduce it.

I brought other development team members into the fold to help identify and resolve the issue, but we just could not reproduce the problem. After days of investigating, collaborating with the QA team, and at times grasping at straws, we finally started to investigate the QA’s build environment.

Everything was identical except that the QA team had updated the operating system (OS) and compiler on their machines. The newer OS version had changed how task prioritization was handled. The code logic was sound, but the running task was being blocked by another task with the same priority. Decreasing the priority of either competing task by one solved the problem.

Because code logic was the first suspected culprit, we spent a significant amount of labor and time investigating and resolving the issue. The investigation pulled in more development and QA engineers, we held additional meetings and status reports, and other assignments were pushed out. The full ripple effect is not known, but substantial costs were incurred. Had development environments been centrally managed and deployed as containers, the development and QA teams’ environments would have stayed in sync, and this problem could have been avoided entirely.
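The root cause in this story was environment drift: the QA machines ran a newer OS and compiler than the developer’s. When both teams pull the same container image, the toolchain is fixed by the image and no such drift can arise. As a hedged illustration of what the containers prevent, this sketch compares two environment manifests and reports every mismatched component. The manifest format and version strings are hypothetical.

```python
def environment_drift(reference: dict, observed: dict) -> dict:
    """Return {component: (reference_version, observed_version)} for every mismatch.

    A component missing from one side is reported with None for that side.
    """
    drift = {}
    for component in sorted(set(reference) | set(observed)):
        expected, actual = reference.get(component), observed.get(component)
        if expected != actual:
            drift[component] = (expected, actual)
    return drift


# Hypothetical version strings modeled on the story above.
dev_env = {"os": "rtos-4.1", "compiler": "cc-9.2", "sdk": "2.0"}
qa_env = {"os": "rtos-4.2", "compiler": "cc-9.3", "sdk": "2.0"}

print(environment_drift(dev_env, qa_env))
```

With a shared container image, both sides would report the same manifest and the result would be empty.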

Embedded Deployment Strategies

Software development using containers can be configured and deployed in many ways. Organizations can choose and evolve their use of containers based on the tools they already use, the level of automation they want, and how their teams are organized.

One strategy is to use a common container on each developer’s host machine to build and run applications. This ensures that every developer uses exactly the same build tools, tool versions, and run environment.
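A common way to use a shared build container on a developer’s host is to mount the local source tree into the container and run the build there. The sketch below only assembles the `docker run` argument list (it does not execute anything); `--rm`, `-v`, and `-w` are standard `docker run` options, while the image name, paths, and `make all` target are hypothetical.

```python
import shlex


def docker_build_command(image: str, source_dir: str, build_cmd: list) -> list:
    """Assemble a `docker run` argument list that builds source_dir inside image."""
    return [
        "docker", "run",
        "--rm",                       # remove the container when the build finishes
        "-v", f"{source_dir}:/src",   # mount the host source tree into the container
        "-w", "/src",                 # run the build from the mounted directory
        image,
        *build_cmd,
    ]


cmd = docker_build_command(
    "registry.example.com/ecu-toolchain:1.4",  # hypothetical shared toolchain image
    "/home/dev/ecu-app",                        # hypothetical project checkout
    ["make", "all"],
)
print(shlex.join(cmd))
```

Referencing the image by digest (`image@sha256:<digest>`) instead of by tag would keep every developer on the exact same image even if the tag is later moved.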

Many embedded teams use Jenkins, GitHub, Azure, GitLab, and other tools for continuous integration and continuous delivery (CI/CD). A CI/CD setup might use two containers: one makes and builds the application, while the other runs it. This illustrates the flexibility that containers offer.
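A two-container CI stage can be sketched as a build container that writes its output into a shared named volume and a run container that mounts the same volume read-only. The volume name, image names, `OUT_DIR` Makefile variable, and binary path below are hypothetical; the `-v name:/path` and `:ro` volume syntax is standard Docker. The `runner` parameter lets the control flow be exercised without invoking Docker.

```python
import subprocess

VOLUME = "ecu-artifacts"  # hypothetical named volume shared by both stages


def two_stage_pipeline(build_image: str, run_image: str) -> list:
    """Return docker commands for a build stage followed by a run stage."""
    build = [
        "docker", "run", "--rm",
        "-v", f"{VOLUME}:/out",              # build output lands in the shared volume
        build_image, "make", "OUT_DIR=/out",  # assumes the Makefile honors OUT_DIR
    ]
    run = [
        "docker", "run", "--rm",
        "-v", f"{VOLUME}:/app:ro",           # run stage sees the artifacts read-only
        run_image, "/app/ecu_app",           # hypothetical binary name
    ]
    return [build, run]


def run_pipeline(stages, runner=subprocess.run) -> bool:
    """Run stages in order and stop at the first non-zero exit code."""
    for cmd in stages:
        if runner(cmd).returncode != 0:
            return False
    return True
```

Keeping the stages in separate images means the run image can stay small and free of compilers, while the build image can be updated without touching the runtime environment.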

Organizations may also have geographically distributed teams. Libraries of container images make it easy to share containers and avoid reinventing the wheel, which promotes efficiency by reusing existing containers for different purposes. Shared containers also help maintain consistent quality throughout the development supply chain.

Embedded Test Automation in the CI/CD Pipeline

Containers are also being used for software testing in DevOps workflows. By integrating containerized testing solutions into the CI/CD pipeline, organizations can run static analysis to check compliance with standards and guidelines such as MISRA C:2012, MISRA C++ 202x, AUTOSAR C++14, CERT, CWE, OWASP, and others.

Software test automation tools such as Parasoft C/C++test provide a container image on Docker Hub. Unit testing can also be performed in the pipeline, including structural code coverage of statements, branches, and/or modified condition/decision coverage (MC/DC). Only after testing completes successfully is the software committed to the master branch.
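MC/DC requires showing that each condition in a decision independently affects the decision’s outcome. The sketch below finds, for each condition, pairs of test vectors that differ only in that condition and produce different outcomes. This is the “unique-cause” form of MC/DC; other accepted forms, such as masking MC/DC, relax the requirement. It illustrates the criterion only and is not how C/C++test or any other tool computes coverage; the example decision is hypothetical.

```python
from itertools import product


def mcdc_pairs(decision, n_conditions: int) -> dict:
    """Map each condition index to its unique-cause independence pairs.

    A pair (v, w) differs only in condition i (False in v, True in w)
    and the decision evaluates differently on v and w.
    """
    vectors = list(product([False, True], repeat=n_conditions))
    pairs = {}
    for i in range(n_conditions):
        pairs[i] = []
        for v in vectors:
            if v[i]:
                continue  # visit each pair once, starting from the False side
            w = v[:i] + (True,) + v[i + 1:]
            if decision(*v) != decision(*w):
                pairs[i].append((v, w))
    return pairs


def example_decision(a: bool, b: bool, c: bool) -> bool:
    return a and (b or c)


pairs = mcdc_pairs(example_decision, 3)
for condition, found in pairs.items():
    print(condition, found)
```

For `a and (b or c)`, the four vectors (T,F,F), (T,T,F), (T,F,T), and (F,T,F) contain an independence pair for every condition, which matches the usual n + 1 minimum for three conditions.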

Deploying containers within the build process in this way produces substantial gains in development efficiency and code quality. With multiple engineers working in parallel in this fast, automated continuous integration cycle, a solid software base is produced and maintained throughout the entire product life cycle.
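The gating rule described in this section (commit to the master branch only after static analysis and unit testing succeed) can be expressed as a small check on a pipeline’s results. The `TestReport` fields, the function name, and the thresholds below are hypothetical; a real tool’s report format and a project’s coverage targets would come from the tool documentation and the applicable safety standard.

```python
from dataclasses import dataclass


@dataclass
class TestReport:
    static_analysis_violations: int  # unsuppressed findings, e.g. MISRA or CERT
    unit_tests_failed: int
    statement_coverage: float        # percent
    branch_coverage: float           # percent
    mcdc_coverage: float             # percent


def may_merge(report: TestReport, thresholds: dict) -> tuple:
    """Return (allowed, reasons); allowed only if every check passes."""
    reasons = []
    if report.static_analysis_violations > 0:
        reasons.append(f"{report.static_analysis_violations} static analysis violation(s)")
    if report.unit_tests_failed > 0:
        reasons.append(f"{report.unit_tests_failed} failing unit test(s)")
    for metric, minimum in thresholds.items():
        value = getattr(report, metric)  # metric must name a coverage field above
        if value < minimum:
            reasons.append(f"{metric} {value:.1f}% < {minimum:.1f}%")
    return (not reasons, reasons)


report = TestReport(0, 0, 100.0, 98.0, 92.5)
print(may_merge(report, {"statement_coverage": 100.0, "mcdc_coverage": 95.0}))
```

Returning the reasons alongside the verdict lets the CI job explain why a merge was blocked instead of failing silently.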

Conclusion

Organizations building embedded real-time safety- and security-critical systems are adopting DevOps workflows that include containers, and others are in the process of adopting a containerized strategy. Those that have built a CI/CD pipeline and used it for several years report that they can better predict software delivery and more easily accommodate changes in requirements and design.

Along with improved productivity and lower testing costs, development teams have reported higher product quality and faster time to market. Furthermore, embedded organizations have told us of measurable drops in QA problem reports and customer tickets.

References

The Linux Foundation Projects – Open Container Initiative

Online: https://opencontainers.org/

Ricardo Camacho, Director of Regulatory Safety and Security Compliance for Parasoft’s embedded testing solutions, has over 30 years of expertise in systems and software engineering of embedded real-time, safety-critical, and security-critical systems.