Developer Pairs Embedded Compute with AI for Mobility Device

October 05, 2023

Story

Autonomous mobility conversations tend to focus on cars or trucks, but one developer in India is leveraging AI and embedded computing to bring autonomous navigation capabilities to wheelchairs.

Kabilan KB is an undergraduate majoring in robotics and automation at the Karunya Institute of Technology and Sciences, and has earned several certifications from the NVIDIA Deep Learning Institute. Drawing on that training, he is using the NVIDIA Jetson platform for edge AI and robotics to build his autonomous wheelchair.

The young engineer said he was thinking of his cousin who has mobility issues when he conceived the project, and that he hopes it will be helpful to other people who might have similar mobility struggles but can’t use a manual or traditional motorized wheelchair. The project was funded by the Program in Global Surgery and Social Change, which is jointly positioned under the Boston Children’s Hospital and Harvard Medical School.

According to NVIDIA, the wheelchair uses lidar, cameras, and depth sensors to perceive its surroundings and plan a safe path for the user.

“A person using the motorized wheelchair could provide the location they need to move to, which would already be programmed in the autonomous navigation system or path-planned with assigned numerical values,” Kabilan KB said. “For example, they could press ‘one’ for the kitchen or ‘two’ for the bedroom, and the autonomous wheelchair will take them there.”
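The numbered-destination idea in the quote can be illustrated with a small lookup table. This is only a minimal sketch: the `Goal` type, the `DESTINATIONS` table, the coordinates, and the `select_goal` helper are all hypothetical names chosen for illustration and are not taken from Kabilan's project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Goal:
    """A named destination with a position in the map frame (assumed to be metres)."""
    name: str
    x: float
    y: float


# Hypothetical key-to-room table; the coordinates are made up for illustration.
DESTINATIONS = {
    "1": Goal("kitchen", 4.0, 1.5),
    "2": Goal("bedroom", -2.0, 3.0),
}


def select_goal(key: str) -> Optional[Goal]:
    """Return the goal assigned to a pressed key, or None if the key is unassigned."""
    return DESTINATIONS.get(key.strip())
```

In a design like this, an unassigned key returns `None`, so the caller can ignore the press rather than send the chair to an undefined location.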

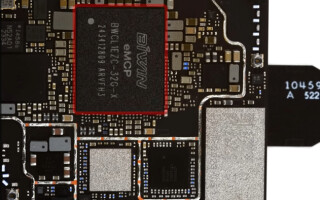

He used the NVIDIA Jetson Nano Developer Kit for processing the real-time data coming in from the environmental sensors, and then a computer vision AI parses that data to find potential obstacles that the chair needs to avoid. According to NVIDIA, the dev kit is the brain of the autonomous system. The Jetson generates the map and the path that will be free from collisions before the device begins the route, and then updates that path based upon real-time sensor data.
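The plan-then-update behavior described above can be sketched on a simple occupancy grid. The article does not say which planner the wheelchair uses, so the breadth-first search below is a stand-in, and `plan_path` and `replan_if_blocked` are hypothetical helper names.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Shortest 4-connected path on a grid where 1 marks an obstacle.

    Returns a list of (row, col) cells from start to goal, or None if
    no collision-free path exists.
    """
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and not grid[nr][nc] and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


def replan_if_blocked(grid, path, position, goal, new_obstacles):
    """Mark newly sensed obstacles on the grid and replan only if they cut the path."""
    for r, c in new_obstacles:
        grid[r][c] = 1
    blocked = set(new_obstacles)
    if any(cell in blocked for cell in path):
        return plan_path(grid, position, goal)
    return path
```

A real system would typically work on a lidar-built map with a cost-aware planner; the point here is only the two-phase structure of planning before departure and revising the route when sensors report something new.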

The Jetson kit and sensors are retrofitted onto a basic motorized wheelchair to make it autonomous. Kabilan said he wanted the system to be as cost-effective and accessible as possible.

Kabilan trained the AI algorithms for the autonomous wheelchair using YOLO object detection on the Jetson Nano. For the underlying software he chose the Robot Operating System, or ROS, a popular framework for building robotics applications.
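One way detections from an object detector could feed an obstacle check is sketched below. It assumes the detections have already been decoded into labeled bounding boxes in pixel coordinates. The `Detection` type, the `obstacles_in_corridor` function, and the threshold and corridor values are hypothetical and do not reflect the YOLO output format or the project's actual code.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Detection:
    """A decoded detection: class label, confidence, and horizontal pixel extent."""
    label: str
    confidence: float
    x_min: float
    x_max: float


def obstacles_in_corridor(
    detections: List[Detection],
    image_width: float,
    min_confidence: float = 0.5,   # assumed threshold, not from the article
    corridor_fraction: float = 0.4,  # assumed share of the image width straight ahead
) -> List[Detection]:
    """Keep confident detections whose box overlaps the central band of the image."""
    left = image_width * (1.0 - corridor_fraction) / 2.0
    right = image_width * (1.0 + corridor_fraction) / 2.0
    return [
        d for d in detections
        if d.confidence >= min_confidence and d.x_max > left and d.x_min < right
    ]
```

In a ROS-based design, a check like this would usually live in its own node that subscribes to detection messages and publishes a stop or replan signal, though the article does not describe how the project is organized.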

“The NVIDIA Jetson Nano’s real-time processing speed prevents delays or lags for the user,” he said. In the future he would like to make the chair controllable by thought alone, perhaps by using EEG readings. “I want to make a product that would let a person with a full mobility disorder control their wheelchair by simply thinking, ‘I want to go there.’”

Check out the technical components of the autonomous wheelchair on his blog.