NVIDIA Jetson AGX Orin, Isaac Sim AI Updates Bring Omniverse Closer to the Embedded Edge

March 23, 2022


The new Jetson AGX Orin Developer Kit delivers 275 TOPS of performance at 15-60 W and pairs with advances in the NVIDIA Isaac and JetPack SDKs to accelerate AI development and deployment, bringing the output of Omniverse tools to autonomous robots at the edge.

A few years ago, everyone’s boss was asking them for an IoT strategy, then a prototype, and then, inevitably, an implementation that generated noticeable ROI. That cycle is repeating, only this time with artificial intelligence and machine learning. The big problem is that AI is harder and takes longer.

Most of the enabling technologies required to create an IoT proof of concept were already in place by the time technical and business leadership got around to asking for them. With AI, on the other hand, the process of creating a model that is even remotely useful is long and tedious: gathering massive data sets, labeling the data with tags, comparing different model types before selecting the best one for your use case, and training the selected model against the labeled data set. Then you repeat that process again and again until you reach acceptable levels of performance and accuracy.

This process can take a year or more, and that doesn’t even consider hardware.

NVIDIA has released a series of products and capabilities over the last six months to help AI developers accelerate this process. Notably, at GTC Fall 2021, the company introduced the Omniverse Replicator engine, a synthetic data generation tool that helps developers train AI models. As part of the company’s Isaac Sim and Drive Sim simulation environments, Replicator produces and labels domain-specific, ground-truth data that can augment real-world data sets manually captured and classified by human engineers and data scientists. This alone can shave months of development time off the creation of autonomous vehicles, robots, and other AI-powered machines.

But that’s just step one. Once Replicator has generated data, that data needs to inform a model. This is addressed by the Train, Adapt, Optimize (TAO) Toolkit, which has been enhanced over the past few years to support training existing models with synthetic data from tools like the Omniverse Replicator. As you can see in the diagram above, the workflow integrates these models with application frameworks like NVIDIA DeepStream for computer vision, NVIDIA Riva for AI-enabled speech, and Isaac ROS, which, through a collaboration with the Open Source Robotics Foundation (OSRF), now supports the Robot Operating System (ROS) to accelerate the development of autonomous mobile robots (AMRs).

These SDKs and toolchains are optimized for use with the NVIDIA JetPack SDK, the OS and application development environment tailored for the company’s Jetson line of embedded systems-on-module (SoMs) and development kits.

Introducing Orin: Another Step in Shrinking the AI Development Lifecycle

NVIDIA’s stronger emphasis on integrating application frameworks and OS SDKs isn’t new, but it shouldn’t go unnoticed, as it represents another step toward shortening the AI systems engineering lifecycle and reducing time to market for autonomous machines. For example, the aforementioned blending of ROS and the Isaac environment has led to innovations like Isaac Nova Orin, a compute and sensor reference platform that leverages ROS-based GEMs, which are packages for computer vision and image processing in AMR perception stacks.

The inputs required for these functions, as defined by the Nova Orin reference platform, come from a sensor suite of up to six cameras, three lidars, and eight ultrasonic sensors. Of course, these can be simulated, and Nova Orin includes tools that allow developers to test their designs in NVIDIA Omniverse, which, not coincidentally, pair nicely with the features of the Omniverse Replicator. However, according to the company, prototyping this reference platform in the real world requires some 550 TOPS of computational horsepower.

The drive to reduce AI development time from months or years down to weeks or days has resulted in an evolution of the NVIDIA software suite that now addresses everything from data capture to actuation. That evolution warrants yet another iteration of high-performance, power-conscious edge AI computing hardware, announced yesterday at the company’s semiannual GTC event: the NVIDIA Jetson AGX Orin Developer Kit and SoMs.

The Jetson AGX Orin Developer Kit delivers what you’d expect from a new NVIDIA hardware platform – more performance, equivalent power, and a reasonable price point for developers looking to implement streamlined AI models on an edge inferencing target. The developer kit, available now for $1,999, delivers up to 275 TOPS of INT8 sparse computing performance at a configurable power consumption of 15 to 60 W on the strength of the following compute subsystem:

- Ampere GPU with 2048 CUDA cores and 64 Tensor cores

- 12-core Arm Cortex-A78AE CPU

- Dual NVIDIA Deep Learning Accelerators (DLAs)

- Dual Programmable Vision Accelerators (PVAs)

- H.265 video encode (4K60 to 1080p30) and decode (up to 8K30)

These are supported by 32 GB of 256-bit LPDDR5 memory with bandwidth of up to 204.8 GB/s, enough to capitalize on the massive parallelism of the GPU and Tensor Cores in demanding AI inferencing deployments. There is also 64 GB of eMMC 5.1 storage onboard.
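The quoted bandwidth follows from the bus width and the memory's data rate. The short Python sketch below works backward from the article's two figures (a 256-bit bus and 204.8 GB/s) to the per-pin data rate they imply. It assumes peak bandwidth is simply bus width in bytes times transfers per second, with 1 GB = 10^9 bytes; the helper names are illustrative and not part of any NVIDIA tool.

```python
# Sanity check of the article's Jetson AGX Orin memory figures.
# Assumption: peak bandwidth = bus width (bytes) x data rate (transfers/s), 1 GB = 1e9 bytes.
# The data rate is derived from the article's numbers, not taken from a datasheet.

BUS_WIDTH_BITS = 256         # 256-bit LPDDR5 interface (from the article)
PEAK_BANDWIDTH_GB_S = 204.8  # peak bandwidth in GB/s (from the article)


def implied_data_rate_mts(bus_width_bits: int, bandwidth_gb_s: float) -> float:
    """Return the data rate in megatransfers/s implied by a peak bandwidth."""
    bytes_per_transfer = bus_width_bits / 8
    return bandwidth_gb_s * 1e9 / bytes_per_transfer / 1e6


def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_mts: float) -> float:
    """Return peak bandwidth in GB/s for a given bus width and data rate."""
    return bus_width_bits / 8 * data_rate_mts * 1e6 / 1e9


if __name__ == "__main__":
    rate = implied_data_rate_mts(BUS_WIDTH_BITS, PEAK_BANDWIDTH_GB_S)
    print(f"Implied data rate: {rate:.0f} MT/s")
    print(f"Recomputed peak: {peak_bandwidth_gb_s(BUS_WIDTH_BITS, rate):.1f} GB/s")
```

Running it prints an implied data rate of 6400 MT/s, i.e. 32 bytes per transfer times 6.4 billion transfers per second. This is a theoretical peak; sustained bandwidth under real inferencing workloads will be lower.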

This lineup delivers an 8x performance increase over the NVIDIA Jetson AGX Xavier, along with a 3.3x increase in AI execution that jumps to 4.9x when paired with the imminent version 5.0 of the NVIDIA JetPack SDK. This is achieved despite occupying the same 110 mm x 110 mm x 71.65 mm form factor as Xavier, a footprint that includes the module, carrier board, and thermal solution.

On the Jetson AGX Orin developer kit, these capabilities are made accessible via a variety of interfaces capable of supporting use cases like the Nova Orin reference design, including:

- 16-lane MIPI CSI-2 connector

- x16 PCIe slot (x8 PCIe Gen4 lanes)

- 10 GbE via RJ45 port

- 2x USB 3.2 Gen2 with USB-PD support (Type-C), 2x USB 3.2 Gen2 (Type-A), 2x USB 3.2 Gen1 (Type-A), USB 2.0 (Micro-B)

- A variety of pin headers for serial communications, automation, audio, debug, fans, etc.

- M.2 Key M and Key E slots and a microSD slot for expansion and storage

In addition, the developer kit comes with an 802.11ac/abgn wireless Network Interface Controller and USB-C power adapter and cord. It also maintains pin and software compatibility with the Jetson AGX Xavier platform for maximum application portability.

AI: From Development in the Metaverse to Deployment at the Edge

Two Jetson AGX Orin developer kits are enough to satisfy the requirements of the Nova Orin reference design, which until now would likely have required a full-blown edge server. Removing those form factor and power consumption restrictions is key to enabling the proliferation of accurate, low-latency edge AI in systems ranging from AMRs to autonomous vehicles.
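The two-kit figure is simple arithmetic on peak numbers: 550 TOPS required divided by 275 TOPS per kit. A minimal Python sketch of that sizing check follows. `devkits_needed` is a hypothetical helper, and it assumes peak INT8 sparse TOPS add linearly across kits, which ignores interconnect overhead and the gap between peak and achieved throughput.

```python
# Hypothetical sizing helper: how many Jetson AGX Orin dev kits cover a compute budget?
# Assumption: peak TOPS add linearly across kits (ignores interconnect overhead and
# the usual gap between peak and achieved throughput).
import math

ORIN_DEVKIT_PEAK_TOPS = 275    # INT8 sparse peak per dev kit, from the article
NOVA_ORIN_REQUIRED_TOPS = 550  # NVIDIA's figure for prototyping Nova Orin, from the article


def devkits_needed(required_tops: float, tops_per_kit: float = ORIN_DEVKIT_PEAK_TOPS) -> int:
    """Return the smallest number of kits whose combined peak TOPS meets the requirement."""
    if tops_per_kit <= 0:
        raise ValueError("tops_per_kit must be positive")
    if required_tops <= 0:
        return 0
    return math.ceil(required_tops / tops_per_kit)


if __name__ == "__main__":
    kits = devkits_needed(NOVA_ORIN_REQUIRED_TOPS)
    print(f"{NOVA_ORIN_REQUIRED_TOPS} TOPS needs {kits} kit(s) "
          f"({kits * ORIN_DEVKIT_PEAK_TOPS} TOPS peak)")
```

For the Nova Orin budget, `devkits_needed(550)` returns 2; a requirement even slightly above 550 TOPS would round up to a third kit.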

But because you can’t realistically drop developer kits into a production system, NVIDIA announced a new line of SoMs based on Jetson AGX Orin that will be available in Q4 of this year. As seen in the slide below, the modules are a subset of the developer kit; they come in different compact form factors (AGX or NX) and performance levels but feature the same connectors as the rest of the Jetson family of modules.

For those migrating from the AGX Xavier platform, the closest analog is the Jetson AGX Orin 32 GB that prices out at $899 in quantities of 1,000-plus.

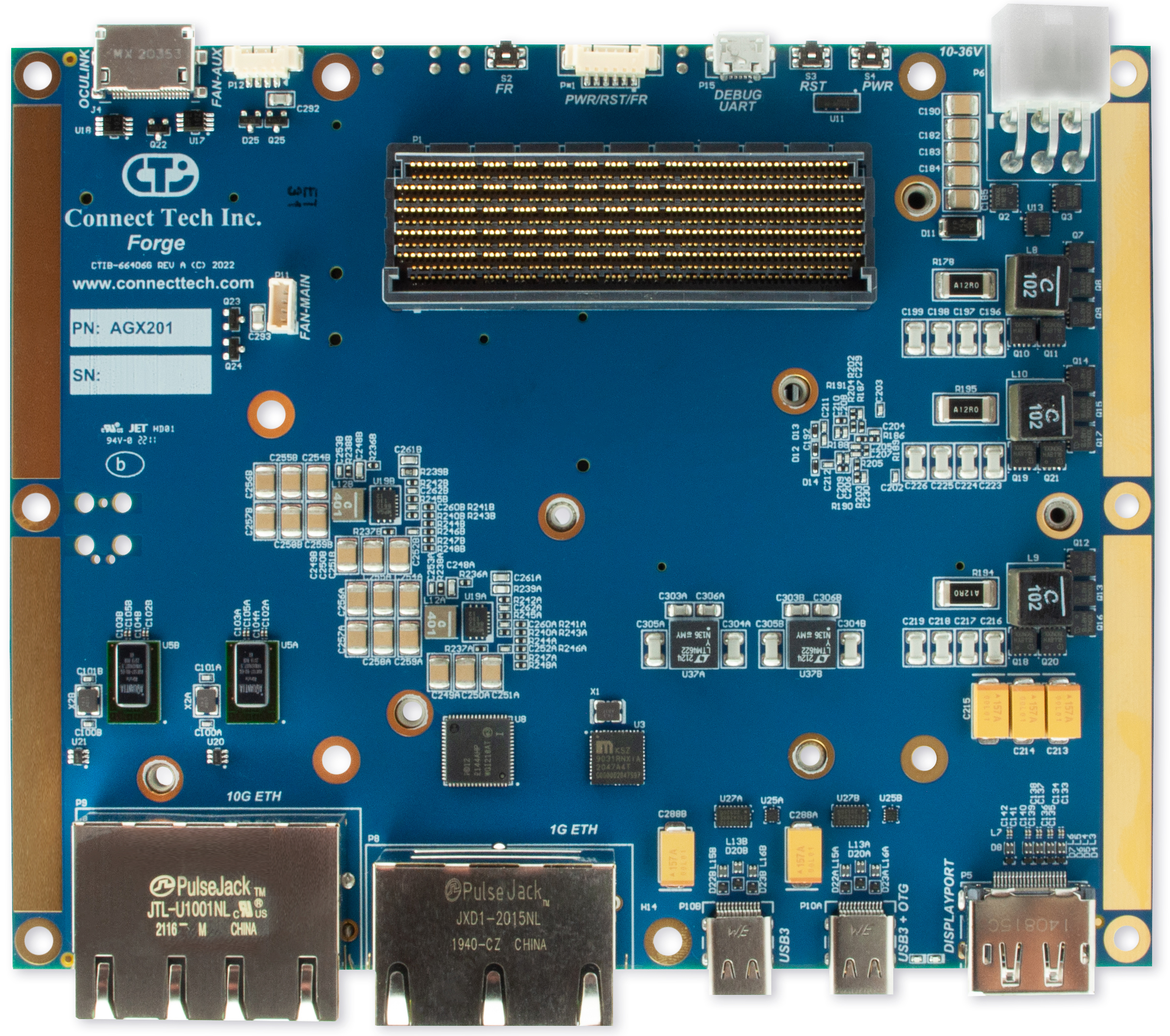

Embedded hardware companies are already supporting these modules with carrier board solutions such as the Connect Tech Forge Carrier for NVIDIA Jetson AGX Orin, which brings out the module’s 16-lane MIPI connector, M.2 Key slots, USB 3.2 interfaces, and Ethernet for system design and expansion.

Of course, that’s not the end. Designing and deploying production systems based on technologies like this is really more of a beginning.

Software capabilities like NVIDIA Metropolis continue to transform these edge AI platforms into what will become the closest thing to human sentience in known existence. Metropolis is yet another application framework that combines visual sensor data from “trillions of endpoints” with AI to help inform system design and operation. In other words, with Metropolis, a factory surveillance camera could become an input that assists with AMR navigation.

If you feel like things are changing, and changing fast, you’re right. They are. And that’s okay. As NVIDIA CEO Jensen Huang said at a press event, “Deep learning is not just a new application like rasterization or texture mapping or some feature of technology. Deep learning and machine learning are a fundamental redesign of computing. It's a fundamentally new way of doing computing, and the implications are quite important.”

"The way we write software, the way that we maintain software, the way that we continuously improve software has changed, number one," he continued. "Number two, the type of software we can write has changed. It's superhuman capabilities, software we could never write before. And the third thing is the entire infrastructure of providing for the software engineers and the operations, what is called MLOps, that is associated with developing this end-to-end fundamentally transforms companies."

Fortunately, kits like Jetson AGX Orin, environments like JetPack and Isaac Sim, and tools developed in none other than the metaverse, like the Omniverse Replicator, are now available to facilitate this transition, streamlining AI development and shrinking time to deployment in ways that were inconceivable just a year ago.

Tell your boss "it's go time."

- Embedded developers can get started today by joining the Jetson developer community at https://developer.nvidia.com/embedded/community.

- Check back for more on the NVIDIA Jetson AGX Orin in our Dev Kit Weekly Hardware Review & Raffle video segment and an upcoming article series on defining and implementing technical interoperability requirements for the industrial metaverse.