SOCAMM: The New Memory Kid on the AI Block

August 28, 2025

The continued evolution of artificial intelligence (AI), particularly in energy-sensitive edge inference and dense AI server workloads, demands radical innovation in system memory. SOCAMM (Small Outline Compression Attached Memory Module) emerges as a purpose-built memory architecture designed by NVIDIA and developed in partnership with Micron, Samsung, and SK Hynix to bridge a critical performance and efficiency gap in AI-centric platforms. By combining the high bandwidth and energy efficiency of LPDDR5X memory with a modular, socketed form factor, SOCAMM redefines the interface between memory and compute.

How does SOCAMM measure up against HBM3E, GDDR7 and DDR5 RDIMM?

Why LPDDR isn’t enough

The acceleration of AI computing, particularly deep learning inference and model deployment at the edge, has placed unprecedented demands on memory subsystems. Historically, memory technologies such as DDR4 and DDR5 RDIMMs have supported general-purpose computing platforms with acceptable performance-per-watt metrics. However, as AI inference increasingly migrates from cloud-bound GPU clusters to resource-constrained edge devices and modular servers, a new memory paradigm is required—one that combines bandwidth, low latency, and efficiency within a small thermal envelope.

LPDDR memory has proven effective in mobile and automotive environments because of its low power characteristics. Yet its soldered integration restricts reuse, repair, and field upgrades, which limits its lifecycle and drives up total cost of ownership (TCO). SOCAMM was conceived to resolve this trade-off: a compact, modular memory interface offering LPDDR-class power efficiency along with the flexibility and serviceability long associated with RDIMMs.

Do we really need SOCAMM?

The demand for SOCAMM is rooted in a confluence of architectural and commercial imperatives. First, edge inference applications—from autonomous machines and industrial AI controllers to smart retail systems and compact inference clusters—require memory solutions with extreme energy efficiency, physical modularity, and field replaceability. LPDDR5X excels in raw efficiency but has traditionally been locked into soldered-down configurations, reducing system adaptability.

Second, the trend toward modular computing, epitomized by NVIDIA's Grace CPU and Grace Hopper Superchips, necessitates high-bandwidth memory solutions that match the power and spatial profile of chiplet-based and AI-native architectures. SOCAMM brings socketed LPDDR5X modules to these platforms, enabling AI OEMs and hyperscalers to scale capacity, swap failed memory units, or extend system lifespan without replacing the entire board.

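Third, the AI market's need for right-sized, sustainable hardware has led to new procurement models emphasizing cost-per-inference, upgradability, and power-aware design. SOCAMM addresses all three: its LPDDR5X efficiency supports power-aware design and lowers the energy cost of each inference, while its socketed modules make memory upgradable in the field.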

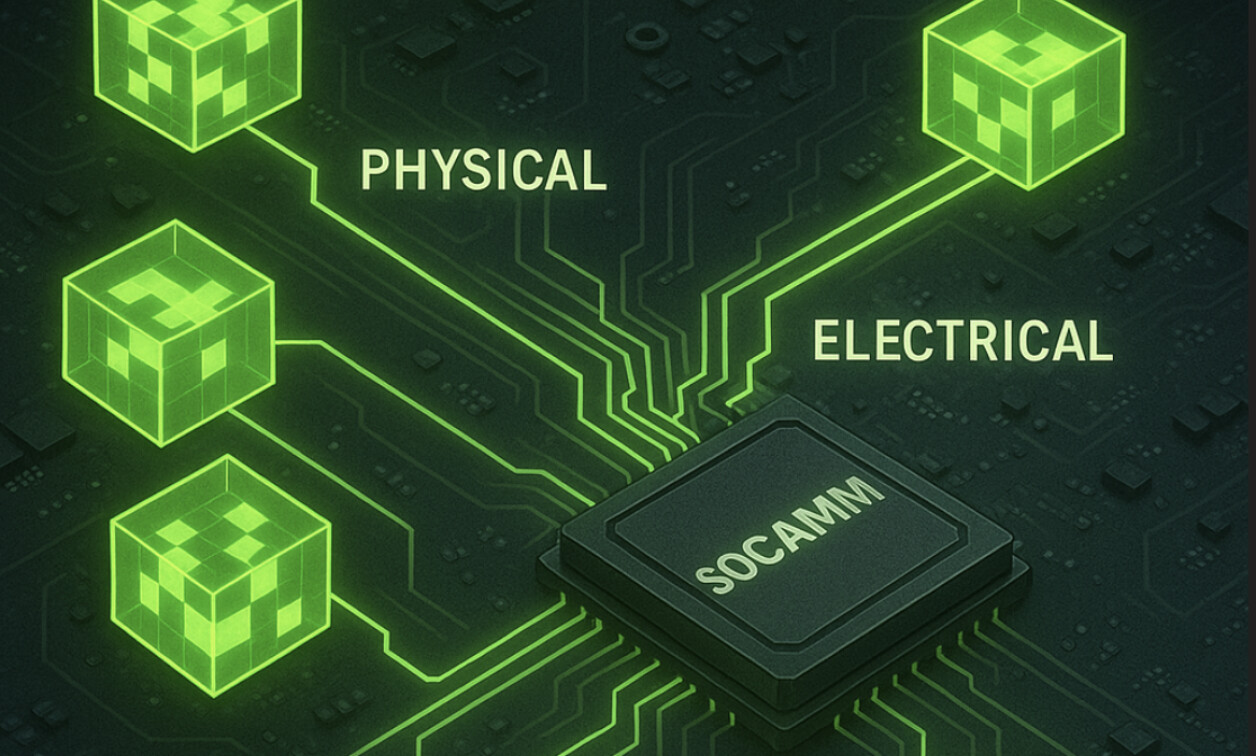

SOCAMM Architecture: Physical, Electrical, and System Integration

So let’s take a look at its physical and electrical features, and how they come together to support AI applications.

Physical Design

SOCAMM modules measure approximately 14 × 90 mm, occupying roughly one-third the footprint of a standard RDIMM. The form factor is designed to sit alongside modular compute packages such as NVIDIA's Grace CPU, enabling tight integration without obstructing airflow or thermally critical components. Modules are attached via screw-secured sockets, allowing easy replacement and enhanced mechanical stability.
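As a quick sanity check on the one-third figure, the sketch below compares the two board areas. The SOCAMM dimensions come from the paragraph above; the RDIMM outline (133.35 mm long, 31.25 mm standard height) is an assumption about a typical DIMM, not a number taken from this article.

```python
# Sketch: compare the board footprint of a SOCAMM module with a standard RDIMM.
# SOCAMM dimensions are from the article; the RDIMM outline is an ASSUMPTION
# (standard-height DIMM: 133.35 mm long x 31.25 mm tall).

SOCAMM_MM = (14.0, 90.0)     # width x length in mm, from the article
RDIMM_MM = (31.25, 133.35)   # assumed standard-height RDIMM outline in mm


def area_mm2(dims: tuple[float, float]) -> float:
    """Rectangular board area in square millimetres."""
    width, length = dims
    return width * length


socamm_area = area_mm2(SOCAMM_MM)
rdimm_area = area_mm2(RDIMM_MM)
ratio = socamm_area / rdimm_area  # fraction of the RDIMM footprint

print(f"SOCAMM: {socamm_area:.0f} mm^2")
print(f"RDIMM:  {rdimm_area:.0f} mm^2")
print(f"ratio:  {ratio:.2f}")
```

With these assumed dimensions the ratio comes out near 0.30, which is consistent with the "roughly one-third" claim.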

Internal Stacking and Capacity

Each SOCAMM module can integrate up to 4 high-density LPDDR5X stacks, with each stack supporting up to 16 memory dies. This results in a total module capacity of 128 GB, comparable to mid-to-high capacity RDIMM configurations. These dies are organized using 64-bit channels to support the high-throughput requirements of AI inference.
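The capacity figure follows from simple multiplication. In this sketch the stack and die counts come from the text, while the 16 Gb (2 GB) per-die density is an assumption, back-calculated so that the total matches the article's 128 GB.

```python
# Sketch: module capacity from stack count, dies per stack, and die density.
# Stack and die counts are from the article; the 16 Gb per-die density is an
# ASSUMPTION back-calculated to match the stated 128 GB module capacity.

STACKS_PER_MODULE = 4
DIES_PER_STACK = 16
DIE_DENSITY_GBIT = 16  # assumed LPDDR5X die density, in gigabits


def module_capacity_gb(stacks: int, dies_per_stack: int, die_gbit: int) -> float:
    """Total module capacity in gigabytes (8 bits per byte)."""
    total_gbit = stacks * dies_per_stack * die_gbit
    return total_gbit / 8


capacity = module_capacity_gb(STACKS_PER_MODULE, DIES_PER_STACK, DIE_DENSITY_GBIT)
print(f"{STACKS_PER_MODULE * DIES_PER_STACK} dies -> {capacity:.0f} GB")
```

Changing `DIE_DENSITY_GBIT` shows how denser dies would raise module capacity without changing the stack layout.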

I/O and Signaling

SOCAMM supports up to 694 contact pads, a substantial increase over the 288 pins of a DDR5 RDIMM. This I/O density enables higher aggregate bandwidth per module. The memory operates at speeds of 6400 to 8533 MT/s, with next-generation SOCAMM 2 modules expected to exceed 10,000 MT/s with LPDDR6X signaling. These modules interface directly with CPUs and accelerators via a low-voltage LPDDR-compatible PHY and routing layer optimized for minimal signal reflection and jitter.
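To connect these data rates to per-module bandwidth, the sketch below applies the usual peak-bandwidth formula: transfers per second times bytes per transfer. The 128-bit module data bus (for example, two of the 64-bit channels mentioned above) is an assumption, not a published SOCAMM specification.

```python
# Sketch: theoretical peak bandwidth of one module from its data rate.
# The MT/s figures are from the article; the 128-bit data-bus width is an
# ASSUMPTION (e.g. two 64-bit channels), not a published SOCAMM figure.

BUS_WIDTH_BITS = 128  # assumed module data-bus width


def peak_bandwidth_gbs(mt_per_s: float, bus_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s = transfers per second x bytes per transfer."""
    bytes_per_transfer = bus_bits / 8
    return mt_per_s * 1e6 * bytes_per_transfer / 1e9


# 6400 and 8533 MT/s are today's LPDDR5X rates; 10000 MT/s is the SOCAMM 2 target.
for rate in (6400, 8533, 10000):
    print(f"{rate:>6} MT/s -> {peak_bandwidth_gbs(rate):6.1f} GB/s")
```

Under this assumption, the current 6400 to 8533 MT/s range works out to roughly 102 to 137 GB/s, in line with the ~100–150 GB/s listed for SOCAMM in the comparison table, while 10,000 MT/s would reach about 160 GB/s.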

Power and Thermal Profile

LPDDR5X technology enables SOCAMM to operate at ~1.05 V, delivering power savings of 30–35% compared to DDR5 RDIMM solutions. Passive thermal designs suffice in many edge environments, while compact servers may employ directed airflow or vapor chamber solutions depending on the system envelope.
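A first-order model helps show where such savings can and cannot come from. Dynamic CMOS power scales roughly as P ∝ C·V²·f, so the sketch below compares 1.05 V (from the text) with an assumed 1.1 V DDR5 supply, holding capacitance and frequency constant. It deliberately ignores I/O voltage, refresh, register/buffer chips, and power-down states, so it is an illustration rather than a power model.

```python
# Sketch: first-order dynamic power scaling with supply voltage, P ~ C * V^2 * f.
# ASSUMPTIONS: identical capacitance and frequency; 1.05 V for LPDDR5X (from the
# article) and 1.1 V for the DDR5 supply. I/O voltage, refresh, buffers, and
# power-down modes are ignored.


def relative_dynamic_power(v_new: float, v_ref: float) -> float:
    """Ratio of dynamic power at v_new to power at v_ref, C and f held constant."""
    return (v_new / v_ref) ** 2


LPDDR5X_V = 1.05
DDR5_V = 1.1

ratio = relative_dynamic_power(LPDDR5X_V, DDR5_V)
savings_pct = (1 - ratio) * 100
print(f"power ratio {ratio:.3f}, savings {savings_pct:.1f}%")
```

Under these assumptions the supply-voltage difference alone yields a saving of only about 9%, which suggests that most of the quoted 30–35% would come from other LPDDR5X traits, such as its lower-swing I/O and aggressive power-down modes, rather than from the core supply voltage.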

These features make SOCAMM well suited to the following application domains:

- Compact AI workstations (e.g., NVIDIA “Project Digits”)

- Grace Blackwell server platforms

- Edge-AI inferencing modules in medical, automotive, and manufacturing

- Data center cold-storage memory expansion (hyperscalers)

Comparative Analysis: SOCAMM vs. HBM, GDDR7, DDR5 RDIMM

The following table provides a detailed comparison of SOCAMM against key memory technologies across technical and commercial metrics.

| KPI | SOCAMM (LPDDR5X) | HBM3E/4 (Stacked) | GDDR7 | DDR5 RDIMM |
|---|---|---|---|---|
| Form Factor | 14×90 mm, socketed | Co-packaged/soldered | On-board BGA | DIMM (long) |
| Capacity per Module | Up to 128 GB | Up to ~36 GB/stack | Up to 32 GB (typ.) | Up to 256 GB+ |
| Peak Bandwidth / Module | ~100–150 GB/s | 0.8–1.2+ TB/s | ~150–200 GB/s | ~50–80 GB/s |
| Data Rate (per pin) | LPDDR5X 6400–8533 MT/s | Very high | 28–32+ Gbps | 4800–6400 MT/s |
| Power Efficiency | Excellent (LPDDR-class) | Good–Moderate | Good | Moderate–Poor |
| Latency (ns) | ~30–45 | ~5–10 | ~10–20 | ~70–90 |
| I/O Count | Up to ~694 pads | Very high TSV/I/O | High | 288–560 pins (var.) |
| ECC / RAS | Platform-dependent | Yes (stack-level + link) | Limited/platform-dep. | Yes (server-grade) |
| Thermal Profile | Low–Moderate | High (aggressive cooling) | Moderate | Moderate–High |
| Modularity | High (socketed) | None | None | High (slot-based) |
| Upgrade Path | Direct swap/scale | Board/module respin | Board rework | DIMM swap |
| Cost / GB | Moderate | Very High | Moderate–High | Low–Moderate |
| Cost / GB/s | Favorable for inference | High | Moderate | Moderate |
| Supply Maturity (2025) | Early ramp | Mature | Initial ramp | Mature |
| Standardization | Emerging | JEDEC | JEDEC | JEDEC |
| Primary Use | AI inference, edge, AI PC | GPU training/HPC | Graphics/AI accelerators | General compute servers |
| Board Area Footprint | Small | Co-packaged | Moderate | Large |
| Sustainability Impact | High (upgradeable) | Low | Low | Moderate |

What lies ahead for SOCAMM?

Micron leads LPDDR5X SOCAMM production for 2025 deployments, and Samsung and SK Hynix are expected to launch SOCAMM 2 modules in 2026. SOCAMM is projected to represent 25–30% of AI inference memory by 2027. JEDEC standardization is underway, which should ease long-term platform adoption.

SOCAMM represents a pivotal memory architecture combining modularity, power efficiency, and scalability for modern AI workloads. Its evolution into SOCAMM 2 with LPDDR6X marks a long-term trend toward flexible, sustainable, and high-performance memory platforms in AI computing.