Meeting the Demanding Energy Needs of AI Servers with Advanced Technology

October 23, 2025

Artificial intelligence (AI) is rapidly transforming industries, driving innovation in everything from healthcare and finance to autonomous vehicles and natural language processing. This revolution is powered by AI servers that deliver unprecedented computational performance.

However, the exponential growth in AI workloads—including the widespread adoption of large language models (LLMs)—has led to a dramatic increase in power consumption, creating new challenges for data centers worldwide. As AI models become more complex and the number of AI servers grows, the demand for robust, efficient, and scalable power supplies has never been greater.

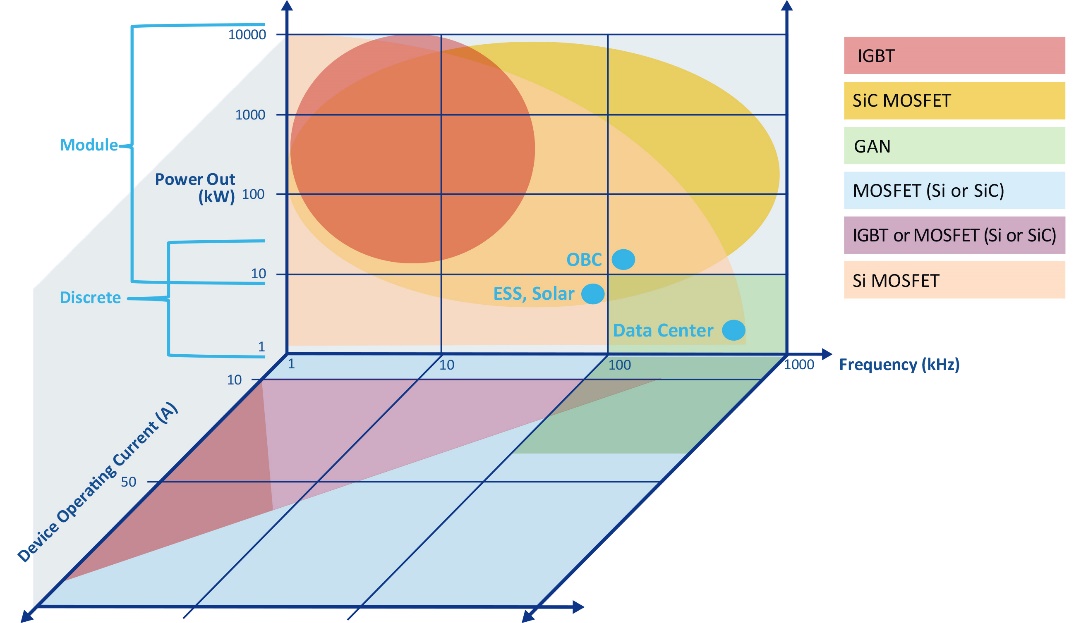

Modern data centers are evolving to meet these escalating demands. The focus is shifting toward higher energy efficiency, advanced power management, and the integration of wide-bandgap semiconductors such as silicon carbide (SiC) and, in some cases, gallium nitride (GaN) to reduce energy losses.

Security remains paramount, particularly as AI applications handle vast quantities of sensitive data. This environment demands robust measures, such as hardware-based encryption and secure boot mechanisms, alongside vigilant real-time threat detection. To address the computational intensity of AI inference and training, especially with workloads driven by large language models, data centers are embracing new approaches in power delivery and voltage regulation, as well as implementing advanced thermal management. The need for scalability and flexibility has become increasingly important, prompting facilities to adopt modular infrastructure and explore innovative cooling strategies. As these trends continue to evolve, AI-driven data centers are positioned to achieve greater efficiency and security while maintaining the agility necessary to support future advancements.

Challenges in Powering AI Servers

Escalating Power Demands and Density

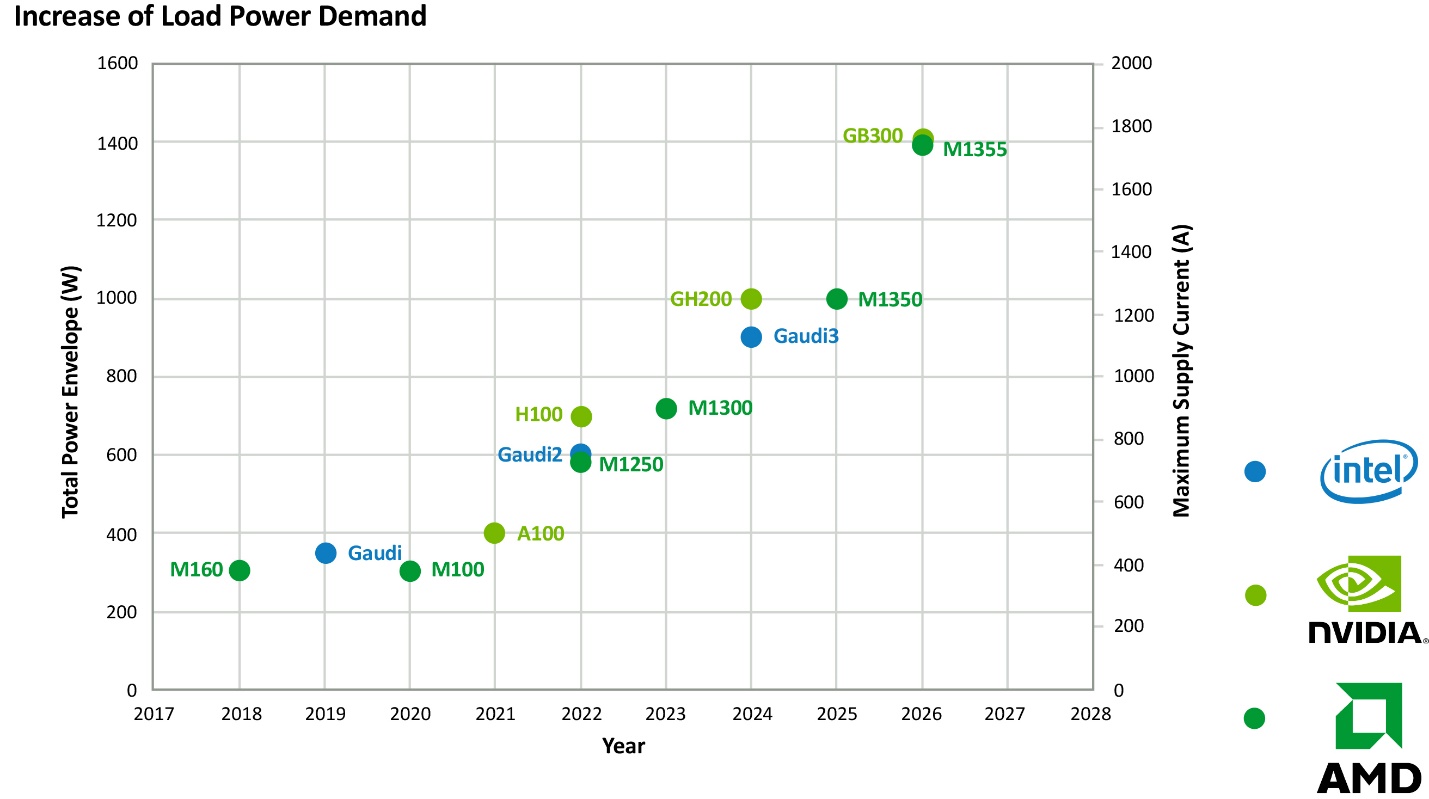

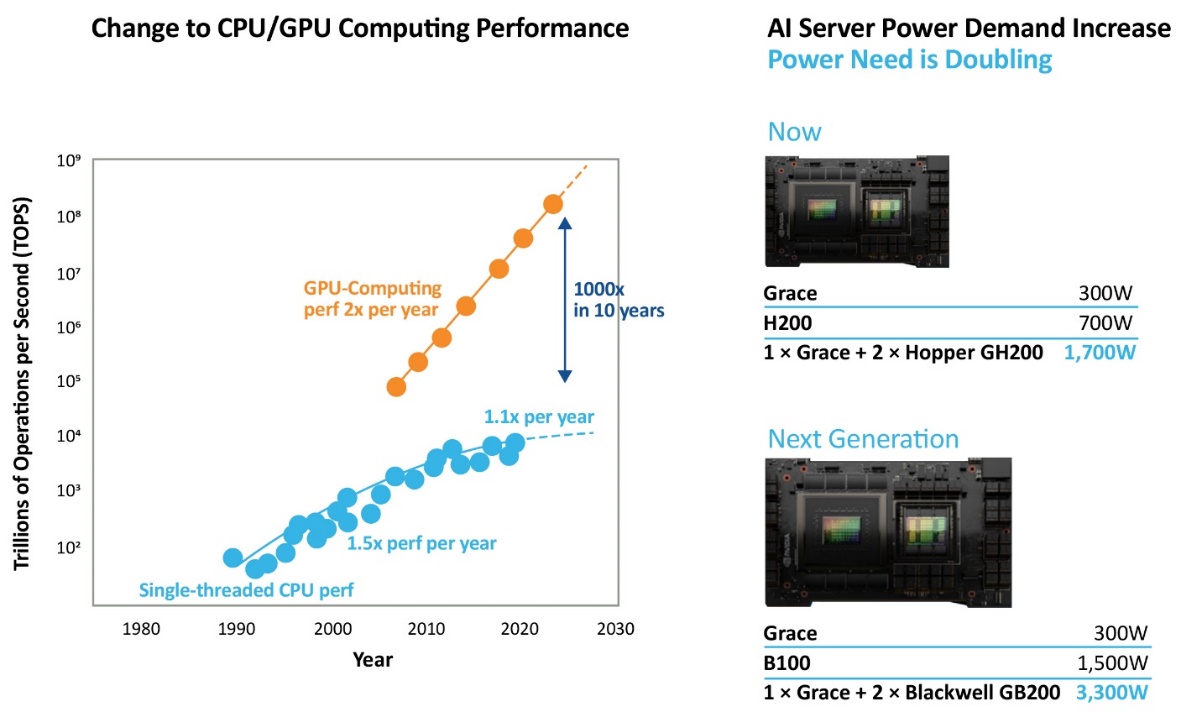

AI servers are the backbone of modern data centers, driving advanced tasks such as deep learning, machine learning, large language models and real-time analytics. These servers require significantly more power than traditional enterprise servers. As data centers expand their AI capabilities, they face the challenge of supplying sufficient power while maintaining efficiency to manage costs and minimize environmental impact. Energy consumption in data centers has surged, with AI workloads potentially doubling energy use compared to traditional tasks. The global energy demand for data centers is growing by 10-15% annually, with AI now accounting for 10-20% of total energy use. AI accelerator servers carrying heavy computational loads are the primary energy consumers within this infrastructure.

The shift from traditional CPU-centric architectures to GPUs and specialized accelerators is driving a continued surge in power demand. Modern AI servers now consume two to three times more power than traditional enterprise servers, with high-performance AI racks exceeding 50 kW per rack compared to 5-15 kW per rack in conventional data centers. GPUs alone can consume 300-700 W per card. As AI workloads scale, next-generation data centers must implement advanced power distribution architectures, high-efficiency voltage regulators, and innovative cooling solutions to sustain operational efficiency and reliability.
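To put these figures in perspective, the short Python sketch below estimates how many GPU cards a given rack power budget could feed. The function name `gpus_per_rack` and the 25% overhead share (for CPUs, memory, fans, and conversion losses) are illustrative assumptions rather than figures from any specific deployment. Only the 50 kW, 15 kW, and 300-700 W values come from the text above.

```python
def gpus_per_rack(rack_budget_kw: float, gpu_watts: float,
                  overhead_fraction: float = 0.25) -> int:
    """Estimate how many GPU cards fit within a rack power budget.

    overhead_fraction is a hypothetical share of rack power reserved for
    everything that is not a GPU (CPUs, memory, fans, conversion losses).
    """
    if gpu_watts <= 0:
        raise ValueError("gpu_watts must be positive")
    if not 0 <= overhead_fraction < 1:
        raise ValueError("overhead_fraction must be in [0, 1)")
    usable_watts = rack_budget_kw * 1000 * (1 - overhead_fraction)
    return int(usable_watts // gpu_watts)


if __name__ == "__main__":
    # A 50 kW AI rack versus a 15 kW conventional rack, both with 700 W cards.
    print(gpus_per_rack(50, 700))
    print(gpus_per_rack(15, 700))
```

Under these assumptions, the same 700 W card that fits dozens of times into a 50 kW rack fits only a handful of times into a conventional 15 kW rack, which is why rack-level power distribution becomes the limiting factor.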

Microchip Technology addresses these escalating power demands with a comprehensive portfolio of high-efficiency MOSFETs, SiC FETs, and intelligent gate drivers. These advanced power devices are engineered to deliver superior switching performance, reduced conduction and switching losses, and enhanced thermal management, all of which are critical for supporting the high power densities required by AI servers. Microchip’s SiC MOSFETs enable higher switching frequencies, which reduce the size and weight of magnetic components, resulting in more compact and efficient power supply designs. Intelligent gate drivers offer precise control, integrated protection features, and robust diagnostics, ensuring reliable operation even under the most demanding computational loads. This enables data centers to deploy more powerful AI servers without exceeding power or thermal limits.

Efficiency and Thermal Management

As power densities increase, delivering more power within the same or smaller physical footprint becomes a top priority. Traditional power supply units (PSUs) and air-cooling methods are reaching their operational limits, as higher power densities generate more heat and increase the risk of energy losses through inefficiency. Inefficient power conversion not only raises operational costs but also contributes to a larger carbon footprint, which is increasingly scrutinized by regulators and customers alike. Effective thermal management is essential to prevent overheating, maintain system reliability, and extend the lifespan of critical components. Data centers must find ways to maximize efficiency and manage heat dissipation, all while minimizing environmental impact and meeting sustainability goals.
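The link between conversion efficiency and heat can be made concrete with a small calculation. The sketch below assumes a simple model in which all power lost in conversion becomes heat that the cooling system must remove. The 90% and 96% efficiency values are hypothetical examples, not measured figures for any product.

```python
def conversion_loss_watts(output_watts: float, efficiency: float) -> float:
    """Power dissipated as heat when delivering output_watts at a given efficiency.

    Uses input = output / efficiency, so loss = input - output.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    input_watts = output_watts / efficiency
    return input_watts - output_watts


if __name__ == "__main__":
    rack_load_w = 50_000  # the 50 kW rack figure cited earlier in the article
    for eta in (0.90, 0.96):  # hypothetical efficiencies for comparison
        loss = conversion_loss_watts(rack_load_w, eta)
        print(f"efficiency {eta:.0%}: about {loss:.0f} W of heat")
```

In this simplified model, raising efficiency from 90% to 96% on a 50 kW load cuts the heat generated by conversion by more than half, which reduces both the energy bill and the cooling burden.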

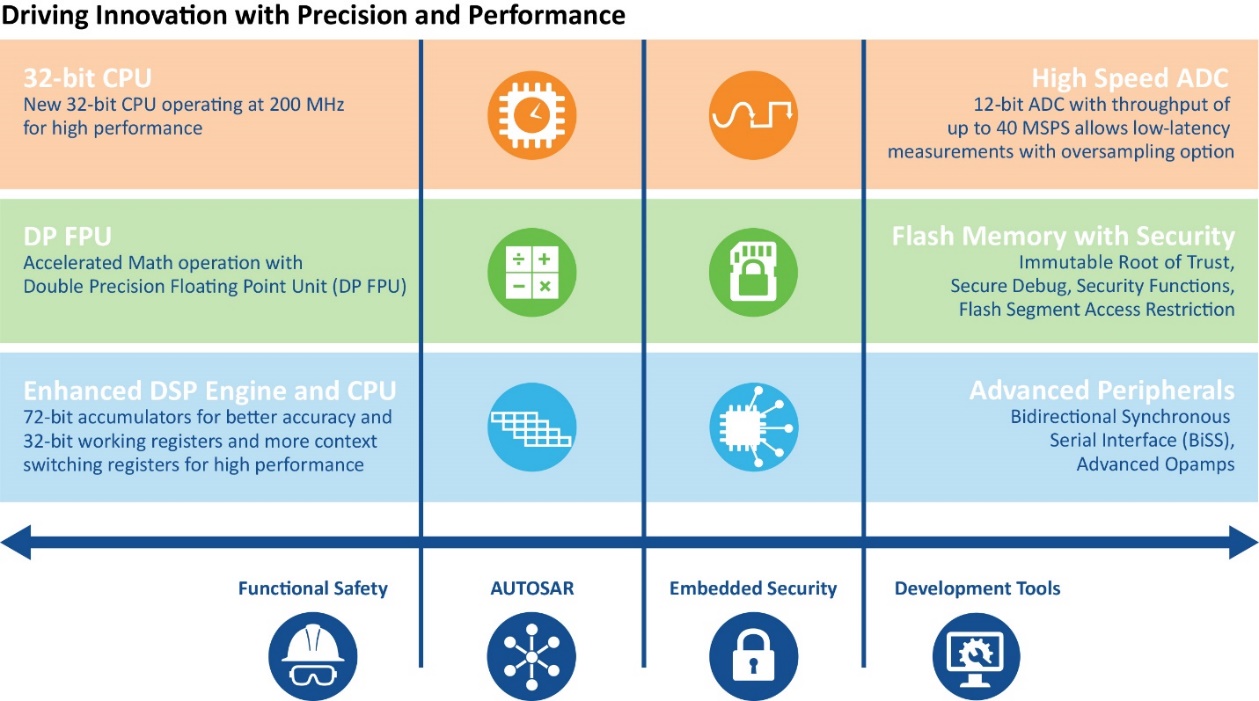

Microchip’s dsPIC® Digital Signal Controllers (DSCs) are at the heart of its digital power solutions, combining the real-time control of a microcontroller with the high-speed mathematical processing of a DSP. The dsPIC33A family, for example, offers high clock speeds, advanced Pulse Width Modulation (PWM) outputs, and high-resolution ADCs, enabling precise control of power stages, fast transient response, and the implementation of sophisticated digital control algorithms.

Intelligent thermal management and power monitoring may include temperature sensors, fan controllers, and power monitoring ICs that enable real-time tracking of thermal and electrical parameters. These devices can be seamlessly integrated with DSCs to implement closed-loop cooling strategies, optimize fan speeds, and trigger alerts or shutdowns in response to abnormal conditions, ensuring safe and efficient operation of high-density AI servers.
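As a rough illustration of a closed-loop cooling strategy, the Python sketch below maps a temperature reading to a fan duty cycle and a status flag. It is a minimal proportional policy written for readability, not firmware for any particular controller. Every threshold, the gain, and the names `FanPolicy` and `control_step` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FanPolicy:
    # All values below are illustrative assumptions, not vendor recommendations.
    target_c: float = 60.0     # temperature the loop tries to hold
    alert_c: float = 85.0      # raise an alert at or above this reading
    shutdown_c: float = 95.0   # request shutdown at or above this reading
    min_duty: float = 20.0     # fan duty cycle floor, in percent
    max_duty: float = 100.0    # fan duty cycle ceiling, in percent
    gain: float = 4.0          # percent of duty added per degree C above target


def control_step(temp_c: float, policy: FanPolicy = FanPolicy()) -> tuple[float, str]:
    """Return (fan duty in percent, status) for one temperature sample."""
    if temp_c >= policy.shutdown_c:
        return policy.max_duty, "shutdown"
    # Proportional term: more airflow the further we are above the target.
    duty = policy.min_duty + policy.gain * max(0.0, temp_c - policy.target_c)
    duty = min(policy.max_duty, duty)
    status = "alert" if temp_c >= policy.alert_c else "ok"
    return duty, status


if __name__ == "__main__":
    for reading in (50.0, 70.0, 90.0, 96.0):
        print(reading, control_step(reading))
```

A production loop would typically add filtering, hysteresis, and an integral term, but the structure is the same: sample, compute the actuator command, and escalate when readings cross protective limits.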

Security and Data Integrity

With AI servers processing vast amounts of sensitive data, robust security protocols are essential. Heightened risks of cyber threats and data breaches demand the implementation of advanced hardware-based security measures and secure boot mechanisms. Organizations are also required to adhere to rigorous industry standards, such as NIST 800-193, Common Criteria (CC), and FIPS 140-3. The Open Compute Project (OCP) has set high security standards, focusing on hardware root-of-trust, firmware integrity, and secure boot processes. These safeguards help ensure that AI data servers can verify and authenticate hardware and software before operation, reducing the risk of cyber threats.

Microchip integrates robust security features directly into its controllers and power management ICs. These include hardware root-of-trust, secure boot, cryptographic accelerators, and support for industry standards. Hardware root-of-trust ensures that only verified firmware and software can run on the system, while secure boot mechanisms prevent unauthorized code from executing during startup. Cryptographic accelerators enable fast, hardware-based encryption and decryption, protecting sensitive data both at rest and in transit. These features help data centers comply with modern security requirements, safeguard against evolving cyber threats, and maintain the integrity and confidentiality of AI workloads.
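The idea behind verifying firmware before it runs can be sketched in a few lines of Python. This is a deliberately simplified illustration: real secure boot implementations typically verify a digital signature against a key anchored in hardware, whereas this sketch only compares a SHA-256 digest with a trusted reference value that is assumed to live in immutable storage. The function names are hypothetical.

```python
import hashlib
import hmac


def firmware_digest(image: bytes) -> bytes:
    """Compute the SHA-256 digest of a firmware image."""
    return hashlib.sha256(image).digest()


def verify_before_boot(image: bytes, trusted_digest: bytes) -> bool:
    """Return True only if the image matches the trusted reference digest.

    hmac.compare_digest performs a constant-time comparison, which avoids
    leaking how many leading bytes matched.
    """
    return hmac.compare_digest(firmware_digest(image), trusted_digest)


if __name__ == "__main__":
    good_image = b"example firmware image"        # placeholder contents
    reference = firmware_digest(good_image)       # assumed stored in immutable memory
    tampered = good_image + b"\x00"

    print(verify_before_boot(good_image, reference))
    print(verify_before_boot(tampered, reference))
```

The design point is that the check happens before any of the image executes, so a modified image is rejected rather than detected after the fact.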

Scalability and Flexibility

The increasing complexity of AI workloads is driving the need for greater scalability and flexibility in AI data servers. The rise of large language models, real-time analytics, and AI-driven applications requires infrastructure that can be dynamically scaled to handle surging computational demands. AI training clusters are expanding rapidly, with some hyperscale data centers now deploying GPU architectures that exceed 100 kW per rack. The shift toward modular server architecture allows data centers to upgrade and reconfigure hardware without a complete system overhaul, reducing costs and improving adaptability. Composable infrastructure solutions enable resources like computing, storage, and networking to be dynamically allocated based on workload demands, ensuring AI data servers can scale seamlessly as new models and applications emerge.

Microchip’s modular power management solutions—including digital controllers, power modules, and reference designs—are designed to support the scalability and flexibility required by modern AI workloads. These solutions can be easily integrated into modular server architectures, allowing data centers to scale power delivery infrastructure in line with computational demands. Composable infrastructure is supported by Microchip’s digital controllers, enabling dynamic allocation of computing, storage and networking resources as workloads evolve. This approach reduces costs, improves adaptability, and ensures that AI data servers can scale seamlessly as new models and applications emerge.

Comprehensive Development Ecosystem

The rapid evolution of AI demands that data center operators and server manufacturers accelerate their development cycles to remain competitive. Designing, validating, and deploying advanced power systems for AI servers is complex and can lead to delays and increased costs without the right resources. To minimize time-to-market and reduce design risk, access to proven reference designs, robust development tools, and expert technical support is essential.

Conclusion

The exponential growth in AI server power requirements presents significant challenges for data center operators and technology providers. By adopting advanced power devices, innovative cooling solutions, robust security protocols, and intelligent digital power management, the industry can effectively address these challenges. Microchip Technology’s high-efficiency MOSFETs, sophisticated gate drivers, and dsPIC DSCs with advanced DSP capabilities are at the forefront of enabling power supplies that deliver exceptional performance and energy efficiency.

By utilizing advanced power devices, digital controllers, integrated security features, and comprehensive development tools, data center operators and server manufacturers are able to design power supply systems that address the rigorous demands of AI workloads, including those driven by large language models. These technologies enable higher efficiency and power density, enhanced reliability and thermal management, robust security, scalability, flexibility, and accelerated development cycles. Continued innovation across the industry is helping next-generation data centers reach new benchmarks in performance, efficiency, and security, supporting the ongoing evolution of AI infrastructure.

Josue Navarro brings five years of experience in the semiconductor industry. He began his career as a process engineer at Intel and has since transitioned into product marketing with Microchip Technology, where he supports both customers and his company in raising awareness and developing system designs utilizing Microchip’s dsPIC Digital Signal Controllers (DSCs). Navarro holds a Bachelor of Science in Electrical Engineering from Arizona State University.