Fog computing with minimal resources

August 11, 2016

Edge devices don’t always have the compute power to make compute-intensive decisions. Hence, data is collected at the edge and transferred to the cloud, where the heavy compute engines reside. The numbers are crunched, and the results are sent back to the edge, where some action takes place. The upside to such a solution is that the edge devices can remain relatively inexpensive, because the number crunching happens where the processing power already lies: in the cloud.

This approach has two downsides. First, you may be pushing large amounts of data up to the cloud, which can be expensive, since data transfer is one way the carriers make their money. Second, and potentially more important depending on the application, there’s a delay to send the data, compute, and receive the results. In a real-time, mission-critical application, such as in medical or automotive systems, you may not have the luxury of time.

Thanks to Sendyne’s dtSolve, there’s a way to handle those computations at the edge without piling on compute resources. The technology uses physics-based models to accurately predict how a system will behave in the future.

In the words of John Milios, Sendyne’s CEO, “If you can model the physics of the system you wish to control, that model can accurately predict the future.”

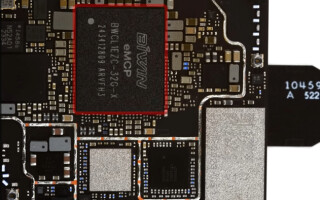

You describe your model as a set of differential equations, then set your parameters. And that’s it. dtSolve runs on a PC or on a simple microcontroller-based system, such as one built around an ARM Cortex-M4. As a result, the data is processed and the predictions are produced at the edge, in near real time. That’s fog computing, just like they drew it up.
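To make the "differential equations plus parameters" idea concrete, here is a minimal sketch of what predicting the future from a physics model means in practice. It is not dtSolve’s API or algorithm, which this article doesn’t describe; it uses the classic fixed-step fourth-order Runge-Kutta (RK4) method as a stand-in. The model (an RC circuit charging toward a supply voltage), its parameter values, and the names `rk4_step`, `predict`, and `rc_model` are all hypothetical and chosen for illustration. It’s written in Python for readability; code on a Cortex-M4 would typically be C.

```python
from typing import Callable, List

# State vector: one float per state variable of the model.
State = List[float]
# Right-hand side of the ODE system y' = f(t, y).
Deriv = Callable[[float, State], State]


def rk4_step(f: Deriv, t: float, y: State, h: float) -> State:
    """Advance y' = f(t, y) by one step of size h using classic RK4."""
    k1 = f(t, y)
    k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
    return [yi + h / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def predict(f: Deriv, y0: State, t0: float, t_end: float, h: float) -> State:
    """Integrate the model from t0 to t_end and return the predicted state."""
    y = list(y0)
    n_steps = round((t_end - t0) / h)
    for i in range(n_steps):
        # Compute t from the step index to avoid accumulating rounding drift.
        y = rk4_step(f, t0 + i * h, y, h)
    return y


# Hypothetical model parameters (assumed values, not from the article).
R = 1_000.0   # resistance in ohms
C = 1e-3      # capacitance in farads, so the time constant R*C is 1 s
V_IN = 5.0    # supply voltage in volts


def rc_model(t: float, y: State) -> State:
    """dV/dt = (V_IN - V) / (R*C): capacitor voltage charging toward V_IN."""
    v = y[0]
    return [(V_IN - v) / (R * C)]
```

Calling `predict(rc_model, [0.0], 0.0, 1.0, 0.01)` forecasts the capacitor voltage one time constant ahead, which should land very close to the analytic value of 5(1 − e^−1), about 3.16 V. The point of the sketch is the workload: each step needs only a handful of multiply-adds per state variable, which is the kind of arithmetic a small microcontroller can keep up with.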

Check out the video. It tells the story a little better than I do.