Ensuring a safe medical design

March 27, 2017


Until recently, developers and manufacturers of medical devices have not been required to consider security in their products. New guidance from the U.S. Food and Drug Administration (FDA) and expanded European Union requirements for personal data protection now make security design in medical devices a necessity. While IT network attacks get most of the press, physical attacks, such as accessing a maintenance serial port, can be just as dangerous.

Security experts have reported weaknesses in hospital and clinic networks for several years. Even though these networks carry extremely sensitive patient data and connect life-critical equipment, they continue to prove easy to infiltrate. While networking equipment such as routers may be state of the art, the medical equipment on the network often has little or no security protection. Breaching a device with malware can open a backdoor that gives remote hackers access to sensitive data across the network and, worse, can cause the device itself to operate in a dangerous manner.

Since 2015, there has been much regulatory activity on security that affects medical device design. In the EU, the recently adopted General Data Protection Regulation (GDPR), which applies broadly to any system that handles personal data, sets strict requirements for protecting that data. Additional regulations specific to medical devices and in vitro diagnostic devices are soon to be released.

For device designers, the FDA's more specific recommendations provide more useful guidance on how to meet security requirements. The FDA has issued formal guidance on both premarket submissions and postmarket management of cybersecurity in medical devices. A key item in the premarket guidance is that security hazards should be part of the risk analysis, while the postmarket guidance explicitly calls for secure software update procedures. The postmarket guidance also states that the FDA typically will not need to clear or approve medical device software changes made solely to update cybersecurity features in the field, which enables a fast response to emerging threats. Going even further, the FDA issued a Safety Communication that was triggered, for the first time, by the cybersecurity vulnerabilities of one type of infusion pump. It recommended discontinuing the use of several previously approved devices solely on the basis of their vulnerability to attack.

What should be done to ensure a secure medical design

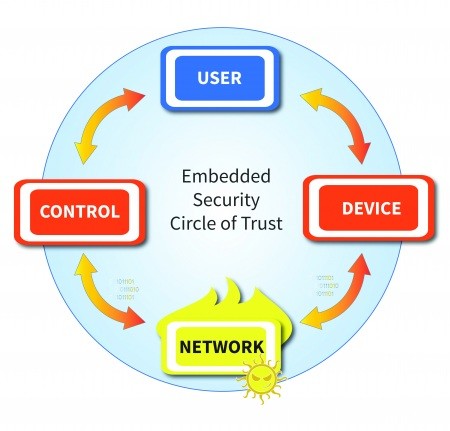

While medical equipment developers are experienced at building systems that meet functional safety requirements, cybersecurity adds another dimension to the design process. It is advisable to consult experts to evaluate the tradeoffs involved in achieving an appropriate security level for the product. INTEGRITY Security Services (ISS), a Green Hills Software company, helps clients address FDA and EU requirements with an end-to-end embedded security design. ISS supports medical device developers in applying the following five rules of embedded security.

Rule 1: Communicate without trusting the network

An increasing percentage of medical devices are always connected, and many devices must be connected for maintenance or upgrades. Protecting patient data is critical, but so is protecting fundamental operating parameters, such as maximum dose limits on infusion pumps. Even a device that holds no sensitive data can, once connected to a hospital network, become a target that hackers use to penetrate the network.

[Figure 1 | Don’t trust the network]

To prevent a security breach, it is necessary to authenticate all endpoints, including the device itself, any human users interacting with the device, and any other connected systems. Secure designs should never assume that users and control software are valid just because received messages have the correct format. In the infusion pumps that were the subject of the FDA's safety communication, a hacker was able to gain access to the network, reverse engineer the protocol, and send properly formed commands that would have allowed a lethal dose of medication to be administered to the patient. Authentication prevents medical devices from executing commands that originate from unknown sources.
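One common way to authenticate commands, sketched below, is to attach a message authentication code (MAC) computed with a key that only legitimate controllers hold. The article does not prescribe a mechanism: HMAC-SHA256, the 12-byte message layout, the sequence counter used to reject replays, and the `MAX_DOSE_UL` limit are all assumptions for illustration, and `sign_command` and `PumpCommandVerifier` are hypothetical names.

```python
import hashlib
import hmac
import struct

MAX_DOSE_UL = 5_000  # hypothetical per-command dose limit, in microliters
_FORMAT = ">QI"  # hypothetical layout: 8-byte sequence counter, 4-byte dose
_PAYLOAD_LEN = struct.calcsize(_FORMAT)  # 12 bytes
_TAG_LEN = hashlib.sha256().digest_size  # 32 bytes


def sign_command(key: bytes, counter: int, dose_ul: int) -> bytes:
    """Sender side: append an HMAC-SHA256 tag to the command payload."""
    payload = struct.pack(_FORMAT, counter, dose_ul)
    return payload + hmac.new(key, payload, hashlib.sha256).digest()


class PumpCommandVerifier:
    """Device side: act only on commands that are authentic, fresh, and within limits."""

    def __init__(self, key: bytes) -> None:
        self._key = key
        self._last_counter = 0

    def accept(self, message: bytes) -> bool:
        if len(message) != _PAYLOAD_LEN + _TAG_LEN:
            return False
        payload, tag = message[:_PAYLOAD_LEN], message[_PAYLOAD_LEN:]
        expected = hmac.new(self._key, payload, hashlib.sha256).digest()
        # Correct format is not enough: the tag proves the sender holds the key
        if not hmac.compare_digest(tag, expected):
            return False
        counter, dose_ul = struct.unpack(_FORMAT, payload)
        if counter <= self._last_counter:
            return False  # replayed or out-of-order command
        if dose_ul > MAX_DOSE_UL:
            return False  # authenticated, but outside the safe operating limit
        self._last_counter = counter
        return True
```

A forged command can be perfectly well formed and still be rejected, because an attacker cannot compute a valid tag without the key. The dose check is applied even to authenticated commands, so a compromised or buggy controller still cannot exceed the device's limit. A shared symmetric key is the simplest option; certificate-based schemes avoid distributing one secret to many endpoints.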

Rule 2: Ensure software is not tampered with

There are many ways for malware to be injected into a device, including:

- Using a hardware debug interface such as JTAG

- Accessing test and debug interfaces, such as Telnet and FTP

- Exploiting control protocols that were developed without considering security

- Spoofing a software update on a device that accepts updates without verifying them

System software should not be trusted until it is proven trustworthy. The point where authentication starts is called the root of trust; for high-assurance systems, it must reside in hardware or in immutable memory. A secure boot process starts at the root of trust and verifies the authenticity of each software layer before allowing it to execute.
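The chain-of-trust idea can be sketched as follows. This is a simplification under stated assumptions: each stage carries a SHA-256 digest of the next stage, and only the first digest lives in the immutable root of trust. Production secure boot normally verifies digital signatures instead, so that later stages can be updated without changing the root. `BootStage`, `stage_digest`, and `secure_boot` are hypothetical names.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BootStage:
    name: str
    image: bytes
    # Expected digest of the next stage; covered by this stage's own digest
    next_digest: Optional[bytes] = None


def stage_digest(stage: BootStage) -> bytes:
    """SHA-256 over the image hash and the next-stage digest the stage carries."""
    h = hashlib.sha256(hashlib.sha256(stage.image).digest())  # fixed-length prefix
    h.update(stage.next_digest or b"")
    return h.digest()


def secure_boot(root_of_trust: bytes, stages: List[BootStage]) -> List[str]:
    """Verify each stage before it runs; halt at the first failure."""
    expected: Optional[bytes] = root_of_trust  # held in hardware or immutable memory
    executed: List[str] = []
    for stage in stages:
        if expected is None or not hmac.compare_digest(stage_digest(stage), expected):
            break  # unverified code never runs
        executed.append(stage.name)  # a real loader would transfer control here
        expected = stage.next_digest
    return executed
```

Because each expected digest is itself covered by the already-verified stage that carries it, an attacker cannot swap in a new image without also changing a digest that was checked earlier in the chain.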

[Figure 2 | Authenticate software from the root]

Secure boot verifies the source and integrity of software using digital signatures. Software is signed at release and verified each time it is loaded. This ensures that only authentic, unmodified software runs on the device, so the device operates at the quality level to which it was developed. Because secure boot prevents tampered software from being loaded, it also helps keep the device from being used to attack the larger network, and it supports new FDA guidelines recommending that a device be able to detect and report invalid software.
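Signing at release and verifying at load can be illustrated with a Lamport one-time signature, chosen here only because it needs nothing beyond the Python standard library. This is a swapped-in technique, not necessarily what any particular product uses: real devices typically use RSA or ECDSA, or stateful hash-based schemes such as LMS or XMSS. A Lamport key must never sign more than one image. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import List, Tuple

KeyHalf = List[Tuple[bytes, bytes]]  # 256 pairs, one pair per digest bit


def keygen() -> Tuple[KeyHalf, KeyHalf]:
    """Private key: 256 pairs of random secrets. Public key: SHA-256 of each secret."""
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in private]
    return private, public


def _digest_bits(image: bytes) -> List[int]:
    digest = hashlib.sha256(image).digest()
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def sign(private: KeyHalf, image: bytes) -> List[bytes]:
    """Release time: reveal one secret per bit of the image digest. Use each key ONCE."""
    return [pair[bit] for pair, bit in zip(private, _digest_bits(image))]


def verify(public: KeyHalf, image: bytes, signature: List[bytes]) -> bool:
    """Load time: every revealed secret must hash to the matching public value."""
    if len(signature) != 256:
        return False
    return all(
        hmac.compare_digest(hashlib.sha256(s).digest(), pair[bit])
        for s, pair, bit in zip(signature, public, _digest_bits(image))
    )
```

Only the public key needs to be stored on the device, for example alongside the root of trust; the private key stays in the release infrastructure. When `verify` returns `False`, the loader should refuse to run the image and report the failure, in line with the detect-and-report recommendation mentioned above.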

Rule 3: Protect critical data

Patient data, key operating parameters, and even software need to be protected not just in transit over the network, but also within the device. This is accomplished by a security design that incorporates separation and encryption to ensure only authenticated software and users have access to stored data.

Protecting data in transit requires that the data can be viewed only by the intended endpoint. Note that standard wireless encryption does not provide secure communication: it protects only the wireless data link, not the data end to end. Any other system that can access the wireless network can also view the packets in decrypted form. End-to-end data protection is achieved through network security protocols such as TLS, which enable secure client/server communication through mutually authenticated, uniquely encrypted sessions.
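As a configuration sketch, assuming Python's standard `ssl` module, mutual authentication means the server must demand a client certificate as well as present its own. The helper name `make_mutual_tls_context`, the TLS 1.2 floor, and the certificate file parameters are assumptions; a real device would load its own certificate and private key plus the CA certificate of the hospital or manufacturer PKI.

```python
import ssl
from typing import Optional


def make_mutual_tls_context(
    role: str,
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    cafile: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a TLS context in which both peers must present trusted certificates."""
    if role == "server":
        ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
        ctx.verify_mode = ssl.CERT_REQUIRED  # demand and verify a client certificate
    elif role == "client":
        # Verifies the server certificate and host name by default
        ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    else:
        raise ValueError("role must be 'server' or 'client'")
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse legacy protocol versions
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)  # this endpoint's own identity
    if cafile:
        ctx.load_verify_locations(cafile)  # CA that issued the peers' certificates
    return ctx
```

A context created with `PROTOCOL_TLS_CLIENT` already verifies the server certificate and host name; the server side must explicitly opt in to `CERT_REQUIRED`, otherwise it would accept connections from any client.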

Rule 4: Secure keys reliably

Keys used for encryption and authentication must be protected, because if they are compromised, an attacker may uncover sensitive data or impersonate a valid endpoint. For this reason, keys must be isolated from untrusted software. Keys stored in non-volatile memory should always be encrypted and decrypted only after secure boot verification has succeeded. Because protection of patient data is paramount, especially in the EU, high-assurance kernels and security modules can also provide layered separation for a fail-safe design.
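One way to make a stored key decryptable only after secure boot, sketched below, is to seal it under a key-encryption key (KEK) derived from both a device-unique secret and the verified boot measurement: if the software is altered, the derived KEK changes and the stored key cannot be recovered. This is an illustration, not a vetted key-wrap algorithm. The HMAC-based encrypt-then-MAC construction and all function names are assumptions; production designs would typically use AES Key Wrap (RFC 3394) or AES-GCM inside a security module, with the device secret held in hardware and never exposed to software.

```python
import hashlib
import hmac
import os
from typing import Optional

_NONCE_LEN, _KEY_LEN, _TAG_LEN = 16, 32, 32


def derive_kek(device_secret: bytes, boot_digest: bytes) -> bytes:
    """KEK bound to both the device secret and the verified boot measurement."""
    return hmac.new(device_secret, b"kek|" + boot_digest, hashlib.sha256).digest()


def _prf(key: bytes, label: bytes, data: bytes) -> bytes:
    # HMAC-SHA256 used as a pseudorandom function, with a label for domain separation
    return hmac.new(key, label + data, hashlib.sha256).digest()


def seal_key(kek: bytes, key: bytes) -> bytes:
    """Encrypt-then-MAC a 32-byte key for storage in non-volatile memory."""
    if len(key) != _KEY_LEN:
        raise ValueError("expected a 32-byte key")
    nonce = os.urandom(_NONCE_LEN)
    pad = _prf(kek, b"enc|", nonce)
    ciphertext = bytes(k ^ p for k, p in zip(key, pad))
    tag = _prf(kek, b"mac|", nonce + ciphertext)
    return nonce + ciphertext + tag


def unseal_key(kek: bytes, blob: bytes) -> Optional[bytes]:
    """Return the key only if the blob authenticates under this KEK; otherwise None."""
    if len(blob) != _NONCE_LEN + _KEY_LEN + _TAG_LEN:
        return None
    nonce = blob[:_NONCE_LEN]
    ciphertext = blob[_NONCE_LEN:_NONCE_LEN + _KEY_LEN]
    tag = blob[_NONCE_LEN + _KEY_LEN:]
    if not hmac.compare_digest(tag, _prf(kek, b"mac|", nonce + ciphertext)):
        return None  # wrong device or modified boot software
    pad = _prf(kek, b"enc|", nonce)
    return bytes(c ^ p for c, p in zip(ciphertext, pad))
```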

Keys need to be protected during manufacturing and throughout the product lifecycle by an end-to-end security infrastructure. If a key is readable at any point, every device that uses it is vulnerable. An enterprise security infrastructure protects keys and other digital trust assets across distributed supply chains, and it can also provide economic benefits beyond software updates, such as real-time device monitoring, protection against counterfeit devices, and license files that control the availability of optional features.
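Secure software update, mentioned above as one service of this infrastructure, includes checks the device applies before installing an image. The sketch assumes a hypothetical `UpdateManifest` whose signature has already been verified by a separate step (not shown); the device-model check and the rule that versions must increase (to block rollback to an older, vulnerable release) are illustrative design choices rather than requirements stated in this article.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdateManifest:
    # Hypothetical fields; the manifest's signature is assumed to be verified already
    device_model: str
    version: int
    image_sha256: bytes


def check_update(
    manifest: UpdateManifest, image: bytes, device_model: str, installed_version: int
) -> str:
    """Decide whether a downloaded image may be installed on this device."""
    if manifest.device_model != device_model:
        return "rejected: built for a different device"
    if manifest.version <= installed_version:
        # Blocks rollback to an older release with known vulnerabilities
        return "rejected: not newer than installed software"
    if not hmac.compare_digest(hashlib.sha256(image).digest(), manifest.image_sha256):
        return "rejected: image does not match manifest"
    return "accepted"
```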

[Figure 3 | Securing the update process]

Rule 5: Operate reliably

As all medical designers know, one of the biggest threats to a system is the unknown design errors and defects introduced during the development of these complex devices. That is why Green Hills Software promotes PHASE: Principles of High-Assurance Software Engineering.

- Minimal implementation – Code should perform only the functions that are required, avoiding “spaghetti code” that cannot be tested or maintained.

- Component architecture – Large software systems should be built up from components that are small enough to be easily understood and maintained; safety and security critical services should be separated from non-critical ones.

- Least privilege – Each component should be given access to only the resources (e.g. memory, communications channels, I/O devices) that it absolutely needs.

- Secure development process – High-assurance systems, like medical devices, require a high-assurance development process; controls beyond those already in use, such as securing the design tools and adopting secure coding standards, may be needed to ensure a secure design.

- Independent expert validation – Evaluation by an established third party provides confirmation of security claims, and is often required for certification. As with functional safety, components that have already been certified for cybersecurity are preferred as reliable building blocks in a new design.

These principles, which were used to develop Green Hills Software’s INTEGRITY real-time operating system, can also be applied to application development to minimize the likelihood and impact of a software error or a new cybersecurity attack.
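Least privilege can be illustrated with a default-deny broker that grants each component only the resources listed for it. This is a hypothetical sketch: in a real system the separation is enforced by the operating system kernel and memory protection hardware rather than by application code, and the component and resource names are invented for the example.

```python
from typing import Dict, FrozenSet


class AccessDenied(Exception):
    """Raised when a component requests a resource it was never granted."""


class ResourceBroker:
    """Default-deny mediator: a component can use only resources explicitly granted to it."""

    def __init__(self, grants: Dict[str, FrozenSet[str]]) -> None:
        self._grants = dict(grants)

    def allowed(self, component: str, resource: str) -> bool:
        # Unknown components get an empty grant set, so they can access nothing
        return resource in self._grants.get(component, frozenset())

    def open(self, component: str, resource: str) -> str:
        if not self.allowed(component, resource):
            raise AccessDenied(f"{component} is not permitted to use {resource}")
        return f"{component}:{resource}"  # placeholder for a real resource handle
```

In this arrangement a compromised user-interface component still cannot drive the pump motor, because that resource was never granted to it.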

Creating an end-to-end security solution

Building a secure medical system that meets the new regulatory requirements calls for an end-to-end security design that addresses data security and reliability within the networked device throughout the product lifecycle. This requires a device security architecture that ensures safe operation, backed by an enterprise security infrastructure that protects keys, certificates, and sensitive data during operation and across the manufacturing supply chain. The optimum choice of device and enterprise security solutions depends on the device's operating and manufacturing environments, as well as on business tradeoffs, so it is worthwhile to consult experts in the field.