Balancing interoperability and security in an M2M deployment

December 01, 2013

M2M systems incorporate an increasing number of disparate devices and connectivity options, but they must still maintain interoperability and mitigate...

It is nearly impossible to go a day without reading about the explosive growth of devices being connected to the Internet. Industry forecasters project astronomical numbers of connected machines and volumes of data transferred. These figures present both a significant opportunity and considerable challenges for our industry.

In particular, a tension exists between the interoperability of disparate devices and protocols, from edge to enterprise, and the need to secure the data being passed from point to point. Each node along the continuum must be optimized for performance and power, with minimal overhead for the “what if” scenarios created by not knowing exactly what else the device may need to communicate with. This is further complicated by different communication standards, bus protocols, and regulatory requirements. In light of all of this, designers are challenged to meet aggressive time-to-market requirements in an environment where future-proofing the application is uncertain at best because industry standards are lacking.

Several functions must be considered when developing M2M systems for applications that depend on interoperability. In solving interoperability challenges, designers must also address security risks.

Interoperability: Discrete devices, one system

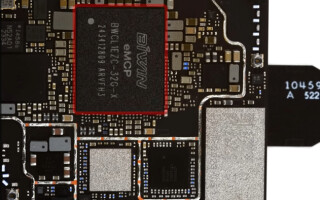

It is nearly impossible to find a single, purpose-built system employing M2M from one vendor that covers the gamut from edge to enterprise. Even a modular approach where multiple functions are integrated, such as a sensor and control unit, still requires more elements to be added to the overall solution. Figure 1 shows the basic functional elements in a typical M2M deployment.

Each use case requires a different set of hardware, software, and connectivity configurations depending on whether the asset at the edge is mobile or fixed, the distance between devices, and whether there are regulatory standards that must be adhered to. For example, a way-finding kiosk located in a shopping mall would be considered an edge device. The kiosk might contain proximity and motion sensors to trigger power up when an individual approaches the unit, along with CMOS image sensors for detecting the individual’s demographics to be used for targeted content delivery and a touch screen interface for display navigation. The kiosk may also contain additional internal environmental sensors to monitor the health of the system such as a temperature sensor for heat buildup within the enclosure. This combination of sensors is designed to work in conjunction with one another to create the user experience and efficiently connect to the balance of the overall solution.

A variety of connectivity options are available, depending on the floor plan layout at the mall and how the mall is managed and operated. The kiosk may be a standalone unit that interacts, in concert with other standalone units, with a central gateway. Alternatively, the kiosk may contain the gateway within its physical structure. The connectivity also varies depending on whether the kiosk is being hosted by the mall’s property management company or by an independent third party, such as a marketing agency, that is prohibited from connecting to the mall’s network. In addition to these considerations, the designer would have to take into account the type of content being transmitted, which in this example would be both data and video, and the modality of the transmission, which could be Wi-Fi, cellular, or Ethernet. With all of these variables to consider from the outset, it is no wonder that interoperability and standards become a challenge.

Securing M2M systems

Security is also a critical consideration when reviewing the various component options in the solution. The risk exists both within a device and between devices. If the sensors are compromised, the system is likely to fail or perform in an unexpected manner. If a sensor is unexpectedly turned off, or the connection used to transfer its data is hacked, data can go missing or be altered, which in turn affects how the data is analyzed and acted upon. Imagine if an image is deleted from the video being fed from a security camera to a processor designed to monitor changes in the viewable area from frame to frame. This disconnect between what the sensor (security camera) captures and what the processor receives could allow for theft, vandalism, or undetected hazards. Designers can guard against this by using secure connections, which can be established in a variety of ways. Options include creating a Virtual Private Network (VPN) with communication carriers or with a secure cloud-based application. Cost and deployment complexity are considerations when choosing among these options.
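The deleted-frame scenario above can be illustrated with a minimal sketch, which is not a substitute for a VPN or other secure connection. It assumes the camera and processor share a secret key and that each frame carries a sequence number; the names `SECRET_KEY`, `seal_frame`, and `open_frame` are hypothetical and chosen only for illustration. The receiver rejects a frame whose authentication tag does not match (altered data) or whose sequence number is not the one it expects (missing data).

```python
import hashlib
import hmac
import struct

# Hypothetical shared secret for illustration only; a real deployment
# would provision and protect a separate key for each device.
SECRET_KEY = b"demo-key-not-for-production"

HEADER_LEN = 8   # 8-byte big-endian sequence number
TAG_LEN = 32     # HMAC-SHA256 digest length


def seal_frame(seq: int, payload: bytes, key: bytes = SECRET_KEY) -> bytes:
    """Prefix the payload with a sequence number and append an HMAC-SHA256 tag."""
    header = struct.pack(">Q", seq)
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header + payload + tag


def open_frame(message: bytes, expected_seq: int, key: bytes = SECRET_KEY) -> bytes:
    """Return the payload, or raise ValueError if the frame was altered or is out of sequence."""
    if len(message) < HEADER_LEN + TAG_LEN:
        raise ValueError("frame too short")
    header = message[:HEADER_LEN]
    payload = message[HEADER_LEN:-TAG_LEN]
    tag = message[-TAG_LEN:]
    expected_tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    # Constant-time comparison avoids leaking how many tag bytes matched.
    if not hmac.compare_digest(tag, expected_tag):
        raise ValueError("frame altered in transit")
    (seq,) = struct.unpack(">Q", header)
    if seq != expected_seq:
        raise ValueError(f"expected frame {expected_seq}, got {seq}")
    return payload
```

In this sketch the processor would track the next expected sequence number itself, so a frame removed in transit shows up as a gap rather than passing unnoticed.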

Efficiently creating an ecosystem

Taking all these criteria into account, along with the balance of the functional elements such as connections to cloud-based applications like analytics or storage, a developer could lose valuable time to market while evaluating each device option. Equally inefficient would be trying to design everything in-house from the board on up. While this would ensure each functional block was designed for a specific purpose and intentionally tied to the corresponding functional blocks, the process would be time consuming and expensive. Similarly, taking the time to test individual Commercial Off-The-Shelf (COTS) hardware items would cost precious time and require a significant resource allocation. Working with a solution provider can save development time.

A system architect should first assess their company’s core competency and ways to leverage this in order to create differentiation. They would then select a solution partner to deliver an integrated solution in terms of hardware, software, and related services for the balance of the application. Given the wide range of use cases and associated technologies able to support them, it is important to explore all architectures and levels of integration. Quite often there is more than one way to deliver a solution to market, and factors such as cost, engineering competencies, and time to market will be key to the deployment strategy.

For example, two companies seeking to deploy a digital signage solution may go about it in completely different ways. One, whose experience is in creating general-purpose desktop computers, would look to differentiate through hardware features such as the number of screens supported or the means of connectivity, and would use a commercially available, standard content management software package. The other company, an Independent Software Vendor (ISV), would potentially look to deliver customized software that combines content management with viewer analytics. Here a preconfigured media player would satisfy the hardware requirements and let the company focus on its core competency.

A partner who can support both models gives designers flexibility and allows for internal and external resource optimization. A good partner would provide a robust set of technology options and be unbiased as to the best combination for meeting the requirements and maximizing system capabilities. During the partner selection process, it is also critical to ensure the solutions integrator has domain-specific experience, which will go a long way toward future-proofing for interoperability, and has earned the necessary industry and agency certifications to address security and compliance.

Healthcare offers an example. Remote patient monitoring is a booming application within the telehealth segment of the medical market. Consider an OEM or ISV whose core competency lies in the analytics associated with patient monitoring. It will need a purpose-built appliance and wireless connections for collecting and managing the data flow. In this example, it would benefit from a partner that is both ISO 13485:2003 certified for medical devices and experienced in delivering solutions that comply with the Health Insurance Portability and Accountability Act (HIPAA) privacy and security rules. Beyond these capabilities, a partner must also be in a position to explain things like the tradeoffs between securely transmitting over the public Internet and creating a virtual private network for the overall application. The tradeoffs can involve cost, total cost of ownership, support models, and the level of security provided, weighed against perceived value in the market, time to market, and opportunity for differentiation. All of these considerations come into play before determining the bill of materials or selecting components.

System deployment: The last piece of the puzzle

Once the design and production of the application are complete, deploying the solution in the field is where interoperability and security are most critical. Connecting the devices to the network, provisioning devices (often across multiple carriers), and effectively tunneling through firewalls are just a few of the tasks that may be needed for a successful deployment. These tactical details, optimized for the user experience, create a solution that makes the technology “invisible” to end users and allows them to focus on the benefits the product provides. For example, people expect their wearable health monitoring device to automatically connect to Wi-Fi in the home and cellular outside of the home without disruption of service. They would not want to have to stop and change settings because of a location change. The ability to make this transparent is a key element of a successful deployment. Not only are these critical steps in the process, but they also provide opportunities for new revenue streams through services provided to the end user.
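As a minimal sketch of the wearable example, assuming the device can report which links are currently usable, the selection logic could be as simple as walking a preference list. The function `choose_link` and the link names `"wifi"` and `"cellular"` are hypothetical and stand in for whatever connection manager a real device would use.

```python
from typing import Dict, Optional, Sequence

# Assumed preference order, following the Wi-Fi-at-home,
# cellular-elsewhere example in the text.
DEFAULT_PREFERENCE = ("wifi", "cellular")


def choose_link(
    available: Dict[str, bool],
    preference: Sequence[str] = DEFAULT_PREFERENCE,
) -> Optional[str]:
    """Return the first preferred link that is currently usable, or None."""
    for name in preference:
        if available.get(name, False):
            return name
    return None
```

Calling `choose_link({"wifi": False, "cellular": True})` returns `"cellular"`, so the user never has to change a setting when leaving the house.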

Additional features can be designed into the device itself to monitor the health or security of the unit and send a notification if something goes awry. Features such as temperature sensors, shock and vibration sensors, or the ability to record the amount of time the system has been running can be added, and their readings analyzed against reference points (such as Mean Time Between Failures, or MTBF) to proactively schedule routine maintenance and parts replacement. Solutions integrators can provide these services along with others such as technical call centers, warranty repair, and installation. Figure 2 offers a blueprint for the range of services required to support a deployment through its life cycle.
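One way to picture this kind of health check is the sketch below. It assumes the unit reports its accumulated runtime and enclosure temperature, and it flags the unit for service once runtime passes a chosen fraction of the rated MTBF or the temperature exceeds a limit. The names `UnitHealth` and `maintenance_reasons`, the 0.8 service fraction, and the 70 °C limit are illustrative assumptions, not values from any particular product.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UnitHealth:
    """Readings a deployed unit might report (hypothetical structure)."""
    runtime_hours: float
    enclosure_temp_c: float


def maintenance_reasons(
    health: UnitHealth,
    mtbf_hours: float,
    service_fraction: float = 0.8,  # assumed: service at 80% of MTBF
    max_temp_c: float = 70.0,       # assumed enclosure temperature limit
) -> List[str]:
    """Return the reasons, if any, to schedule maintenance for this unit."""
    reasons = []
    if health.runtime_hours >= service_fraction * mtbf_hours:
        reasons.append("runtime approaching MTBF")
    if health.enclosure_temp_c > max_temp_c:
        reasons.append("enclosure over temperature")
    return reasons
```

An empty list means no action is needed; a back-end service could run the same check across a fleet and schedule site visits for the units that return reasons.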

Deploying today in the absence of tomorrow’s standards

There are as many potential solutions for deploying an M2M system as there are use cases to address. For companies seeking to capitalize on the growth potential offered by the Internet of Things (IoT), a degree of scale is needed to maximize profit. As the deployment of M2M models expands beyond its legacy roots in telematics, standards will start to emerge that make interoperability among the enabling elements of an M2M signal chain common, at a minimum, across a given market segment. Organizations like oneM2M are striving to develop these standards but, in the interim, working with a partner is the most practical, effective, and efficient way to move forward. It is critical to choose a partner, such as the Rorke Global Solutions (RGS) unit of Avnet Embedded, that brings a wide range of technology options and different levels of integration, along with the complementary life cycle services needed to implement the solution. Ensuring that the partner is not predisposed to one solution, but considers all of them, and that it has domain-specific experience greatly increases the probability of success. Taking the time to assess and select the right partner will lead to faster deployment, better end customer satisfaction, and optimized revenue in the long run.

Avnet Embedded www.avnet.com
