Why is Common Criteria Security Certification Useful and What Do the EAL Levels Mean?

April 06, 2023

Information security is one of the most important topics of our time. One thing is certain: there will always be attackers who try to exploit vulnerabilities in software. We are currently experiencing a rapid increase in the number of cyberattacks. Malicious actors – usually professional criminals or state-sponsored attackers – set out to obtain confidential information, to gain control of systems, or to damage them so badly that they are no longer operational.

However, the motives behind cyberattacks play no role (or only a subordinate one) in the Common Criteria, because actors are instead assigned roles that make them tangible as threats. The Common Criteria distinguishes between a user who compromises a system unintentionally and an attacker (a threat agent, in Common Criteria terms) who attacks it deliberately. But even this distinction matters only insofar as it bears on the potential damage to the "assets to be defended" (specifically, the components of the system).

How a Common Criteria Certification Works

Consider a manufacturer (system integrator) of interactive kiosks who wants to certify a new series of kiosks. The kiosk, consisting of hardware (SoC, payment terminal, and display) and software (the operating system, a hardware abstraction layer, and the applications for ordering goods and paying over the network), will be examined in its entirety for this purpose.

This is because anyone who certifies against the Common Criteria has an entire system, IT product, or embedded system (referred to in the Common Criteria as the Target of Evaluation, or TOE for short) that must be protected against damage from the actors described above. This typically, though not necessarily, includes the hardware, which must be protected against physical misuse such as theft and manipulation, and the software, which must be evaluated against defined security requirements. However, other security goals can also be defined.

The system integrator is not alone. Another entity – usually a testing laboratory – assists with certification, and an authority – usually governmental – awards the certification.

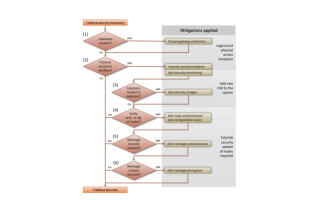

All conceivable and plausible actors involved with the system are identified: users (customers), programmers (who might install a Trojan horse for whatever motive), and also vandals who deliberately damage the kiosk. All conceivable attacks by these actors are named and categorized in the language of the Common Criteria, and countermeasures are defined that eliminate or minimize the damage such attacks could cause, so that the components (assets) are protected. Finally, the authority, e.g., the German Federal Office for Information Security (BSI), verifies whether the certification can be awarded and then awards or denies it.
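At its core, the identification step above is a traceability check: every threat must be countered by at least one security objective. The following Python sketch models that check for the kiosk example. The threat and objective names (T.TROJAN, O.CODE_REVIEW, and so on) and the `Threat` and `SecurityTarget` classes are hypothetical illustrations, not taken from any real Security Target or evaluation tool.

```python
from dataclasses import dataclass, field


@dataclass
class Threat:
    name: str          # hypothetical identifier in the T.* naming style
    agent: str         # the threat agent carrying out the attack
    assets: list[str]  # assets the attack would damage


@dataclass
class SecurityTarget:
    threats: list[Threat]
    # security objective name -> names of the threats it counters
    objectives: dict[str, list[str]] = field(default_factory=dict)

    def uncountered_threats(self) -> list[str]:
        """Return the names of threats that no security objective counters."""
        countered = {name for names in self.objectives.values() for name in names}
        return [t.name for t in self.threats if t.name not in countered]


kiosk = SecurityTarget(
    threats=[
        Threat("T.TROJAN", "malicious programmer", ["ordering application"]),
        Threat("T.PHYSICAL_TAMPER", "vandal", ["payment terminal", "display"]),
        Threat("T.EAVESDROP", "network attacker", ["payment data"]),
    ],
    objectives={
        "O.CODE_REVIEW": ["T.TROJAN"],
        "O.TAMPER_EVIDENCE": ["T.PHYSICAL_TAMPER"],
    },
)

# The rationale is incomplete until every threat is countered.
print(kiosk.uncountered_threats())  # ['T.EAVESDROP']
```

In a real Security Target this mapping appears as the security objectives rationale, which the evaluator checks; the sketch only shows the shape of that argument.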

The Philosophy of the Common Criteria

The Common Criteria is a current, regularly maintained, generic security certification. It is designed to be applicable as broadly as possible, in contrast to a domain-specific security certification such as DO-356A/ED-203A, which was designed for avionics systems and is used only there (i.e., in aircraft).

The Common Criteria is explicitly designed so that findings from IT security research can be incorporated into it: its structure is flexible enough to be extended or modified. This flexibility also allows many of its requirements to be reused for specific certifications such as DO-356A/ED-203A.

Thus, on the one hand, already certified artifacts can be transferred comparatively conveniently to new projects, no matter which specific certification is sought; on the other hand, a system pre-certified in this way can serve as the basis for new projects. This makes particular sense for operating system manufacturers, since they cannot know in advance whether their operating system will be used in a car, a drone, an industrial robot, an exoskeleton, or a satellite.

Consider an aircraft manufacturer who wants to have their avionics system certified according to DO-356A/ED-203A and who uses a real-time operating system to guarantee that applications behave deterministically: rudders must react within a defined time. In this case, few requirements remain that the Common Criteria does not cover. Indeed, the Common Criteria and DO-356A/ED-203A share a total of 32 requirements that are fully or at least mostly in agreement, and only 7 requirements are not applicable.

For the comparatively new ISO/SAE 21434 standard, which addresses the cybersecurity of electronic systems in road vehicles, there are 93 congruent or nearly congruent requirements and only 9 that the Common Criteria does not cover. Each congruent requirement can be mapped to the corresponding requirement of the specific security certification. This is no coincidence: most specific security certifications trace their roots to the Common Criteria.

Coming back to the example of the aircraft manufacturer: in this age of government-backed hacking attacks and extortion gangs threatening to crash airplanes, cybersecurity and functional safety are now inextricably intertwined. This calls for software that is designed for safety as well as security and can demonstrate operation with integrity through the appropriate certifications.

In this case, certification against DO-356A/ED-203A and against the safety standard DO-178C is therefore necessary. Thus, a system integrator looking for a suitable foundation for their project will save a lot of time, effort, and money by choosing an operating system that is certified (or easily certifiable) against the relevant industry safety standard, and is also certified against the Common Criteria to a high enough level to cover the applicable industry-specific cybersecurity standard.

The Security Evaluation

Just like DO-178C, which defines safety levels from DAL D up to the highest level, DAL A, increasing evaluation assurance levels (EALs) provide growing assurance that reasonable confidence can be placed in the IT security of the certified system. However, while DO-178C allows a more or less clear statement about safety, the Common Criteria levels do not directly indicate how secure a system is in the sense of a secure/insecure dichotomy; instead, they state how much trust in a system is justified.

This indirect statement of trust reflects the fact that there can be no absolute certainty in matters of IT security. For a safety level such as DAL A, statistical (failure) probabilities can justify trust, because only technical circumstances are analyzed. For the Common Criteria, and for IT security in general, the human factor is decisive: we are dealing with an antagonist who, unlike a technical system, can make a system fail at will. A technical system may fail, but it cannot deliberately cause itself to fail.

This is why the Common Criteria deliberately takes every possibility into account. In practice, evaluators adopt the attacker's perspective and search for vulnerabilities (with a thoroughness that depends on the level) using approaches such as the flaw hypothesis methodology, penetration testing, and other measures.

Nevertheless, or precisely because of this approach, the Common Criteria provides a kind of blueprint or construction kit for designing a system from the ground up so that vulnerabilities can be avoided as far as possible and assets enjoy the best possible protection. The more effort is invested in evaluating this, the greater the confidence in a system. This is reflected in the levels as they increase.

Evaluation Assurance Levels (EAL) of the Common Criteria

Achieving one of the seven EALs requires meeting certain conditions. First, three dimensions matter when evaluating an embedded system: scope, depth, and rigor.

Scope simply means how much of the embedded system is evaluated (you can also have only parts of an embedded system certified; the Common Criteria gives you the freedom to certify what you want to certify). Depth indicates how fine-grained the examination of the product is, that is, how detailed the analysis is. Finally, rigor indicates how strictly the evaluation is performed, ranging up to formal proofs of security properties.

The requirements are organized in a predefined matrix: assurance classes are divided into families, and each family raises objectives and requirements for the embedded system to be certified. The three dimensions serve as the compass for the level required in each class. The classes consulted for an EAL are Development (ADV), Guidance Documents (AGD), Life-cycle Support (ALC), Security Target Evaluation (ASE; the Security Target is the central document with which the system integrator demonstrates its efforts), Tests (ATE), and Vulnerability Assessment (AVA).

The Tests class, for example, contains the families Depth (ATE_DPT) and Functional Tests (ATE_FUN), among others. The Depth family (ATE_DPT) should not be confused with the dimension of depth mentioned above.

To reach a given EAL, every family must reach (at least) the component level that the EAL requires. For example, to reach EAL 5, the Depth family in the Tests class must reach at least level 3 (ATE_DPT.3, testing against the modular design), whereas level 1 (ATE_DPT.1) corresponds to testing against the basic design.
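The "at least this level in every family" rule lends itself to a small check. The Python sketch below encodes, for a subset of families only, the minimum component level each EAL package requires, assumed from the assurance-level table in CC Part 3 (verify the values against the CC version that applies); a 0 means the package does not include that family. Because several families (for example ADV_ARC, AGD_OPE, ALC_CMS, and ATE_COV) are omitted, the result is indicative only. The names `EAL_REQUIREMENTS`, `shortfalls`, and `highest_eal` are hypothetical.

```python
# Minimum component level per family for EAL 1..7 (index 0 is EAL 1).
# 0 means the EAL package does not include the family.
# Subset of the CC Part 3 assurance-level table; verify against your CC version.
EAL_REQUIREMENTS: dict[str, tuple[int, ...]] = {
    "ADV_FSP": (1, 2, 3, 4, 5, 5, 6),
    "ADV_IMP": (0, 0, 0, 1, 1, 2, 2),
    "ADV_TDS": (0, 1, 2, 3, 4, 5, 6),
    "ALC_CMC": (1, 2, 3, 4, 4, 5, 5),
    "ALC_DVS": (0, 0, 1, 1, 1, 2, 2),
    "ATE_DPT": (0, 0, 1, 1, 3, 3, 4),
    "ATE_FUN": (0, 1, 1, 1, 1, 2, 2),
    "AVA_VAN": (1, 2, 2, 3, 4, 5, 5),
}


def shortfalls(achieved: dict[str, int], eal: int) -> dict[str, tuple[int, int]]:
    """Return {family: (achieved, required)} for every family below the EAL minimum."""
    if not 1 <= eal <= 7:
        raise ValueError("EAL must be between 1 and 7")
    gaps = {}
    for family, levels in EAL_REQUIREMENTS.items():
        required = levels[eal - 1]
        have = achieved.get(family, 0)  # a missing family counts as level 0
        if have < required:
            gaps[family] = (have, required)
    return gaps


def highest_eal(achieved: dict[str, int]) -> int:
    """Return the highest EAL whose minimums (in this subset) are all met, or 0."""
    met = [eal for eal in range(1, 8) if not shortfalls(achieved, eal)]
    return max(met, default=0)


# Hypothetical product evaluated exactly at the EAL 3 package levels.
product = {"ADV_FSP": 3, "ADV_TDS": 2, "ALC_CMC": 3, "ALC_DVS": 1,
           "ATE_DPT": 1, "ATE_FUN": 1, "AVA_VAN": 2}

print(highest_eal(product))               # 3
print(shortfalls(product, 5)["ATE_DPT"])  # (1, 3)
```

The shortfall report for EAL 5 makes the gap explicit: among other things, ATE_DPT must rise from level 1 to level 3.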

At level 1 (basic design), only a comparatively superficial description of the TOE security functionality (TSF) is required, together with evidence that it functions as described. At level 3, detailed descriptions are required for the TSF and for each of its modules, covering their internal functionality and interfaces, together with evidence that these function as specified. Scope, depth, and rigor are all greater here. The more is disclosed, the Common Criteria argues, the greater the confidence that errors will be avoided. Thus, across all classes, certain sub-goals must be met to achieve a given level.

EAL 3 or 5 – What's the Difference and What Do Plus Symbols Mean?

Take, for example, a real-time operating system such as PikeOS, which holds a current and valid certification and was previously certified at a lower level; the differences are easy to see. The previous certification of the PikeOS Separation Kernel was at EAL 3+. The plus sign indicates augmentation: in addition to all the component levels the EAL requires, further evaluation took place with components that seemed meaningful in the context of the embedded system, either higher levels of families in the EAL package or families the package does not include.

EAL 3 indicates that the embedded system has been methodically tested and verified. This is understood to include a complete security target in which the security functional requirements (SFR) have been analyzed, a description of the security architecture of the embedded system to understand its functionality, interface descriptions, and guidance documents.

Functional testing, penetration testing, and vulnerability analysis are also required, as are further measures such as configuration management of the embedded system and proof of secure delivery procedures. The Common Criteria speaks of an overall moderate level of security for developers and users that can be independently confirmed.

The PikeOS Separation Kernel has now reached EAL 5+ in version 5.1.3. This brings several differences: among other things, this level adds, for the first time, a modular design of the TOE security functionality (TSF) to the requirements mentioned above.

The TSF comprises the hardware, software, and firmware (e.g., the hardware abstraction layer, which can be the BIOS or board support packages) that must be relied upon to meet the security functional requirements (SFRs). The biggest difference is that the embedded system has not only been methodically tested and checked, but has also been developed in a more structured way and is therefore more analyzable, and a semi-formal description of it exists.

In the case of PikeOS, the plus sign (+) indicates that, in addition to all EAL 5 requirements, the families AVA_VAN (Vulnerability Analysis), ADV_IMP (Implementation Representation), ALC_DVS (Development Security), and ALC_CMC (CM Capabilities), among others, have been evaluated at the level required for EAL 7, the highest level. In other words, PikeOS meets EAL 7 requirements in some areas. For Vulnerability Analysis in particular, this is a strong added value for system integrators, because it has been shown that any remaining vulnerabilities of the TOE are exploitable only by attackers with an attack potential of (in Common Criteria language) "beyond high."
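The plus sign can be derived mechanically: compare the achieved component levels against the EAL 5 package and list every family that goes beyond it. In the sketch below, the achieved levels are an assumption that raises only the four families named above to their EAL 7 components (AVA_VAN.5, ADV_IMP.2, ALC_DVS.2, ALC_CMC.5); they are not the complete component list of the PikeOS certificate. Only a subset of families is modeled, the package values are assumed from CC Part 3, and the function names are hypothetical.

```python
# EAL 5 package levels for a subset of families (verify against CC Part 3).
EAL5_PACKAGE = {"ADV_FSP": 5, "ADV_IMP": 1, "ADV_TDS": 4, "ALC_CMC": 4,
                "ALC_DVS": 1, "ATE_DPT": 3, "ATE_FUN": 1, "AVA_VAN": 4}
# EAL 7 package levels for the same subset.
EAL7_PACKAGE = {"ADV_FSP": 6, "ADV_IMP": 2, "ADV_TDS": 6, "ALC_CMC": 5,
                "ALC_DVS": 2, "ATE_DPT": 4, "ATE_FUN": 2, "AVA_VAN": 5}


def augmentations(achieved: dict[str, int], package: dict[str, int]) -> dict[str, int]:
    """Return the families whose achieved level exceeds the package level."""
    missing = [f for f, lvl in package.items() if achieved.get(f, 0) < lvl]
    if missing:
        raise ValueError(f"package not met for: {missing}")
    # Families absent from the package (e.g. ALC_FLR) also count as augmentation.
    return {f: lvl for f, lvl in achieved.items() if lvl > package.get(f, 0)}


def eal_label(eal: int, achieved: dict[str, int], package: dict[str, int]) -> str:
    """Format the EAL designation, adding '+' when the evaluation is augmented."""
    return f"EAL {eal}+" if augmentations(achieved, package) else f"EAL {eal}"


# Assumed levels: the EAL 5 package with four families raised to EAL 7 components.
achieved = dict(EAL5_PACKAGE, AVA_VAN=5, ADV_IMP=2, ALC_DVS=2, ALC_CMC=5)

extra = augmentations(achieved, EAL5_PACKAGE)
print(eal_label(5, achieved, EAL5_PACKAGE))  # EAL 5+
print(sorted(f for f, lvl in extra.items()
             if f in EAL7_PACKAGE and lvl >= EAL7_PACKAGE[f]))
# ['ADV_IMP', 'ALC_CMC', 'ALC_DVS', 'AVA_VAN']
```

Raising the package check before looking for augmentations mirrors the rule that a "+" only makes sense once the base EAL is fully met.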

This is the highest possible rating. It is based on a score determined by the following factors: the time required to identify and exploit the vulnerability (Elapsed Time); the technical expertise required, which covers not only specific knowledge but also whether several experts are needed (Expertise); knowledge of the design and operation of the TOE (Knowledge of TOE); the opportunity the attacker has to access the TOE (Window of Opportunity); and the IT hardware, software, or other tools required for exploitation (Equipment).
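To make the scoring concrete, the Python sketch below adds up the five factors and maps the sum to the attack potential needed to exploit a vulnerability. The point values and rating bands are assumed to follow the attack-potential tables in Annex B of the Common Methodology for Information Technology Security Evaluation (CEM) v3.1; verify them against the revision that applies to your evaluation. The "not practical" entries, which rule an attack out entirely, are omitted, and the function name `attack_potential` is hypothetical.

```python
# Point values per factor, assumed from CEM v3.1 Annex B (verify before use).
ELAPSED_TIME = {"<=1 day": 0, "<=1 week": 1, "<=2 weeks": 2, "<=1 month": 4,
                "<=2 months": 7, "<=3 months": 10, "<=4 months": 13,
                "<=5 months": 15, "<=6 months": 17, ">6 months": 19}
EXPERTISE = {"layman": 0, "proficient": 3, "expert": 6, "multiple experts": 8}
KNOWLEDGE_OF_TOE = {"public": 0, "restricted": 3, "sensitive": 7, "critical": 11}
WINDOW_OF_OPPORTUNITY = {"unnecessary/unlimited": 0, "easy": 1,
                         "moderate": 4, "difficult": 10}
EQUIPMENT = {"standard": 0, "specialised": 4, "bespoke": 7, "multiple bespoke": 9}

# (minimum score, attack potential required to exploit), highest band first.
RATING_BANDS = [(25, "Beyond High"), (20, "High"), (14, "Moderate"),
                (10, "Enhanced-Basic"), (0, "Basic")]


def attack_potential(elapsed: str, expertise: str, knowledge: str,
                     window: str, equipment: str) -> tuple[int, str]:
    """Sum the factor values and return (score, required attack potential)."""
    score = (ELAPSED_TIME[elapsed] + EXPERTISE[expertise]
             + KNOWLEDGE_OF_TOE[knowledge] + WINDOW_OF_OPPORTUNITY[window]
             + EQUIPMENT[equipment])
    for minimum, rating in RATING_BANDS:
        if score >= minimum:
            return score, rating
    raise RuntimeError("unreachable: all factor values are non-negative")


print(attack_potential("<=1 month", "expert", "restricted", "moderate", "specialised"))
# (21, 'High')
print(attack_potential(">6 months", "multiple experts", "critical",
                       "difficult", "bespoke"))
# (55, 'Beyond High')
```

Under these assumed values, a vulnerability rated "beyond high" needs a score of 25 or more, which is the level of resistance AVA_VAN.5 (resistance to attackers with high attack potential) is meant to establish.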

In addition, EAL 5 requires comprehensive configuration management of the embedded system, including a certain degree of automation, as well as a complete interface description. According to the Common Criteria, a product developed in this way can withstand not only attackers with basic attack potential (the level assured at EAL 3) but also attackers with moderate attack potential.

Since PikeOS is by its very nature a hard real-time separation kernel that safely and securely separates applications in space and time (i.e., in partitions and flows), an attacker who has managed to gain access to one partition cannot, in theory, gain access to another partition. Nor can the attacker escalate privileges any further; they would only have access to that single application. Even this is very unlikely, however, given the strict rights management enforced by the required and implemented security features, and mitigation techniques such as secure boot or a hardware-software chain of trust.
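The isolation argument can be illustrated with a toy model in which partitions may communicate only over statically configured channels, so the set of partitions a compromised partition can influence is exactly what the channel configuration allows. This Python sketch is a hypothetical illustration of the principle: the `Channel` and `SeparationPolicy` classes and the partition names are invented here, it does not use or reflect any PikeOS API or configuration format, and it models spatial separation only, not time partitioning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """A statically configured, one-way communication channel."""
    source: str
    destination: str


class SeparationPolicy:
    """Toy model: partitions interact only over channels fixed at configuration time."""

    def __init__(self, partitions: set[str], channels: set[Channel]) -> None:
        endpoints = {p for c in channels for p in (c.source, c.destination)}
        unknown = endpoints - partitions
        if unknown:
            raise ValueError(f"channels reference unknown partitions: {sorted(unknown)}")
        self._partitions = frozenset(partitions)
        self._channels = frozenset(channels)

    def may_send(self, source: str, destination: str) -> bool:
        """A partition may send only where a configured channel exists."""
        return Channel(source, destination) in self._channels

    def reachable_from(self, compromised: str) -> set[str]:
        """Partitions an attacker in `compromised` could influence via channels."""
        if compromised not in self._partitions:
            raise ValueError(f"unknown partition: {compromised}")
        seen, stack = {compromised}, [compromised]
        while stack:
            current = stack.pop()
            for channel in self._channels:
                if channel.source == current and channel.destination not in seen:
                    seen.add(channel.destination)
                    stack.append(channel.destination)
        return seen


# Hypothetical kiosk layout: ordering may hand requests to payment; nothing flows back.
policy = SeparationPolicy(
    partitions={"ui", "ordering", "payment"},
    channels={Channel("ordering", "payment")},
)

print(policy.may_send("payment", "ordering"))     # False: no channel back
print(sorted(policy.reachable_from("ordering")))  # ['ordering', 'payment']
print(sorted(policy.reachable_from("payment")))   # ['payment']
```

Because the channel set is fixed when the policy is created, a compromised partition cannot open new paths at run time; its reach is bounded by the configuration an evaluator can inspect.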

At EAL 5, the system integrator is also required, for the first time, to apply a certain degree of specialist security engineering techniques, but the development costs, as the Common Criteria states, are not unreasonable. What this means for the final product can only be determined more precisely after examining the requirements. However, the Common Criteria also states that intensive efforts to create a secure product result in high security requirements being met, and that this is independently confirmed.

EAL 5 (and in the case of PikeOS, even EAL 5+) thus occupies a sweet spot between security and cost-effectiveness and, with its rich range of functions, offers the possibility of creating modern, networked and secure embedded systems.