Growth in mobile and cloud-based speech recognition fueling embedded speech technologies

December 01, 2011

Improvements in embedded speech technology yield a five-step Voice User Interface (VUI) capable of hands-free, eyes-free voice recognition.

Many of the largest players in speech technology today are also heavyweights in the mobile phone operating system (OS) market. Microsoft was the first of the software and mobile OS giants to build a speech team. In the early 1990s, Bill Gates preached the benefits of Voice User Interfaces (VUIs) and predicted they would play a role in how people interact with computers. Google moved aggressively, building an elite team of speech technologists early in the 21st century, and with its Android release spurred the mobile industry toward speech interfaces and voice control. Apple has always been king of the user experience and, until recently, avoided pushing speech technology because of accuracy challenges. However, with its acquisition of Siri (a voice concierge service) and the incorporation of Siri’s technology into the iPhone 4S, Apple could be ushering in a new generation of natural language user experiences through voice.

Speech technology has become critical to the mobile industry for several reasons, chiefly because speaking is easier than typing and the mobile phone form factor is built for talking more than for typing. In addition, given the enormous revenue potential of mobile search, mobile OS providers see the value of adding voice recognition to their technology portfolios.

Why embedded?

Much of the heavy lifting for VUIs is performed in the cloud, and that’s where most of the investment from the big OS players has gone. The cloud offers virtually unlimited MIPS and memory, two essentials for advanced voice search processing. As cloud-based speech usage grows, a similar trend appears to be following in the embedded realm.

Embedded speech is the only solution that enables speech control and input when the cloud is unreachable, which makes it a necessary part of the user experience. Embedded speech can also run with fewer MIPS and less memory, helping to conserve the device’s battery.

The optimal client/cloud arrangement places voice activation on the client and the heavy lifting of deciphering text and meaning in the cloud. This enables a device that is always on and always listening, so a voice command can be given and executed without pressing a button on the client. This “no hands or eyes necessary” paradigm is particularly useful in the car for safety and at home for convenience.

For example, in the recently introduced Galaxy S II Android phone, Samsung’s Voice Talk uses Sensory’s TrulyHandsfree Voice Control, an embedded speech technology, to activate the phone with the words “Hey Galaxy.” This phrase calls up the Vlingo cloud-based recognition service, which lets the user give commands and input text without touching the phone.
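The client/cloud split described above can be sketched as a small state machine. This is a minimal illustration under stated assumptions: audio is modeled as lists of already-recognized words, and `HandsFreeClient` and `cloud_recognize` are hypothetical names, not Sensory, Samsung, or Vlingo APIs.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class HandsFreeClient:
    """Hypothetical client: a small wake-phrase spotter runs on the device,
    and only audio after activation is sent to a cloud recognizer."""
    wake_phrase: str
    cloud_recognize: Callable[[List[str]], str]  # stand-in for a cloud service
    awaiting_command: bool = False
    log: List[str] = field(default_factory=list)

    def on_audio(self, words: List[str]) -> None:
        if not self.awaiting_command:
            # Stage 1 on the client: replaces the button press.
            if " ".join(words).lower() == self.wake_phrase.lower():
                self.awaiting_command = True
                self.log.append("activated")
            return  # nothing else leaves the device
        # Stage 2 in the cloud: transcribe the command that follows.
        self.log.append("cloud: " + self.cloud_recognize(words))
        self.awaiting_command = False  # back to low-power listening


client = HandsFreeClient("hey galaxy", cloud_recognize=lambda w: " ".join(w))
client.on_audio(["play", "music"])   # ignored: no wake phrase yet
client.on_audio(["Hey", "Galaxy"])   # wake phrase detected on the client
client.on_audio(["call", "mom"])     # forwarded to the cloud stand-in
print(client.log)  # ['activated', 'cloud: call mom']
```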

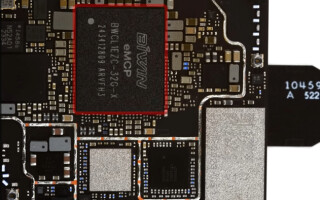

Speech recognition can be implemented on devices with as little as 10 MIPS and tens of thousands of bytes of memory. Sensory’s line of speech chips includes RISC single chips based on 8-bit microcontrollers and natural language processors that utilize small embedded DSPs (see Figure 1). In general, the more MIPS and memory thrown at speech recognition, the more capabilities (faster response times, larger vocabularies, and more complex grammar) a product can have.
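To make the MIPS figure concrete, the arithmetic below converts processor throughput into an instruction budget per audio frame. The 10 ms frame shift is an assumption (a common choice in speech front ends), and `instructions_per_frame` is a hypothetical helper.

```python
def instructions_per_frame(mips: float, frame_ms: float = 10.0) -> int:
    """Instructions available per audio frame.

    mips: throughput in millions of instructions per second.
    frame_ms: frame shift in milliseconds (10 ms assumed for illustration).
    """
    instructions_per_second = mips * 1_000_000
    return round(instructions_per_second * frame_ms / 1000)


for mips in (10, 100, 1000):
    print(f"{mips} MIPS -> {instructions_per_frame(mips):,} instructions per frame")
# 10 MIPS -> 100,000 instructions per frame
# 100 MIPS -> 1,000,000 instructions per frame
# 1000 MIPS -> 10,000,000 instructions per frame
```

At 10 MIPS, every acoustic scoring and search step for a frame has to fit in roughly 100,000 instructions, which is why larger vocabularies and grammars call for more MIPS.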

The general approaches to speech recognition are similar no matter what platform implements the tasks. Statistical approaches like hidden Markov modeling and neural networks have been the primary methods for speech recognition for a number of years. Moving from the client to the cloud allows statistical language modeling and more complex techniques to be deployed.
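As an illustration of the hidden Markov modeling mentioned above, here is a textbook Viterbi decoder that finds the most likely hidden-state sequence for a series of observations. The two-state silence/speech model and all probabilities are made up for the example; production recognizers use far larger models whose emission scores come from acoustic features, not a two-symbol alphabet.

```python
import math
from typing import Dict, List, Tuple


def viterbi(obs: List[str],
            states: List[str],
            start: Dict[str, float],
            trans: Dict[str, Dict[str, float]],
            emit: Dict[str, Dict[str, float]]) -> Tuple[List[str], float]:
    """Return the most likely state path and its log probability."""
    # best[t][s]: best log probability of any path ending in state s at time t.
    best = [{s: math.log(start[s]) + math.log(emit[s][obs[0]]) for s in states}]
    back: List[Dict[str, str]] = [{}]
    for t in range(1, len(obs)):
        best.append({})
        back.append({})
        for s in states:
            prev, score = max(
                ((p, best[t - 1][p] + math.log(trans[p][s])) for p in states),
                key=lambda item: item[1],
            )
            best[t][s] = score + math.log(emit[s][obs[t]])
            back[t][s] = prev
    # Trace back from the best final state.
    last = max(states, key=lambda s: best[-1][s])
    path = [last]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    path.reverse()
    return path, best[-1][last]


# Toy model: hidden states are silence/speech, observations are frame energy.
STATES = ["sil", "speech"]
START = {"sil": 0.8, "speech": 0.2}
TRANS = {"sil": {"sil": 0.9, "speech": 0.1},
         "speech": {"sil": 0.2, "speech": 0.8}}
EMIT = {"sil": {"lo": 0.9, "hi": 0.1},
        "speech": {"lo": 0.2, "hi": 0.8}}

path, logp = viterbi(["lo", "lo", "hi", "hi", "hi", "lo"], STATES, START, TRANS, EMIT)
print(path)  # ['sil', 'sil', 'speech', 'speech', 'speech', 'sil']
```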

The VUI stages

To create a truly hands-free, eyes-free user experience, several technology stages must be addressed (see Figure 2).

Stage 1: Voice activation

This stage essentially replaces the button press. The recognizer must be always on, ready to call Stage 2 into operation, and able to activate in very noisy situations. Another key criterion for this stage is a very fast response time. Delays of more than a few hundred milliseconds can cause recognition errors because users start speaking to Stage 2 before its recognizer is listening, so voice activation must respond as quickly as a button, which is nearly instantaneous. Simple command-and-control functions can be handled on the client, either by the Stage 1 recognizer or by a more complex Stage 2 system, which could be embedded or cloud-based.
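The timing argument reduces to simple arithmetic: if Stage 2 is not ready by the time the user starts the command, the difference is lost. The function and the 150 ms user pause below are hypothetical values chosen for illustration.

```python
def clipped_ms(activation_latency_ms: float, user_pause_ms: float) -> float:
    """Milliseconds of the command spoken before Stage 2 is listening.

    activation_latency_ms: time from the end of the wake phrase until the
    Stage 2 recognizer is ready. user_pause_ms: how long the user waits
    before starting the command. Both are hypothetical inputs.
    """
    return max(0.0, float(activation_latency_ms - user_pause_ms))


# Assume the user pauses about 150 ms after the wake phrase (illustrative).
print(clipped_ms(50, 150))   # 0.0   -> button-like latency, nothing lost
print(clipped_ms(500, 150))  # 350.0 -> the first 350 ms of the command is missed
```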

Stage 2: Speech recognition and transcription

The more powerful and more power-hungry Stage 2 recognizer converts what is spoken into text. If the purpose is text messaging or voice dialing, the process can stop here; if the user wants a question answered or data accessed, the system moves on to Stage 3. Because the Stage 1 recognizer can respond in high noise, it can lower the volume of the car radio or home AV system to help Stage 2 recognition.
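The branching at the end of Stage 2, plus the volume ducking that Stage 1 makes possible, might look like the following sketch. The task labels, the `Route` enum, and the 0.2 duck level are assumptions, not part of any product described here.

```python
from enum import Enum


class Route(Enum):
    DONE = "stop after transcription"
    NEEDS_INTENT = "continue to Stage 3"


# Hypothetical task labels for the two examples the article gives.
TRANSCRIPTION_ONLY = {"text_message", "voice_dial"}


def route_after_stage2(task: str) -> Route:
    """Dictation-style tasks end at Stage 2; questions and data requests go on."""
    return Route.DONE if task in TRANSCRIPTION_ONLY else Route.NEEDS_INTENT


def duck_volume(current: float, duck_to: float = 0.2) -> float:
    """Lower media volume (0.0 to 1.0) while Stage 2 listens; never raise it."""
    return min(current, duck_to)


print(route_after_stage2("voice_dial"))        # Route.DONE
print(route_after_stage2("weather_question"))  # Route.NEEDS_INTENT
print(duck_volume(0.8), duck_volume(0.1))      # 0.2 0.1
```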

Stage 3: Intent and meaning

This is probably the biggest challenge in the process. Even when the speech is accurately transcribed, what does it mean? For example, what query does the user actually want sent to an Internet search engine? Today’s “intelligence” might try to modify the search to better fit what it thinks the user wants, but computers are remarkably bad at figuring out intent. Apple’s Siri intelligent assistant, which grew out of the DoD-funded CALO project involving more than 300 researchers, might be today’s best example of intelligent interpretation.
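A naive keyword-overlap intent guesser shows why intent is hard. It is a deliberately simple sketch with hypothetical intent names and keyword lists, not how Siri or any production system works; the last call shows it missing an obvious weather question that contains no weather keyword.

```python
from typing import Dict, Set

# Hypothetical intents and keyword sets, chosen only for illustration.
INTENT_KEYWORDS: Dict[str, Set[str]] = {
    "navigate": {"directions", "route", "navigate", "map"},
    "weather": {"weather", "rain", "forecast", "temperature"},
    "call": {"call", "dial", "phone"},
}


def guess_intent(transcript: str) -> str:
    """Pick the intent with the most keyword overlap; fall back to web search."""
    words = set(transcript.lower().split())
    scores = {intent: len(words & kws) for intent, kws in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "web_search"


print(guess_intent("Will it rain tomorrow"))              # weather
print(guess_intent("Give me directions to the airport"))  # navigate
print(guess_intent("Do I need an umbrella"))              # web_search (intent missed)
```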

Stage 4: Data search and query

Searching through data and finding the correct results can be straightforward or complex, depending on the query. Requests for maps and directions can be handled reliably because their grammar is well understood and their goal, a map search, is clear. With Google and other search providers pouring money and time into data search, this stage will continue to improve.
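Directions requests are tractable partly because a narrow pattern can capture them. The regular expression below is a hypothetical sketch of slot filling for one phrasing family; it is not any map provider’s actual grammar.

```python
import re
from typing import Dict, Optional

# Hypothetical grammar for one family of direction requests.
DIRECTIONS = re.compile(
    r"^(?:give me |get )?directions (?:from (?P<origin>.+?) )?to (?P<dest>.+)$",
    re.IGNORECASE,
)


def parse_directions(text: str) -> Optional[Dict[str, str]]:
    """Extract origin/destination slots, or return None if the request doesn't fit."""
    match = DIRECTIONS.match(text.strip())
    if match is None:
        return None
    return {
        "origin": match.group("origin") or "current location",
        "destination": match.group("dest"),
    }


print(parse_directions("directions to San Jose"))
# {'origin': 'current location', 'destination': 'San Jose'}
print(parse_directions("Get directions from the office to the airport"))
# {'origin': 'the office', 'destination': 'the airport'}
print(parse_directions("what's the weather"))
# None
```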

Stage 5: Voice response

A voice response to queries is a nice alternative to a display response, which can cause drivers to take their eyes off the road or cause inconvenience in the home. Today’s state-of-the-art text-to-speech systems are highly intelligible and have progressed to sound more natural than previous automated voice systems.
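Putting the five stages together, a toy end-to-end flow could look like this. Every stage is a hypothetical stub operating on text rather than audio, and the canned weather answer is fake data; the point is only the order of the stages and the idle state when no wake phrase is heard.

```python
from typing import Optional


# Each stage is a hypothetical stub standing in for a real component.
def stage1_activate(audio: str) -> bool:
    return audio.lower().startswith("hey galaxy")


def stage2_transcribe(audio: str) -> str:
    return audio[len("hey galaxy"):].strip(" ,")


def stage3_intent(text: str) -> str:
    return "weather" if "weather" in text.lower() else "search"


def stage4_query(intent: str, text: str) -> str:
    canned = {"weather": "Sunny, 18 degrees"}  # fake data for the sketch
    return canned.get(intent, f"Top result for '{text}'")


def stage5_respond(answer: str) -> str:
    return f"[spoken] {answer}"  # a real system would call a text-to-speech engine


def run_vui(audio: str) -> Optional[str]:
    if not stage1_activate(audio):
        return None  # stay idle: no wake phrase, no processing
    text = stage2_transcribe(audio)
    intent = stage3_intent(text)
    return stage5_respond(stage4_query(intent, text))


print(run_vui("Hey Galaxy, what's the weather"))  # [spoken] Sunny, 18 degrees
print(run_vui("what's the weather"))              # None
```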

Why has it taken so long for embedded recognizers to replace buttons at Stage 1?

Speech recognition has traditionally relied on button-press activation rather than voice activation. The main reason is that buttons, although distracting, are reliable and responsive, even in noisy environments such as a car or a busy home, which can be challenging for speech recognizers. A voice trigger must elicit a response in a car (with windows down, radio on, and road noise) or in a home (with babies crying, music or TV playing, and appliances running) without the user having to work for it. Until recently, speech technologies were reliable only when users were in a quiet environment with the mic close to their mouths.
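The benefit of a close mic can be quantified with signal-to-noise ratio (SNR) in decibels, 20·log10 of the ratio of speech RMS to noise RMS. The sample values below are synthetic: moving the mic away is modeled as a fivefold drop in speech amplitude while the noise level stays the same.

```python
import math
from typing import Sequence


def rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of a block of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def snr_db(speech: Sequence[float], noise: Sequence[float]) -> float:
    """Signal-to-noise ratio in dB: 20 * log10(rms(speech) / rms(noise))."""
    return 20 * math.log10(rms(speech) / rms(noise))


noise = [0.05, -0.05] * 100      # synthetic background noise
close_mic = [0.5, -0.5] * 100    # speech picked up near the mouth
far_mic = [0.1, -0.1] * 100      # same speech, five times weaker at a distant mic

print(round(snr_db(close_mic, noise), 1))  # 20.0
print(round(snr_db(far_mic, noise), 1))    # 6.0
```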

The requirement for a fast response further complicates this challenge. Speech recognizers often need hundreds of milliseconds just to determine whether the user has finished talking before they start processing the speech. Such a delay might be acceptable when a recognizer is producing an answer or reply for the consumer. At Stage 1, however, the activation’s job is to call up a more sophisticated recognizer at Stage 2, and consumers will not accept a delay much longer than the time it takes to press a button. The longer the delay, the more likely a recognition failure at Stage 2 becomes, because users might start talking before the Stage 2 recognizer is ready to listen.
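The end-of-speech delay comes from waiting for a run of silent frames before declaring that the user has stopped talking. The energy-threshold detector below is a simple sketch; the threshold, the 30-frame hangover, and the 10 ms frame size are assumptions, and real endpointers are considerably more sophisticated.

```python
from typing import List, Optional


def find_endpoint(frame_energies: List[float],
                  threshold: float,
                  hangover_frames: int) -> Optional[int]:
    """Return the frame index at which end-of-speech is declared, or None.

    Speech is considered finished only after `hangover_frames` consecutive
    frames fall below `threshold`; that wait is the delay before processing.
    """
    heard_speech = False
    silent_run = 0
    for i, energy in enumerate(frame_energies):
        if energy >= threshold:
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            silent_run += 1
            if silent_run == hangover_frames:
                return i
    return None


FRAME_MS = 10  # assumed frame shift
energies = [0.1] * 5 + [1.0] * 40 + [0.1] * 50  # synthetic: 400 ms of speech
end = find_endpoint(energies, threshold=0.5, hangover_frames=30)
assert end is not None
last_speech = 5 + 40 - 1
print(end, (end - last_speech) * FRAME_MS)  # 74 300
```

With these assumed settings, the detector waits 300 ms after the last speech frame before handing off, which is the kind of delay a button press does not have.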

Recent advances in embedded speech technology, such as Sensory’s TrulyHandsfree Voice Interface, deliver true VUIs that don’t require touching the device. These technologies have overcome the problems inherent in noisy environments as well as long response times, making voice activation feasible, accurate, and more convenient.

The future of speech in consumer electronics

Many years ago, TV viewers had to get up and walk over to their units to change the channel. The arrival of the remote control put an end to all this, and today nobody would buy a TV without a remote. Nevertheless, we still get up and walk over to most of our computing devices to use them. As speech recognition improves, this will no longer be necessary.

The rapidly growing use of hands-free devices with voice triggers will lead to intelligent devices that listen to what we say and decide when it’s appropriate to go from the client to the cloud. They’ll also decide when and how to respond, potentially evolving into assistants that sit in the background, listening to everything and deciding when to offer assistance.

Sensory, Inc. 408-625-3300 LinkedIn: www.linkedin.com/company/sensory-inc. Twitter: @SensoryInc www.sensoryinc.com