How Six Companies Are Using AI to Accelerate Automotive Technology

July 08, 2020


AI is not only used for path planning and obstacle avoidance, but incorporated in every step of development from modeling how systems will perform on the road to gathering parts for manufacturing.

The automotive sector has adopted many AI innovations that are used not only for vehicle path planning and obstacle avoidance, but in every step of development, from gathering parts for manufacturing, to testing software, to modeling how these systems will function on the road.

For example, BMW Group uses AI in its factories with tools like the NVIDIA Isaac Robotics 2020.1 platform, a software “toolbox” comprising the Isaac SDK and Isaac Sim that accelerates the development and deployment of deep learning-enabled machines.

According to Hanns Huber of the Communications Production Network at BMW Group, the German automaker was forced to investigate the use of AI technology because its annual car sales have doubled in the past decade, and each of the car models it manufactures comes with an average of 100 different options. To support this demand, millions of car parts are imported to its factories on a daily basis.

To synchronize all of these variables more accurately and efficiently, BMW employs AI-powered logistics robots called Smart Transport Robots (STRs) (Figure 1). STRs use a number of deep neural networks for functions such as perception, segmentation, and human pose estimation to help them make sense of their environment, all of which are modeled within the Isaac Robotics environment.

BMW uses these STRs, along with four other material handling robots (PlaceBot, SplitBot, PickBot, and SortBot), to assemble custom vehicles on the production line, identify objects such as forklifts and humans, and autonomously transport materials throughout its factories (Figure 2). The robots are also able to learn from their environment and respond differently to the people and objects they encounter.

According to NVIDIA’s David Pinto, Isaac is “helping companies like BMW Group address logistical challenges with an end-to-end system: comprehensive tools, libraries, reference applications, and pre-trained deep neural networks.”

Pinto went on to explain that Isaac helps BMW simplify the deployment and management of AI-driven features like perception, navigation, and manipulation that bring its autonomous logistics robots to life. The Isaac platform's value proposition to a company like BMW includes:

- Isaac is a standardized reusable platform for all robotics and autonomous systems.

- Isaac reduces development, testing, and deployment cycle time.

- Isaac provides control over the development pace and roadmap without depending on a third party.

- Isaac reduces the overall cost of robotics hardware and software.

- Isaac offers GPU-accelerated computing at the edge that enables more compute-intensive features to enhance robot capabilities.

Huber confirmed the last point, stating that “the AGX Xavier has been deployed in BMW Group logistics robots.”

“The BMW Group Logistics innovation team is also using the EGX platform to train our robots on the simulation before deploying them in our facilities,” he added.

AI: The Developer’s Assistant

Of course, systems like automotive manufacturing robots must be thoroughly tested to ensure that they meet safety and security standards. And as it turns out, AI and ML are being used to help automate the testing of increasingly complex software stacks as well.

Parasoft’s C/C++test, a software test automation product, is currently being used in the automotive sector to reduce the many hours traditionally spent analyzing complex test vectors. In use, the tool assists test engineers by reviewing thousands of lines of code for violations and learning about the decisions a programmer made during development. After assessing any issues, a machine learning engine prioritizes the most significant violations and finds potential solutions for them (Figure 3).

According to Ricardo Camacho, Product Marketing Manager at Parasoft, “the latest version of Parasoft C/C++test introduced the first capability in this area, leveraging techniques from our deep code analysis to generate the data needed to train the engine how to exercise the code and achieve the desired coverage.”

It is important to note that the use of AI and ML in Parasoft’s test automation is only designed to assist the developer, and does not make any final decisions. In fact, some organizations have even turned to regression testing to ensure the AI software is not able to make decisions on its own and does not focus on areas outside of the primary design.

As Camacho points out, this application of the technology is still in its infancy despite its massive potential.

“There will need to be a change in how we determine the pass/fail status of a test. And how we measure completeness of testing when the behavior is designed to change over time,” he said.

Additional Eyes on the Road

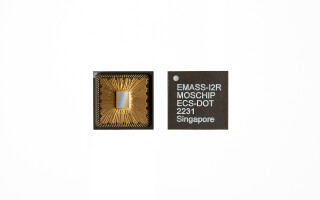

In addition to assisting with vehicle development and manufacturing, AI and machine learning are also keeping an eye on currently deployed systems. For instance, UltraSoC collaborated with PDF Solutions earlier this year on an analytics technology that helps identify and prevent automotive chip failures before they happen.

In action, the PDF Solutions platform houses origin records for chips in a deployed vehicle that are outfitted with UltraSoC’s hardware-based “smart” behavior monitors. The PDF Solutions platform stores everything about these electronic components, including the PCB they are mounted on, how they are positioned, where and when they were manufactured, and so on.

UltraSoC CAN and Bus Sentinels continuously watch transactions that occur over the internal buses or network on a chip (NoC) of these automotive semiconductors to analyze how a chip’s functional blocks perform over time. This analysis includes inspecting instructions carried out by the CPU, as well as probing the behavior of custom logic. The hardware behavior monitors can also be programmed to check for specific conditions, such as an increase or decrease in the number of transactions between two blocks, changes in latency, attempted access to unauthorized areas of the chip, and any other abnormal behavior patterns.
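To make the kinds of programmable checks described above more concrete, here is a minimal software sketch in Python. It is purely illustrative: the real monitors are hardware blocks, and the `Transaction` record, the `check_window` function, the baseline values, and the 50 percent tolerance are all assumptions invented for this example, not part of any UltraSoC or PDF Solutions interface.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Transaction:
    """One observed bus transaction (hypothetical record layout)."""
    source: str        # initiating block, e.g. "cpu"
    target: str        # receiving block, e.g. "dma"
    address: int       # address that was accessed
    latency_ns: float  # observed completion latency


def check_window(window, baseline_count, baseline_latency_ns,
                 allowed_ranges, tolerance=0.5):
    """Return a list of alert strings for one observation window.

    allowed_ranges maps a source block to a list of (low, high) address
    ranges it may access; high is exclusive. tolerance is the fractional
    deviation from baseline that triggers an alert (assumed value).
    """
    alerts = []

    # Condition 1: transaction count rises or falls sharply.
    count = len(window)
    if abs(count - baseline_count) > tolerance * baseline_count:
        alerts.append(
            f"traffic: {count} transactions vs. baseline {baseline_count}")

    # Condition 2: average latency drifts away from the baseline.
    if window:
        avg = mean(t.latency_ns for t in window)
        if abs(avg - baseline_latency_ns) > tolerance * baseline_latency_ns:
            alerts.append(
                f"latency: average {avg:.1f} ns vs. baseline "
                f"{baseline_latency_ns:.1f} ns")

    # Condition 3: a block touches an address outside its permitted ranges.
    for t in window:
        permitted = allowed_ranges.get(t.source, [])
        if not any(low <= t.address < high for low, high in permitted):
            alerts.append(
                f"access: {t.source} touched unauthorized address "
                f"{t.address:#x}")

    return alerts
```

In a flow like the one described here, alerts of this kind would be the raw findings passed upstream for correlation with a part's manufacturing history.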

These findings are relayed to the PDF Solutions machine learning platform, which is able to use historical part data to determine whether a given component is faulty and alert developers of devices that are not functioning properly. System designers/manufacturers can then take action ranging from over-the-air firmware updates to recalls that can reduce costs, increase safety, and protect a brand’s reputation.

UltraSoC Sentinels can also be configured as on-chip security modules that instantaneously block suspicious transactions over a chip’s internal buses.

Beyond reacting to phenomena occurring in deployed vehicles, however, the true value of feedback loops enabled by technologies like UltraSoC’s hardware monitors lies in the ability to inform future designs. For this reason, Siemens recently acquired UltraSoC and will integrate the organization into the Mentor Tessent portfolio of test, debug, and yield analysis offerings.

Accelerating Simulation and Modeling

As vehicles become more complex, so too do the simulation and modeling techniques needed to design them. For instance, today’s automotive simulation and modeling tools must be able to generate high-fidelity driving scenarios that place precise representations of an entire vehicle into interactive, 3D environments that mimic the real world.

According to David Fritz, Senior Autonomous Vehicle SoC Leader at Mentor, advances in these tools have helped reduce the percentage of potential bugs found later in the development cycle. However, modeling variables and driving scenarios that accurately represent the physics of the real world is so complex that simulations are often unable to replicate exactly how a real car would function in certain conditions. As a result, it’s still difficult for automotive engineers to determine how a vehicle actually performs before the end of the design cycle when it is realized in physical hardware.

In an effort to bring pre-silicon testing closer to reality, Siemens has developed PAVE360, a comprehensive automotive validation environment that leverages the company’s physics-based Simcenter Prescan simulation tool and digital twin technology. The digital twin component of the platform accepts CAD design files that provide a precise virtual representation of every component within a vehicle, right down to the chassis design, control subsystems, safety sensors, and semiconductor IP.

The platform can be used to model and test deterministic and AI-based self-driving technologies (Video 1).

When leveraging the PAVE360 platform, automotive engineers use Simcenter Prescan to generate physics-based environments in which various driving scenarios can be simulated. Prescan supports scenarios with the typical actors and objects seen on the road, such as other vehicles, animals, pedestrians, cyclists, etc. These virtual actors are programmed to behave as their physical counterparts would to create scenarios that are realistic and dynamic.

When running a simulation, sensor data is passed through virtual integrated circuits of the vehicle’s digital twin, and then on to modeled ECUs that run production software stacks. This control software reacts to the simulated sensor data by sending commands to vehicle domains such as the transmission, steering, and brakes. Virtual control sensors and actuators then execute these commands, completing the response cycle.
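The sense, decide, actuate cycle described above can be sketched as a toy closed loop in Python. This is not PAVE360 or Simcenter Prescan code; every name here (`VehicleState`, `virtual_sensor`, `EmergencyBrakeController`, `virtual_actuator`, `run_scenario`) and every numeric value is a hypothetical stand-in, and the "vehicle" is reduced to a point moving along a straight line toward a stationary obstacle.

```python
from dataclasses import dataclass


@dataclass
class VehicleState:
    position_m: float
    speed_mps: float


def virtual_sensor(state, obstacle_position_m):
    """Simulated range sensor: distance to the obstacle ahead."""
    return obstacle_position_m - state.position_m


class EmergencyBrakeController:
    """Stand-in for control software running on a modeled ECU."""

    def __init__(self, max_decel_mps2=8.0, margin_m=5.0):
        self.max_decel_mps2 = max_decel_mps2
        self.margin_m = margin_m
        self.engaged = False  # latched once braking starts

    def step(self, distance_m, speed_mps):
        """Return a brake command: 0.0 (off) or 1.0 (full braking)."""
        stopping_distance = speed_mps ** 2 / (2 * self.max_decel_mps2)
        if distance_m <= stopping_distance + self.margin_m:
            self.engaged = True
        return 1.0 if self.engaged else 0.0


def virtual_actuator(state, brake_cmd, dt_s, max_decel_mps2=8.0):
    """Apply the brake command and advance the vehicle by one time step."""
    speed = max(0.0, state.speed_mps - brake_cmd * max_decel_mps2 * dt_s)
    return VehicleState(state.position_m + speed * dt_s, speed)


def run_scenario(initial_speed_mps, obstacle_position_m,
                 dt_s=0.01, max_steps=10_000):
    """Run the loop until the vehicle stops or max_steps is reached."""
    state = VehicleState(0.0, initial_speed_mps)
    ecu = EmergencyBrakeController()
    for _ in range(max_steps):
        distance = virtual_sensor(state, obstacle_position_m)  # sense
        brake = ecu.step(distance, state.speed_mps)             # decide
        state = virtual_actuator(state, brake, dt_s)            # actuate
        if state.speed_mps == 0.0:
            break
    return state
```

Calling `run_scenario(20.0, 100.0)` starts the vehicle at 20 m/s with an obstacle 100 m ahead; the returned state can then be checked against a pass criterion such as stopping short of the obstacle. A production environment replaces each of these functions with far higher-fidelity models, but the loop structure is the same.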

The platform can be used to model the interplay between various subsystems and real-world environments, across multiple use cases, and at varying levels of fidelity. It’s difficult to imagine a more realistic representation of road performance without having an actual test vehicle (Video 2).

Fritz and his team are currently working on scenarios that give a self-driving vehicle’s onboard AI full decision-making power – for example, in choosing whether to brake or veer out of a lane to avoid an accident. The challenge here is modeling how the AI’s decisions affect the behavior of actors and objects around the vehicle, and vice-versa.

Similar problems are being addressed by engineers at MathWorks, who are deploying AI to recreate various driving scenarios from pre-recorded sensor data gathered during real test drives. Avinash Nehemiah, Principal Product Manager of Autonomous Systems at the company, explained that this allows high-fidelity simulation models to be approximated so that they can run more quickly, and also permits a broader range of test cases than could be generated in the real world (Figure 4).

“AI can be used to detect and extract the positions of surrounding vehicles from the camera and lidar data. This information, combined with GPS data, is used to reproduce the traffic conditions in a simulated environment,” said Nehemiah. “Creating this virtual scenario allows engineers to vary aspects to the scenario like the speed and trajectories of surrounding vehicles to create test case variations that would be difficult to reproduce in the real-world.”
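As a rough illustration of the variation step Nehemiah describes, the Python sketch below takes one recorded trajectory for a surrounding vehicle and produces a grid of test-case variants by replaying it at different speeds and lateral offsets. It is not MATLAB or MathWorks toolbox code; the `Waypoint` type, the function names, and the parameter values are assumptions made up for this example.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Waypoint:
    time_s: float  # time at which the vehicle reaches this point
    x_m: float     # longitudinal position
    y_m: float     # lateral position


def vary_trajectory(recorded, speed_scale, lateral_offset_m):
    """Replay the same path faster or slower, shifted sideways.

    Dividing each timestamp by speed_scale makes the vehicle cover the
    same path in less time (scale > 1) or more time (scale < 1).
    """
    if speed_scale <= 0:
        raise ValueError("speed_scale must be positive")
    return [
        Waypoint(w.time_s / speed_scale, w.x_m, w.y_m + lateral_offset_m)
        for w in recorded
    ]


def generate_variations(recorded, speed_scales, lateral_offsets_m):
    """Build one variant per (speed_scale, lateral_offset) combination."""
    return {
        (scale, offset): vary_trajectory(recorded, scale, offset)
        for scale, offset in product(speed_scales, lateral_offsets_m)
    }
```

For example, `generate_variations(recorded, [0.5, 1.0, 2.0], [-1.0, 0.0])` yields six variants of a single recorded drive, including the unmodified original at `(1.0, 0.0)`.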

Nehemiah went on to cite camera-based vehicle detection, vehicle classification using lidar point clouds, and leveraging generative adversarial networks to convert recorded daytime video to nighttime video as examples of such workflows.

The MATLAB R2020a release added support for lidar sensor outputs that can be leveraged in the simulation of high-level driving automation systems. The latest release also added support for single shot detectors (SSDs) – which are used to detect vehicles, pedestrians, and lane markers – to GPU Coder, and improved the ability to target the NVIDIA Drive platform using the GPU code generation tool.

Putting AI in Drive

BMW, NVIDIA, Parasoft, UltraSoC, Siemens, and MathWorks are all using different AI capabilities to help advance the automotive technology sector. In doing so, they are able to achieve cost efficiencies, power savings, increased safety, and faster development.

From simulating next-generation systems to assembling them on the manufacturing floor, automotive AI is already in “drive.”