ML-EXray: A Cloud-to-Edge Deployment Validation Framework

March 03, 2022

Blog

The increasing deployment of embedded AI and ML at the edge has introduced new performance variations between cloud and edge. Despite the sometimes abrupt drop in AI execution performance on edge devices, the adoption of TinyML is a way forward.

The key challenges in this process are identifying potential issues during edge deployment and the limited visibility into ML inference execution. To address this, a group of researchers from Stanford University proposed an end-to-end framework that provides visibility into layer-level ML execution and analyzes cloud-to-edge deployment issues.

ML-EXray, a cloud-to-edge deployment validation framework, is designed to examine model execution in edge ML applications by logging intermediate outputs and replaying the same data through a reference pipeline. It then compares the performance difference and per-layer output discrepancies, and lets users write custom functions to verify model behavior.
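As a rough illustration of what logging intermediate outputs involves, the sketch below records each layer's output for every inference and serializes the result so that a second run (for example, the reference pipeline) can be compared against it. The `LayerLogger` class, its methods, and the JSON Lines format are hypothetical and are not ML-EXray's actual API; real tensors are simplified to flat lists of floats.

```python
import json
from typing import Dict, List


class LayerLogger:
    """Hypothetical per-layer logger; NOT the real ML-EXray API.

    Records the output of each layer for every inference so that an edge
    run and a reference run can later be compared layer by layer.
    """

    def __init__(self) -> None:
        # One dict per inference: layer name -> flattened output values.
        self.frames: List[Dict[str, List[float]]] = []
        self._current: Dict[str, List[float]] = {}

    def log(self, layer: str, values: List[float]) -> None:
        # Store a copy so later in-place changes to the buffer are not captured.
        self._current[layer] = list(values)

    def end_inference(self) -> None:
        # Close the current inference and start a fresh frame.
        self.frames.append(self._current)
        self._current = {}

    def dumps(self) -> str:
        # Serialize as JSON Lines: one inference per line.
        return "\n".join(json.dumps(frame, sort_keys=True) for frame in self.frames)

    @staticmethod
    def loads(text: str) -> List[Dict[str, List[float]]]:
        # Parse a JSON Lines log back into per-inference dicts.
        return [json.loads(line) for line in text.splitlines() if line]


# Usage: log two layers for one inference, then round-trip the log.
logger = LayerLogger()
logger.log("conv1", [0.1, 0.2])
logger.log("softmax", [0.9, 0.1])
logger.end_inference()
restored = LayerLogger.loads(logger.dumps())
```

Keeping one record per inference makes it straightforward to pair the edge log with the reference log for the same input during replay.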

The results show that ML-EXray can identify issues such as pre-processing bugs, quantization issues, and suboptimal kernels. With fewer than 15 lines of code, ML-EXray can examine the edge deployment pipeline and restore model performance by up to 30%. The framework also guides users in optimizing kernel execution latency by two orders of magnitude.

[Image Credit: Research Paper]

The debugging framework consists of three parts:

- A cross-platform API for instrumenting and logging ML inference at the edge and in the cloud
- A reference pipeline for data playback and for establishing baselines
- A deployment validation framework to detect problems and analyze their root causes

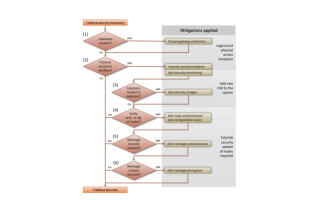

For custom logs and user-defined verification, ML-EXray provides an interface for writing custom assertion functions. The generic deployment validation flow is straightforward: ML-EXray takes the logs from the instrumented application and from the reference pipeline. The dataset is run through the applications before the framework is applied.
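The custom assertion interface can be pictured as a registry of user-defined checks that run over paired edge and reference logs. In this minimal sketch, `register`, `run_assertions`, the `same_output_length` check, and the dict-based log format are all hypothetical stand-ins, not ML-EXray's real interface.

```python
from typing import Callable, Dict, List

# Hypothetical log format: layer name -> flattened output values.
Log = Dict[str, List[float]]
Assertion = Callable[[Log, Log], bool]

# Global registry of user-defined assertions, keyed by name.
ASSERTIONS: Dict[str, Assertion] = {}


def register(name: str) -> Callable[[Assertion], Assertion]:
    """Decorator that adds a user-defined assertion to the registry."""
    def wrap(fn: Assertion) -> Assertion:
        ASSERTIONS[name] = fn
        return fn
    return wrap


@register("same_output_length")
def same_output_length(edge: Log, ref: Log) -> bool:
    # Every layer logged by the reference must also be logged on the edge
    # side, with the same number of elements.
    return all(layer in edge and len(edge[layer]) == len(vals)
               for layer, vals in ref.items())


def run_assertions(edge: Log, ref: Log) -> List[str]:
    """Run every registered assertion and return the names that failed."""
    return [name for name, fn in ASSERTIONS.items() if not fn(edge, ref)]
```

For example, `run_assertions({"fc": [1.0]}, {"fc": [1.0, 2.0]})` returns `["same_output_length"]` because the edge log is missing an output element. A decorator-based registry lets users add checks without modifying the code that runs them.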

Once the framework is applied to both pipelines, an accuracy match is performed: it checks for an accuracy drop and, if one is found, inspects layer-level details to locate the discrepancy. Once the discrepancy is detected, the registered assertion functions are used for root-cause analysis.
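The accuracy-match step followed by layer-level localization can be sketched as follows. Assumptions: predictions and labels are integer class IDs, each log maps layer names to flat output lists, and a layer is flagged when its normalized RMSE against the reference exceeds a tolerance. The metric, the thresholds, and every function name here are illustrative choices, not necessarily what ML-EXray uses.

```python
import math
from typing import Dict, List, Optional, Sequence

# Hypothetical log format: layer name -> flattened output values.
Log = Dict[str, List[float]]


def accuracy(preds: Sequence[int], labels: Sequence[int]) -> float:
    # Fraction of predictions that match the labels.
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def normalized_rmse(a: Sequence[float], b: Sequence[float]) -> float:
    # RMSE between edge and reference outputs, scaled by the reference range
    # so that layers with different magnitudes can share one threshold.
    rmse = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(b))
    span = max(b) - min(b)
    return rmse / span if span > 0 else rmse


def first_divergent_layer(edge: Log, ref: Log, layer_order: List[str],
                          tol: float = 0.05) -> Optional[str]:
    # Walk the layers in execution order and report the first one whose
    # output differs from the reference by more than `tol`.
    for layer in layer_order:
        if normalized_rmse(edge[layer], ref[layer]) > tol:
            return layer
    return None


def validate(edge_preds: Sequence[int], ref_preds: Sequence[int],
             labels: Sequence[int], edge: Log, ref: Log,
             layer_order: List[str], max_drop: float = 0.01) -> Optional[str]:
    # Step 1: accuracy match between the edge app and the reference pipeline.
    drop = accuracy(ref_preds, labels) - accuracy(edge_preds, labels)
    if drop <= max_drop:
        return None  # accuracies match; nothing to localize
    # Step 2: localize the discrepancy at layer level.
    return first_divergent_layer(edge, ref, layer_order)


# Usage: the edge run diverges at "fc" and misclassifies both samples.
ref_log = {"conv1": [0.0, 1.0], "fc": [0.2, 0.8]}
edge_log = {"conv1": [0.0, 1.0], "fc": [0.7, 0.3]}
suspect = validate([0, 0], [1, 1], [1, 1], edge_log, ref_log, ["conv1", "fc"])
```

Walking the layers in execution order matters because an error introduced early (for example, in pre-processing) propagates into every later layer, so the first divergent layer is the most useful place to start looking.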

The evaluation table posted by the researchers shows the tasks, models, and assertions involved. The framework is applied to a wide range of tasks to identify deployment issues across multiple dimensions, including input processing, quantization, and system performance. For example, debugging a pre-processing issue took four lines of code (LoC) with ML-EXray, compared with 25 without it. Before closing the discussion of this debugging framework, let us recap the main contributions of the research.
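A pre-processing assertion can be short because it only has to compare one logged tensor against its reference. The hypothetical check below, which is not the assertion from the paper, flags a common bug: an edge app feeding raw pixel values in [0, 255] to a model whose reference pipeline normalizes inputs to [-1, 1]. The `"input"` log key and the tolerance are assumptions.

```python
from typing import Dict, List

# Hypothetical log format: layer name -> flattened output values.
Log = Dict[str, List[float]]


def assert_input_range_matches(edge: Log, ref: Log,
                               key: str = "input", tol: float = 1e-3) -> bool:
    # Hypothetical pre-processing check: the model input logged on the edge
    # should span the same value range as the reference input.
    e, r = edge[key], ref[key]
    return abs(min(e) - min(r)) <= tol and abs(max(e) - max(r)) <= tol


# Usage: a reference input normalized to [-1, 1] vs. an unnormalized edge input.
ref_log = {"input": [-1.0, 0.0, 1.0]}
edge_bug = {"input": [0.0, 127.5, 255.0]}
edge_ok = {"input": [-1.0, 0.0, 1.0]}
```

Here `assert_input_range_matches(edge_bug, ref_log)` returns `False`, pointing at missing normalization, while the correctly pre-processed input passes.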

[Image Credit: Research Paper]

To summarize, ML-EXray brings the following innovations to edge AI deployment for identifying malfunctions and processing errors:

- A suite of instrumentation APIs and a Python library that offer visibility into layer-level details on edge devices for mission-critical applications.
- An end-to-end edge deployment validation framework that gives users an interface to design custom functions for verification and examination.
- A design that detects various deployment issues in industrial setups that degrade ML execution performance.

For more details on the research methodology, see the open-access research paper.