The Path to AI at the Edge

February 22, 2021


We’ve been hearing about the benefits of Edge computing, and of the ability to perform AI at the Edge, for quite some time.

Many refer to this technology as AIoT. With so much to gain from it, you’d think it would be omnipresent. There’s a reason it isn’t, at least not yet: many challenges still need to be solved and, frankly, solving them is not easy.

The key difference between AI at the Edge and AI in the Cloud is that the Edge is where the data is generated and where the action takes place, should an action be required. Edge-based AI suits applications that simply can’t afford to wait for data to travel to the Cloud and back. In general, the closer the processing is to the Edge, the faster the response. 5G makes Cloud round trips more tenable, but in some cases it may still not be fast enough for applications such as autonomous driving or medical devices.
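The latency argument above can be sketched as a simple budget check: the time to an actionable result is roughly the network round trip to wherever inference runs plus the inference time itself, and that total must fit within the application’s deadline. This is a minimal illustration, not a performance model. The `Deployment` class, the `meets_deadline` helper, and every number below are hypothetical, chosen only to show the trade-off, and are not measurements of any real system.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """A hypothetical place to run inference; all values are illustrative."""
    name: str
    network_rtt_ms: float  # round trip between the device and the compute location
    inference_ms: float    # time to run the model at that location

    def response_ms(self) -> float:
        # Simplified total: network round trip plus model execution time
        return self.network_rtt_ms + self.inference_ms


def meets_deadline(d: Deployment, deadline_ms: float) -> bool:
    # True if the response arrives within the application's time budget
    return d.response_ms() <= deadline_ms


# Hypothetical numbers: a slower local processor with no network hop
# versus faster Cloud hardware behind a network round trip
edge = Deployment("edge", network_rtt_ms=0.0, inference_ms=15.0)
cloud = Deployment("cloud", network_rtt_ms=60.0, inference_ms=5.0)
deadline_ms = 20.0

for d in (edge, cloud):
    print(d.name, d.response_ms(), meets_deadline(d, deadline_ms))
```

With these assumed figures, the Edge deployment finishes in 15 ms and meets the 20 ms budget, while the Cloud deployment takes 65 ms despite its faster inference, because the round trip alone exceeds the deadline.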

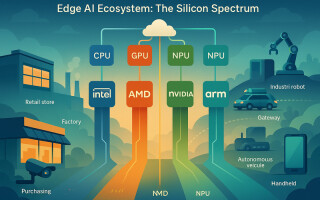

For designers of Edge AI platforms, the recent glut of processor choices has made the decision more difficult. You have to choose between CPUs (central processing units), GPUs (graphics processing units), and VPUs (vision processing units). And now Google has added TPUs (tensor processing units) to the mix.

CPUs excel at heavy-duty sequential operations, while GPUs are better suited to running many smaller tasks in parallel. Because their strengths are complementary, the best solution for AIoT equipment housed at the Edge is often a combination of CPUs and GPUs, and it’s vital that these resources operate in sync.

As Zane Tsai, director of the platform product center at ADLINK Technology, stated in a recent podcast, so many factors are involved that there is no “one size fits all” solution; not even close. “Look at the algorithms you need to run, the performance that’s needed, like how fast do you need a response, and your design budget,” says Tsai. “The answers to those questions will help determine which solution is right for your design.”

Finding the right combination for a specific application requires a lot of trial and error, and vendors are only now gaining the knowledge needed to implement these features and functions. ADLINK, with its growing database and vast body of acquired knowledge, is at the forefront of this technology. The company understands how different processor combinations interact, especially when running the networking, modeling, or deeply embedded AI algorithms that Edge AI applications require. Getting this right is critical: in applications involving heavy machinery, automation, or manufacturing, errors can result in bodily harm or significant revenue loss.