Greening the AI/ML Data Center

May 31, 2024


Next-gen AECs reduce energy consumption and operating expenses while supporting faster data center performance.

Over the next few years, AI/ML data centers must overcome three challenges simultaneously: deliver better performance to meet soaring demand, contain costs while expanding in size and complexity, and continue to increase energy efficiency. Pulling off that trifecta will require technological advances in nearly every part of the data center, including the cables interconnecting AI accelerators, switches, pooled memory systems, smart NICs, and servers.

Data centers have rapidly become essential to daily life and are the backbone of the digital revolution. They power next-gen AI/ML applications that are revolutionizing the economy, and they enable modern conveniences such as streaming real-time videos to our smartphones. Demand for the full range of data center services keeps rising—from cloud service providers’ increasing need for large language model (LLM) training and inference processing to consumers’ never-ending appetite for social media, video streaming, video conferencing, online gaming, and other digital services.

While keeping pace with escalating demand, hyperscalers and enterprises also need data centers to achieve the highest energy efficiency possible, both to contain energy costs and to reduce their carbon footprints. According to the Department of Energy, data centers account for roughly 2% of total U.S. electricity usage, consuming 10 to 50 times more energy per unit of floor space than a typical commercial office building. Data centers and data transmission networks are already responsible for 1% of energy-related greenhouse gas (GHG) emissions, and it is estimated that figure could rise to 8% by 2030.

IT equipment and the cooling system consume up to 90% of the energy in a data center, so naturally there is a significant focus on reducing the power consumption of network devices in a data center rack, including servers, switches, accelerators, and storage systems. However, there is an associated but less-recognized device that is becoming increasingly critical to reducing data center energy consumption while meeting performance demands and minimizing operating expenses and carbon footprint: the active electrical cable (AEC).

Data center evolution and AECs

Today’s data centers largely rely on 400 gigabit (400G) Ethernet network devices that are not fast enough to meet the demands of future AI/ML workloads. As a result, data center operators are ramping up the speed of their networks from 400G to 800G, and soon to 1.6T Ethernet.

The passive copper cables (direct attached cables or DACs) commonly used for 400G cannot support the future requirements of in-rack interconnect. As speeds increase to support 800G transmission, signal loss over the copper wiring in DACs becomes significant, reducing the cable length that can be supported. The cable length can be extended by using thicker copper wire, but that makes the overall DAC too thick. Passive copper has evidently reached its physical limits: it is too thick and bulky, and it can’t support the cable reach required for in-rack interconnect use cases.

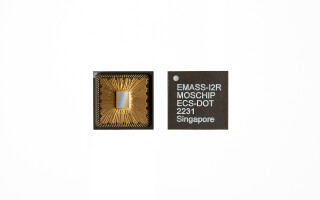

AECs provide a viable alternative to support the interconnect needs of next-generation, high-performance data centers. AECs contain a silicon system-on-chip (SoC) inside the cable assembly, which compensates for the loss that high-speed signals suffer over copper wiring and so improves the performance and reliability of data transmission. In addition to offering more intelligence than passive DACs, AECs extend cable reach up to seven meters for in-rack and adjacent-rack connectivity. AECs can also use a smaller copper wire gauge to reduce cable bulk and weight. Lowering cable bulk also reduces cable congestion in racks, which improves airflow and lowers the energy required for cooling. Given these advantages, AECs are critical to the future of reliable, cost-efficient data center operation.

The value of selecting the right electronics for low-power AECs

While AECs incorporating an SoC can extend cable reach and reduce cable volume, the additional electronics in the cable will consume more power. Adopting the right SoC for lowest-power AECs will lead to savings in several areas. First, and most obviously, low-power AECs reduce the power consumption of the cable device itself, resulting in lower power consumption costs based on the price of power per kilowatt-hour.

Consider, for example, the latest AI/ML GPU rack designs, which might have 60 active cables connecting GPU accelerators to switch fabrics and memory subsystems. As these rack designs transition to 800G speeds, the active cable becomes a significant consumer of energy for the system. A typical 800G AEC incorporating PAM4 DSP will consume about 20W, while a purpose-built mixed-signal interconnect SoC will consume only 11W. That 45% (9W) reduction substantially lowers the energy requirements for the AI/ML GPU rack.
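The rack-level effect of that per-cable difference can be worked out with a few lines of arithmetic. The sketch below is only an illustration: it uses the 60-cable rack and the 20W and 11W figures quoted above, and the function and constant names are hypothetical rather than part of any vendor tool.

```python
# Illustrative figures taken from the article's example rack.
CABLES_PER_RACK = 60            # active cables in the example GPU rack
DSP_AEC_WATTS = 20.0            # typical 800G AEC with a PAM4 DSP
MIXED_SIGNAL_AEC_WATTS = 11.0   # 800G AEC with a purpose-built mixed-signal SoC


def rack_cable_power(cables: int, watts_per_cable: float) -> float:
    """Total power drawn by all active cables in one rack, in watts."""
    return cables * watts_per_cable


def rack_savings(cables: int, baseline_w: float, low_power_w: float) -> tuple[float, float]:
    """Return (watts saved per rack, percentage reduction per cable)."""
    saved_w = rack_cable_power(cables, baseline_w) - rack_cable_power(cables, low_power_w)
    pct = 100.0 * (baseline_w - low_power_w) / baseline_w
    return saved_w, pct


saved_w, pct = rack_savings(CABLES_PER_RACK, DSP_AEC_WATTS, MIXED_SIGNAL_AEC_WATTS)
print(f"Cable power saved per rack: {saved_w:.0f} W ({pct:.0f}% reduction)")
```

Under these assumptions the cables in a single rack draw 660W instead of 1,200W, a saving of 540W per rack before any infrastructure overhead is counted.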

A further advantage of low-power AECs is that they reduce infrastructure energy demands, including energy for the data center’s cooling system, power distribution units, lighting, and other equipment required to sustain IT equipment operation. Any power saved in IT equipment, such as an AEC, also saves the infrastructure energy needed to support it. These savings can be calculated using the power usage effectiveness (PUE) metric used by data center operators, where PUE equals total facility energy usage divided by IT equipment energy usage.

According to the Uptime Institute, the average PUE for data centers in 2023 was 1.58. This means an additional 0.58W of infrastructure energy is required for every 1W used by IT equipment, and for every watt of IT equipment power saved, the same 0.58W of infrastructure energy is saved. Consider the example of the 800G AEC above: in addition to the 9W of power saved by using a purpose-built SoC, an additional 5.2W (9W x 0.58) of infrastructure energy will be saved.
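The PUE relationship can be sketched in code as well. The example below is a minimal illustration that uses the 1.58 average PUE and the 9W per-cable saving from the text. The function names are hypothetical, and the annual energy figure assumes the cable runs continuously for 8,760 hours a year, which is an assumption and not a figure from the article.

```python
PUE_2023_AVERAGE = 1.58     # Uptime Institute average cited in the article
IT_WATTS_SAVED = 9.0        # per-cable saving from the 800G AEC example
HOURS_PER_YEAR = 8760       # assumption: continuous, year-round operation


def infrastructure_savings_w(it_watts_saved: float, pue: float) -> float:
    """Infrastructure power avoided for a given IT power saving.

    PUE = total facility energy / IT equipment energy, so the
    non-IT overhead per IT watt is (PUE - 1).
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_watts_saved * (pue - 1.0)


def total_facility_savings_w(it_watts_saved: float, pue: float) -> float:
    """IT saving plus the infrastructure saving that comes with it."""
    return it_watts_saved * pue


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Convert a constant power draw in watts to energy per year in kWh."""
    return watts * hours / 1000.0


infra_w = infrastructure_savings_w(IT_WATTS_SAVED, PUE_2023_AVERAGE)
total_w = total_facility_savings_w(IT_WATTS_SAVED, PUE_2023_AVERAGE)
print(f"Infrastructure saving per cable: {infra_w:.1f} W")
print(f"Total facility saving per cable: {total_w:.1f} W")
print(f"Energy saved per cable per year: {annual_kwh(total_w):.1f} kWh")
```

With these inputs, each cable saves about 5.2W of infrastructure power on top of the 9W saved directly, or roughly 14.2W at the facility level. Multiplying the resulting annual energy by a local price per kilowatt-hour gives an estimate of the cost saving.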

Conclusion

For AI/ML data centers, active electrical cables that connect GPU accelerators, network switches, and memory systems within the same rack and adjacent racks are becoming a significant part of the power equation. AECs based on purpose-built, mixed-signal SoCs are thinner and lighter than DACs and offer longer reach, while also improving energy efficiency and cost compared to AECs built with a typical PAM4 DSP.

As AECs become critical infrastructure, selecting the SoC that delivers the lowest power consumption will enable hyperscalers and enterprises to scale the performance of their installations while improving energy efficiency and reliability. Reducing energy use will lower operating costs and give AI/ML data centers greater sustainability and a greener footprint in the years to come.

For more information, visit point2tech.com.