Synaptics and Google Partner on Open, Low-Power Endpoint AI

October 15, 2025

Synaptics and Google have joined forces to accelerate the development of low-power, AI-driven edge devices, marking a potentially significant milestone for open, efficient, and scalable compute architectures. The collaboration combines Google Research’s new Coral machine-learning accelerator architecture with Synaptics’ deep expertise in low-power silicon design, and its first result is Synaptics’ SL2610 platform.

According to the companies, the partnership serves a shared goal: democratizing AI at the outer edge, often called the endpoint, through open hardware, open software, and open standards.

Google’s role in this partnership originates from its Systems Research division, which explores novel compute architectures capable of enabling new product experiences across the company. While Google itself won’t be manufacturing silicon anytime soon, it’s not uncommon for the company to partner with vendors like Synaptics to help turn its ideas into tangible products.

“For this architecture, we were looking for a partner with depth in low-power design, a strong IP portfolio, and a genuine enthusiasm for open standards,” explained Billy Rutledge, Director of Engineering for Systems Research at Google Research. “Synaptics checked all those boxes.”

The two companies are co-developing the next generation of embedded neural-processing technology built on Google’s Coral NPU, which is a RISC-V-based architecture designed for sub-10-mW inference workloads. The result will be a family of ultra-low-power processors capable of running modern AI models locally, efficiently, and securely.
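To put a sub-10-mW budget in perspective, the short sketch below converts average power into energy per inference and into battery runtime. The inference rate and the 300 mAh, 3.7 V cell are hypothetical values chosen for illustration, not Coral or SL2610 figures, and the battery estimate assumes the NPU is the only load on the cell.

```python
# Back-of-the-envelope energy budget for an always-on NPU.
# All inputs in the example are hypothetical, not Coral/SL2610 specifications.

def energy_per_inference_mj(avg_power_mw: float, inferences_per_s: float) -> float:
    """Energy per inference in millijoules: E = P / rate (mW divided by 1/s gives mJ)."""
    return avg_power_mw / inferences_per_s


def battery_life_hours(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Runtime in hours if the NPU alone drew avg_power_mw continuously."""
    energy_mwh = capacity_mah * voltage_v  # mAh * V = mWh
    return energy_mwh / avg_power_mw


if __name__ == "__main__":
    # Hypothetical: 10 mW budget, 10 inferences per second, 300 mAh cell at 3.7 V.
    print(energy_per_inference_mj(10.0, 10.0))              # 1.0 (mJ per inference)
    print(round(battery_life_hours(300, 3.7, 10.0), 1))     # 111.0 (hours)
```

Real devices duty-cycle the accelerator and share the battery with sensors, radios, and displays, so this is an upper bound on runtime rather than a prediction.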

The Next Phase of AI Acceleration

The Coral NPU represents Google’s next step in on-device AI acceleration. It builds on lessons from earlier Google initiatives, which demonstrated that neural networks can run on small devices. While those earlier projects targeted IoT and embedded systems, the Coral NPU pushes further, into MCU-class and wearable devices.

The Coral NPU is built on the open RISC-V specification, which is where its openness comes from. It includes a four-stage scalar CPU core for standardized programmability, a 32-bit RISC-V vector engine for performance scaling, and a matrix-core accelerator for modern transformer models. The architecture is modular and configurable, allowing partners like Synaptics to tailor it to their own product lines.
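The scalar/vector/matrix split described above can be illustrated with two small patterns, modeled here in plain Python as a conceptual sketch. The first mimics RISC-V Vector (RVV) strip mining, in which a scalar loop requests a vector length on each iteration so the same code runs on any vector width. The second computes a matrix product as a sum of outer products, one common way a matrix multiply-accumulate unit can build up its results. Neither function reflects the Coral NPU's actual instructions or APIs, and `VLMAX` is a hypothetical parameter.

```python
# Conceptual sketch only: pure-Python models of two patterns a scalar + vector +
# matrix design commonly relies on. Not Coral NPU instructions or APIs.

VLMAX = 8  # hypothetical maximum number of elements per vector operation


def vector_add_stripmined(a, b, vlmax=VLMAX):
    """RVV-style strip mining: each iteration picks vl = min(remaining, vlmax)
    and issues one 'vector' operation on that chunk, so the loop is width-agnostic."""
    assert len(a) == len(b)
    out = [0] * len(a)
    i = 0
    while i < len(a):
        vl = min(len(a) - i, vlmax)  # analogous to the length vsetvli grants
        out[i:i + vl] = [x + y for x, y in zip(a[i:i + vl], b[i:i + vl])]  # one vector op
        i += vl
    return out


def matmul_outer_product(A, B):
    """C = A @ B accumulated as a sum of outer products: for each k, column k of A
    times row k of B is added into C. Python ints stand in for wide accumulators."""
    m, k = len(A), len(A[0])
    k2, n = len(B), len(B[0])
    assert k == k2, "inner dimensions must match"
    C = [[0] * n for _ in range(m)]
    for p in range(k):
        col = [A[i][p] for i in range(m)]  # column p of A
        row = B[p]                         # row p of B
        for i in range(m):
            for j in range(n):
                C[i][j] += col[i] * row[j]  # one outer-product MAC step
    return C


if __name__ == "__main__":
    print(vector_add_stripmined(list(range(10)), [1] * 10))  # [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
    print(matmul_outer_product([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The strip-mined loop never hard-codes the vector width, which is the property that lets one binary scale across vector engines of different sizes.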

Addressing the Fragmentation Tax

One of the major pain points for developers working in embedded AI is what Google calls the “fragmentation tax.” Today’s ecosystem is scattered across dozens of proprietary toolchains and hardware-specific compilers, making it nearly impossible to deploy one AI model across different platforms consistently.

Rutledge explained: “If you build a model in TensorFlow or PyTorch and try to deploy it on 15 different SoCs, you’ll need 15 different toolchains. You’ll get 15 different results, and that’s not easily scalable.”

By adopting a standard RISC-V instruction set and an open compiler framework, the Coral platform aims to remove this barrier. Developers will be able to compile models from TensorFlow, PyTorch, JAX, or ONNX to run on any compatible NPU without proprietary lock-in.

Result of the Partnership: Synaptics’ SL2610

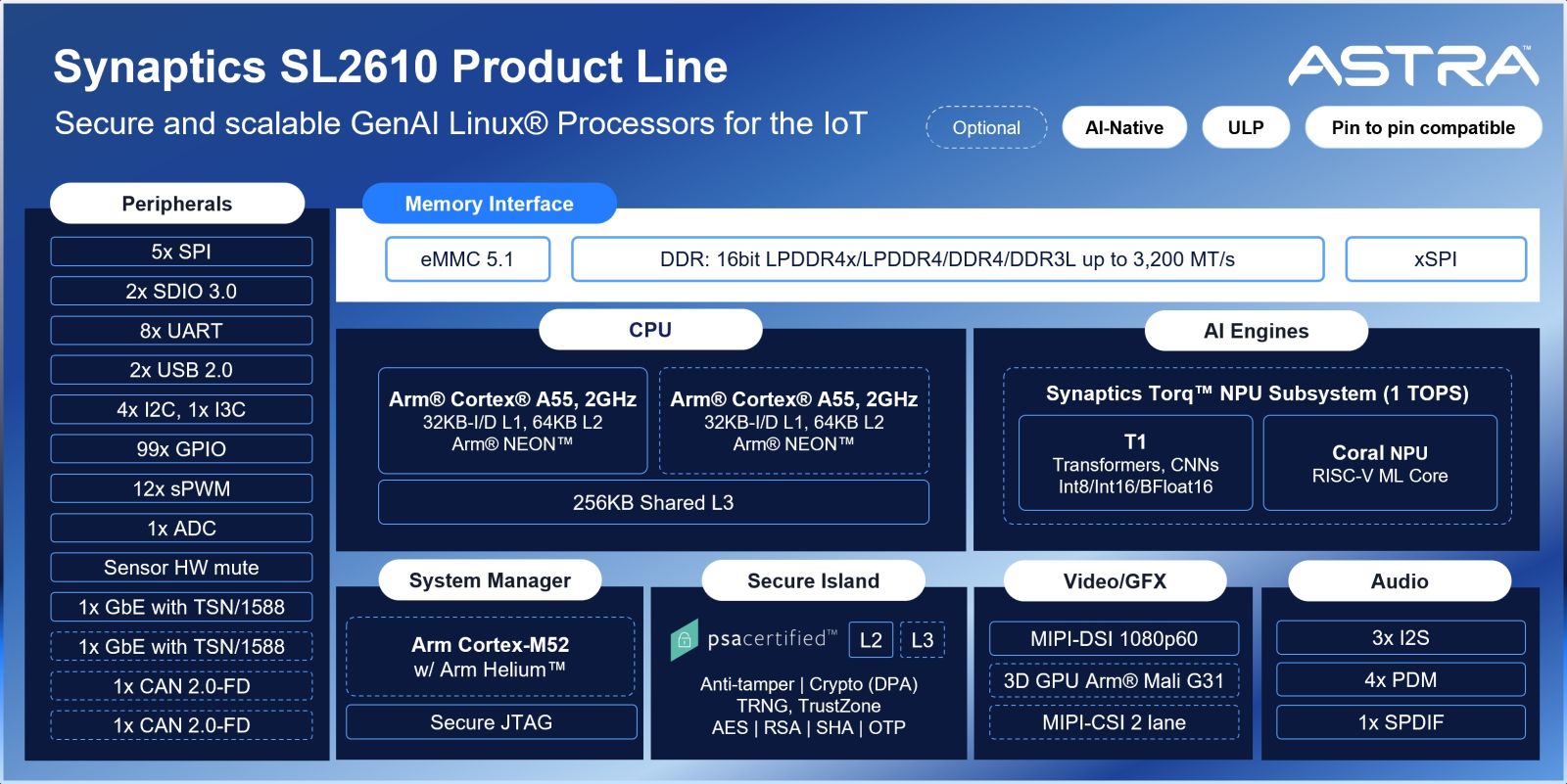

Synaptics’ first product to integrate the Google Coral architecture is the upcoming SL2610, a flagship platform that shows how the new AI subsystem can be applied in real-world silicon. The SL2610 belongs to a broader SL26xx family spanning multiple performance tiers and markets, from consumer wearables to industrial systems. According to Synaptics, the SL2610 integrates two complementary AI engines: the CSS block, based on Google’s Coral NPU core, for flexible, programmable ML acceleration; and the NAS block, a Synaptics-designed fixed-function engine optimized for convolutional and transformer workloads.

Together, these deliver 1 TOPS of AI compute within a low-power envelope. “The beauty of this collaboration is how extensible it is,” said Nebu Philips, a Senior Director at Synaptics. “The Coral design lets us combine our own acceleration blocks with Google’s open IP, so we can tailor the performance, power, and cost balance for each market segment. This isn’t a one-and-done relationship; rather, it’s a multi-generational partnership.”
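A TOPS rating is a peak figure. As a rough sketch, it can be turned into an upper bound on inference rate once a model's compute cost is known. The helper assumes the common convention that one multiply-accumulate (MAC) counts as two operations; the 300-million-MAC model and the 30% utilization are hypothetical numbers, not measurements of the SL2610.

```python
def max_inferences_per_s(tops: float, macs_per_inference: float,
                         utilization: float = 1.0) -> float:
    """Upper bound on inferences per second for a given TOPS rating.

    Assumes 1 MAC = 2 ops (multiply + add), a common convention behind TOPS figures.
    `utilization` is the fraction of peak throughput actually achieved, in (0, 1].
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    ops_per_inference = 2 * macs_per_inference
    return tops * 1e12 * utilization / ops_per_inference


if __name__ == "__main__":
    # Hypothetical model: 300 million MACs per inference on a 1 TOPS engine.
    print(round(max_inferences_per_s(1.0, 300e6)))       # 1667 at 100% utilization
    print(round(max_inferences_per_s(1.0, 300e6, 0.3)))  # 500 at 30% utilization
```

Memory bandwidth, data movement, and operator coverage usually keep real workloads well below peak, which is why the utilization factor matters more than the headline number.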

Beyond AI, the SL2610 is feature-rich. It offers dual CAN, dual Gigabit Ethernet, and TSN networking; an integrated Cortex-M52 MCU for secure boot and power management; and PSA Level 2 and Level 3 security certification. It also includes advanced graphics, video, and display pipelines, making it suitable for both industrial and consumer designs.

Adding CHERI On Top

Security is another cornerstone of the project. Beyond the SL2610’s own security features, Google is integrating CHERI (Capability Hardware Enhanced RISC Instructions), a fine-grained memory protection extension for RISC-V, into future iterations of the Coral architecture. CHERI replaces raw pointers with capabilities that carry bounds and permissions enforced by hardware, enabling fine-grained compartmentalization and isolation that mitigate entire classes of memory-safety vulnerabilities, such as buffer overflows.
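To make the capability idea concrete, here is a small Python model of a CHERI-style capability: a reference that carries bounds and permissions, can only be narrowed, and is checked on every access. It is a teaching sketch; the class and method names (`Capability`, `restrict`, `Memory`, `CapabilityFault`) are hypothetical and do not correspond to the CHERI ISA, its instructions, or its compressed capability encoding.

```python
# Conceptual model of CHERI-style capabilities (hypothetical names, not the CHERI ISA).
from dataclasses import dataclass, replace


class CapabilityFault(Exception):
    """Raised where CHERI hardware would trap on an invalid access or derivation."""


@dataclass(frozen=True)
class Capability:
    base: int
    length: int
    perms: frozenset   # e.g. frozenset({"load", "store"})
    tag: bool = True   # validity tag; an untagged capability cannot be dereferenced

    def restrict(self, offset: int, length: int, perms=None) -> "Capability":
        """Derive a narrower capability; bounds and permissions may only shrink."""
        new_perms = self.perms if perms is None else frozenset(perms)
        if offset < 0 or length < 0 or offset + length > self.length or not new_perms <= self.perms:
            raise CapabilityFault("cannot widen bounds or permissions")
        return replace(self, base=self.base + offset, length=length, perms=new_perms)


class Memory:
    def __init__(self, size: int):
        self.cells = bytearray(size)

    def _check(self, cap: Capability, addr: int, perm: str) -> None:
        # Every access is validated against the capability used to make it.
        if not cap.tag:
            raise CapabilityFault("untagged capability")
        if perm not in cap.perms:
            raise CapabilityFault(f"missing permission: {perm}")
        if not (cap.base <= addr < cap.base + cap.length):
            raise CapabilityFault(f"address {addr} out of bounds")

    def load(self, cap: Capability, addr: int) -> int:
        self._check(cap, addr, "load")
        return self.cells[addr]

    def store(self, cap: Capability, addr: int, value: int) -> None:
        self._check(cap, addr, "store")
        self.cells[addr] = value


if __name__ == "__main__":
    mem = Memory(64)
    root = Capability(base=0, length=64, perms=frozenset({"load", "store"}))
    buf = root.restrict(16, 8)            # 8-byte buffer covering addresses 16..23
    mem.store(buf, 16, 42)
    print(mem.load(buf, 16))              # 42
    try:
        mem.store(buf, 24, 1)             # one past the end: an overflow attempt
    except CapabilityFault as e:
        print("fault:", e)                # fault: address 24 out of bounds
    read_only = buf.restrict(0, 8, {"load"})
    try:
        mem.store(read_only, 16, 0)
    except CapabilityFault as e:
        print("fault:", e)                # fault: missing permission: store
```

The out-of-bounds store faults instead of silently corrupting a neighboring buffer, which is the class of bug CHERI is designed to stop in hardware rather than in software checks.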

“We’ve been evolving CHERI for over a decade,” said Rutledge. “As these devices become more personal, listening to what you say, watching what you do, etc., it’s critical to ensure that user data remains secure at the hardware level.”

What’s In It For the Partners

For Google, the motivation behind this collaboration is not selling chips but extending the reach of Google experiences to billions of edge devices. The more AI-capable wearables, IoT sensors, and smart accessories there are, the greater the opportunity to connect users to Google’s cloud services.

For Synaptics, the partnership provides an opportunity to lead the next wave of low-power AI innovation with Google-grade technology inside. The SL2610 and its successors position the company at the forefront of open, secure, and scalable edge AI.