Edge Management: The Next Big IoT Challenge

April 19, 2021


You now have an application to handle edge/IoT sensor data, make decisions locally, and actuate at the edge. You can even get the data back to your enterprise or cloud safely and affordably with that edge application.

Great! Ah – but now there are looming questions that sit between you and your organization’s victory celebration: How do you get the application to the edge? How do you deploy and orchestrate your solution to the edge at scale? How do you get “the bits to the boxes”?

Our company, like others, started facing this issue a while ago. Our clients wanted to know if we could help get our new solutions to the edge platforms. And once deployed, they wanted to know how to monitor the applications and the platforms. Importantly, the management of these solutions has to be done at edge scale – which means thousands of platform nodes and hundreds of thousands of sensors/devices.

Perhaps like you, we originally looked to partner solutions to assist with this need. We have seen some solutions in this space evaporate – through acquisition or demise. Many attempted to take an enterprise deployment/orchestration solution and make it fit to work at the edge. Neither the architecture nor the business model for these products worked for the opportunity that exists at the edge.

Why edge applications?

A question you may be asking as part of this discussion is, “Why have an edge application at all?” Why not just have your sensors/devices connect to a cloud native application and avoid all this hassle? This is a fair question, and in some use cases this might be a viable solution. I call this kind of solution “sensor to cloud”: a sensor or device connects directly to the cloud, skips the middleman (the edge compute node), and sends all of its data home. There are a few reasons this approach may not work and a few considerations to ponder before adopting it.

First, your edge devices and sensors may not always have the connectivity needed to “phone home” directly to the cloud or your enterprise where you have those cloud native apps running. Legacy devices (think of a 1980s twisted-pair Modbus device) or resource-constrained devices (think of a simple MCU or PLC) may not have the TCP stack necessary to send data directly to the cloud. Or the connectivity may be intermittent. So, are you okay with windows of lost data due to lost connectivity? In these cases, you need the edge app to provide the connection to the cloud and to serve as a store-and-forward apparatus when the data cannot be lost.
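As a rough illustration of the store-and-forward idea, here is a minimal Python sketch. The `StoreAndForward` class and its `send` callback are hypothetical names invented for this example, not part of any product mentioned in this article. The sketch keeps readings in memory and assumes it is acceptable to drop the oldest reading once the buffer is full; a deployment where data truly cannot be lost would more likely persist the queue to local storage.

```python
from collections import deque
from typing import Callable, Deque, Dict

Reading = Dict[str, float]


class StoreAndForward:
    """Buffer readings locally and forward them when the uplink is available."""

    def __init__(self, send: Callable[[Reading], bool], max_buffered: int = 10_000):
        # `send` is a hypothetical uplink function; it returns True on success.
        self._send = send
        # Bounded in-memory queue: when full, the oldest reading is discarded.
        self._buffer: Deque[Reading] = deque(maxlen=max_buffered)

    def submit(self, reading: Reading) -> None:
        """Queue a new reading, then try to drain the buffer oldest-first."""
        self._buffer.append(reading)
        self.flush()

    def flush(self) -> int:
        """Send buffered readings in order and stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # uplink is down; keep the reading for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        """Number of readings still waiting for connectivity."""
        return len(self._buffer)
```

Readings submitted while the uplink is down simply accumulate; the next successful `submit` or `flush` delivers them in their original order.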

Second, do you have time to wait for your cloud or enterprise to provide an answer back down to the edge? The latency in a sensor-to-cloud solution may not be huge (seconds?), but in some use cases, it’s critical – time critical. You don’t want the decision about when to fire the air bag in your car to be made in the cloud. So, if latency and determinism are important elements in your edge use case, a sensor-to-cloud solution will not suffice. You need the decision-making app right there at the edge to fulfill those needs.

Finally, can you afford it? A sensor-to-cloud solution says you are going to haul all the data from the edge to your cloud, no matter how much it costs to ship it there (paying for connectivity), no matter how much it costs to warehouse it while you evaluate its worth (paying for storage), and no matter how much work is involved in sifting through all the data to find valuable information (paying for compute). Your edge applications can help save transportation, storage, and compute cloud costs by weeding out or consolidating the valuable data at the edge and shipping back only what would be needed by the enterprise.
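One way to picture “weeding out or consolidating” data at the edge is shown in the short Python sketch below. The function names, the `Summary` record, and the idea of forwarding one summary per window plus any out-of-range readings are assumptions made for illustration; the right reduction strategy depends on the use case.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Summary:
    count: int
    minimum: float
    maximum: float
    average: float


def summarize(window: List[float]) -> Optional[Summary]:
    """Collapse a window of raw readings into a single summary record."""
    if not window:
        return None
    return Summary(len(window), min(window), max(window), mean(window))


def out_of_range(window: List[float], low: float, high: float) -> List[float]:
    """Return the raw readings outside [low, high]; these are sent in full."""
    return [value for value in window if value < low or value > high]
```

With this approach, a window of many raw readings is shipped as one summary record plus only the readings that fall outside the expected range, rather than as the full raw stream.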

It should be noted that there is a cost to writing edge applications, beyond the edge management challenges that are the focus of this article. Cloud native applications are written so they don’t really care or even know where they are. They are abstracted away from the actual hardware and fabric they live on so that they can be easily moved, scaled, and brought up or down quickly. Edge applications at the farthest extremes typically have to know where they are and what hardware and connectivity they are supporting, and they are rarely, if ever, scaled up or down.

So, if your “smart sensor” can make the connection, and if the latency concerns and data management costs are reasonable for your use case, bypassing both the edge application and edge management issues in favor of cloud native applications and application management can be a reasonable approach. However, if this is not the case, then the need for edge applications is compelling.

We also encounter organizations attempting to use Kubernetes (K8s) to solve their edge deployment/orchestration needs. K8s has become a juggernaut solution in the enterprise and cloud space. One of our customers jokingly said, “I don’t know what the problem is, but the solution is Kubernetes.” Why not just use it to address edge needs? While K8s (or at least some slimmed-down version of it, like K3s, MicroK8s, KubeEdge, etc.) may address some edge deployments, it is often not a perfect fit and may leave you still wanting a better solution. K8s solutions can be big and complex, and they are not always suited to manage all parts of the edge solution.

Deploying and managing edge applications, especially at the “thin” edge, come with some unique requirements. So, what should you look for when searching for an edge management solution?

-

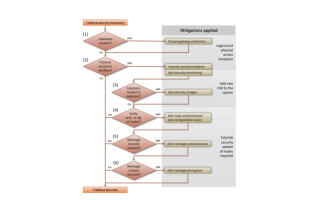

Edge platforms are resource constrained. Memory, CPU and network availability are in vast supply in your enterprise or cloud environments, but not so on all edge platforms. Look for solutions that can work in resource-constrained spaces. K8s, for example, requires a minimum of approximately 700MB of memory to work. The resource constraints, age of the nodes (operational technology systems have a lifespan averaging seven-plus years), or the nature and diversity of the edge nodes mean that you cannot always count on containerization or virtualization. Edge applications are going to sometimes run on “bare metal” and your deployment/orchestration/management solution must address this. By the way, if you are thinking to “just upgrade the edge” to support these solutions, the scale of the edge makes this very hard and very expensive. Look for solutions that are largely platform agnostic and that don’t require you replace your infrastructure.

- Monitoring of the edge, both node and platform, is different from monitoring servers on an enterprise network. Given the scale, you often can’t afford to have all the telemetry reported to a central location, let alone make sense of it. You need an edge management/monitoring solution that automatically detects issues and then increases the collection of information on troublesome nodes to pinpoint and solve problems. Edge monitoring solutions have to minimize polling and data exchange and maximize push reporting and alerts on issues.

- Edge nodes are connected to sensors and devices that speak all sorts of interesting protocols and data formats. Deploying, orchestrating, onboarding/provisioning, and then monitoring the applications and this connectivity present unique challenges. The right edge applications will help, especially when onboarding sensors and devices. Also, look for management solutions that understand the true nature of the edge connectivity. You don’t want a management solution that can’t negotiate the security constraints, or see through a container or a virtual layer to the device connectivity layer of the platform.

- Edge platforms often work where there is low, no, or intermittent connectivity. Edge management solutions must be able to work “on-prem” and completely remote from the internet for some use cases. Edge nodes, such as those on transportation vehicles, are going to have brief periods of connectivity (and that connectivity may be expensive). Look for edge management systems that can operate mostly independently at the edge and can briefly and cheaply take care of business when there is connectivity.

- Edge nodes are often hard to get to. Their sheer numbers make it difficult to touch each one during a new application rollout. Look for solutions that offer some degree of zero-touch or low-touch onboarding and provisioning. This often requires the nodes to do more by way of reporting their presence and self-bootstrapping.

- Finally, explore edge management solution pricing and make sure it fits your budget. Again, many edge management solutions have grown from enterprise management solutions, and the pricing model often reflects the product origins. Look for an edge management solution that is priced to edge scale. Charging per CPU, per connected sensor/device (thing) under management, or per amount of data transmitted from the edge to the back end can lead to unaffordable solutions. Do your homework to understand what you will have under management, then do the math to confirm that the pricing works at that scale.
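To make “do the math” concrete, here is a minimal Python sketch that compares the three charging models named above for a single fleet. The `Fleet` fields, the function names, and every number used with them are invented for illustration; they do not reflect any vendor’s actual pricing.

```python
from dataclasses import dataclass


@dataclass
class Fleet:
    nodes: int
    cpus_per_node: int
    devices_per_node: int
    gb_per_node_per_month: float


def monthly_cost_per_cpu(fleet: Fleet, price_per_cpu: float) -> float:
    """Cost when the vendor charges for every CPU under management."""
    return fleet.nodes * fleet.cpus_per_node * price_per_cpu


def monthly_cost_per_device(fleet: Fleet, price_per_device: float) -> float:
    """Cost when the vendor charges for every connected sensor/device."""
    return fleet.nodes * fleet.devices_per_node * price_per_device


def monthly_cost_per_gb(fleet: Fleet, price_per_gb: float) -> float:
    """Cost when the vendor charges for data sent from the edge to the back end."""
    return fleet.nodes * fleet.gb_per_node_per_month * price_per_gb


# Hypothetical fleet: 2,000 nodes, each with 4 CPUs, 100 devices, 5 GB/month.
fleet = Fleet(nodes=2000, cpus_per_node=4, devices_per_node=100,
              gb_per_node_per_month=5.0)
costs = {
    "per_cpu": monthly_cost_per_cpu(fleet, 2.00),        # 16000.0
    "per_device": monthly_cost_per_device(fleet, 0.25),  # 50000.0
    "per_gb": monthly_cost_per_gb(fleet, 0.50),          # 5000.0
}
```

Even with small made-up unit prices, the totals differ by an order of magnitude depending on which quantity the vendor meters, which is why it pays to know your node, device, and data counts before comparing quotes.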

These points serve as an important guide. Not all of them may be applicable to your use case and deployment situation. The unique challenges of edge management and the absence of truly edge-specific solutions have prompted a great deal of development activity, including a number of serious open-source initiatives that are starting to emerge in this area.
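The monitoring consideration above (stay quiet while healthy, push alerts on issues, and collect more from troublesome nodes) can be sketched in a few lines of Python. The `NodeMonitor` class, the metric names, the limits, and the interval values are all hypothetical choices for this example, not the behavior of any specific product.

```python
from typing import Dict, List


class NodeMonitor:
    """Node-side monitor: silent while healthy, pushes alerts and samples faster when not."""

    def __init__(self, limits: Dict[str, float],
                 normal_interval_s: int = 300, troubled_interval_s: int = 10):
        self.limits = limits  # hypothetical metric name -> upper limit
        self.normal_interval_s = normal_interval_s
        self.troubled_interval_s = troubled_interval_s
        self.interval_s = normal_interval_s  # how often the node samples itself
        self.outbox: List[dict] = []  # messages to push to the management back end

    def observe(self, metrics: Dict[str, float]) -> None:
        """Check one local sample; push only when a limit is exceeded."""
        breaches = {name: value for name, value in metrics.items()
                    if name in self.limits and value > self.limits[name]}
        if breaches:
            # Troubled node: sample more often and push the full detail upstream.
            self.interval_s = self.troubled_interval_s
            self.outbox.append({"type": "alert", "breaches": breaches,
                                "detail": dict(metrics)})
        else:
            # Healthy node: fall back to sparse sampling and send nothing.
            self.interval_s = self.normal_interval_s
```

The design choice here is that the decision to report is made on the node, so a healthy fleet generates almost no management traffic, and detail flows only from the nodes that need attention.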

What to explore

What are some of the open-source edge management solutions to consider? As mentioned, this is still an up-and-coming field. You might experience some growing pains as these solutions evolve and take shape or find that the solution doesn’t meet all of your needs.

Edge Software Hub – a collection of edge software packages created by Intel that includes elements such as Edge Software Installer (formerly Retail Node Installer). It automates the installation of a complete operating system and software stack (defined by a Profile) on bare-metal or virtual machines, using a "Just-in-Time" provisioning process.

Project EVE – one of the many open-source projects under LF Edge. EVE is an open edge computing engine that enables the development, orchestration and security of cloud-native and legacy applications on distributed edge compute nodes. Supporting containers and clusters (Docker and Kubernetes), virtual machines and unikernels, Project EVE aims to provide a flexible foundation for IoT edge deployments.

Open Horizon – another project under LF Edge that was initiated by IBM. Open Horizon is a platform for managing the service software lifecycle of containerized workloads and related machine-learning assets. It enables autonomous management of applications deployed to distributed webscale fleets of edge computing nodes and devices without requiring on-premise administrators.

At IOTech, we have been developing a new edge management solution called Edge Builder. We believe that Edge Builder will help address the considerations described previously while incorporating many open-source tools and technologies, including some of the above. We recently unveiled the first version of the product so that customers can begin exploring pilot and proof-of-concept projects. An updated version of the product will be available around April 2021. Initially focused on application deployment and orchestration, we are also working toward managing and monitoring the edge nodes themselves. Using open-source technology at the base, we are working to create a platform-agnostic, edge-centric, and affordable edge management solution to complement our existing edge applications.

Edge management is a challenge that is starting to receive serious industry attention and there are plenty of good things to come in this area, so stay tuned.